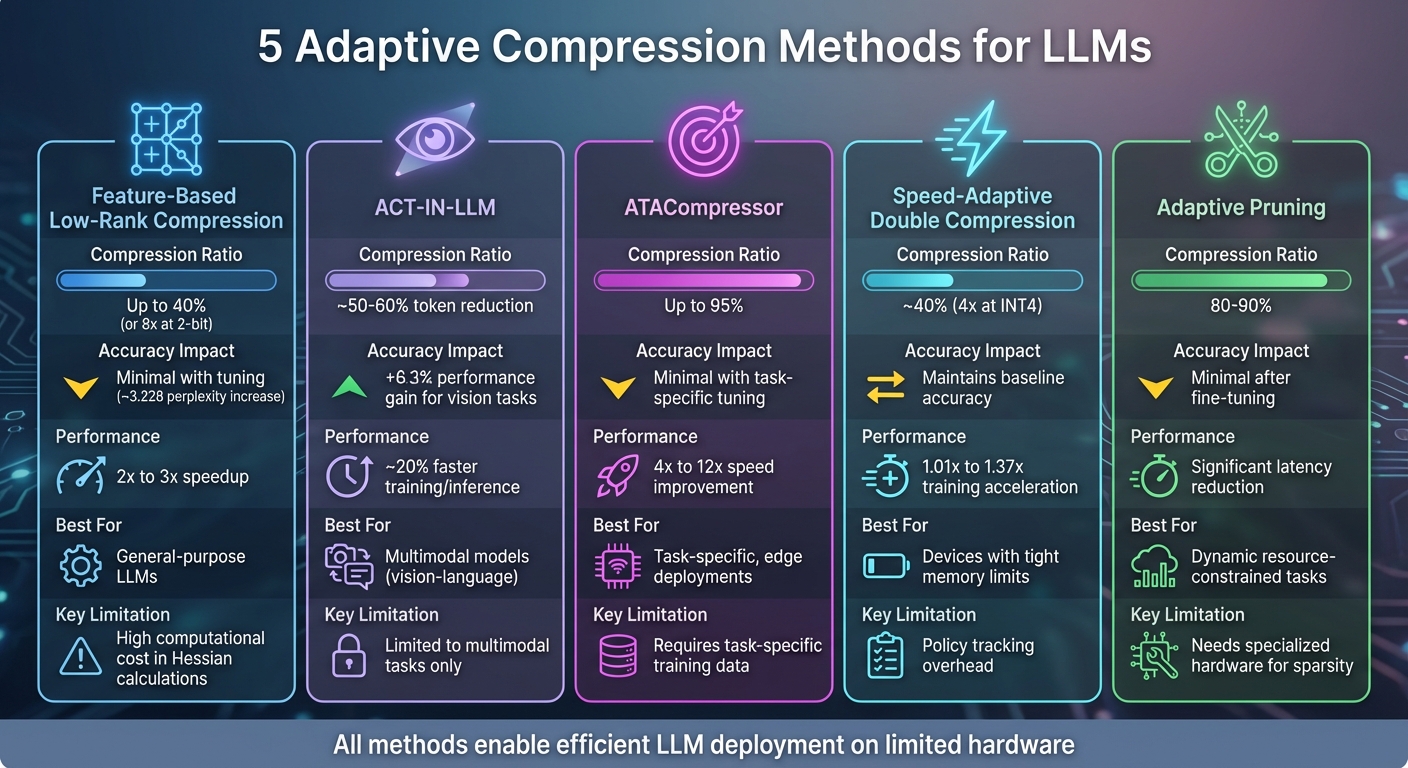

5 Adaptive Compression Methods for LLMs

Five adaptive LLM compression methods—low-rank SVD, vision token resampling, task-aware mixed precision, quantize+prune, and adaptive pruning—that reduce model size while preserving accuracy.

Large language models (LLMs) like Llama-3.1-405B require immense memory and computational resources, making deployment challenging. Adaptive compression methods dynamically reduce model size while preserving accuracy, cutting costs, and improving efficiency. Here's a breakdown of five methods:

- Feature-Based Low-Rank Compression: Uses SVD to reduce weight matrices, achieving up to 40% compression with minimal accuracy loss.

- ACT-IN-LLM: Compresses vision tokens in multimodal models, reducing memory usage by 50% and improving performance for vision-language tasks.

- ATACompressor: Task-specific compression using mixed-precision techniques, achieving up to 95% size reduction with minimal performance impact.

- Speed-Adaptive Double Compression: Combines quantization and pruning to optimize speed and memory usage, reducing size by 40%.

- Adaptive Pruning with Optimization: Selectively removes redundant model components, cutting memory needs by up to 90% while maintaining accuracy.

Each method offers unique benefits depending on the task, hardware, and performance requirements.

Comparison of 5 Adaptive Compression Methods for LLMs

Quick Comparison

| Method | Compression Ratio | Accuracy Impact | Best Use Case | Key Limitation |

|---|---|---|---|---|

| Feature-Based Low-Rank | Up to 40% | Minimal with tuning | General-purpose LLMs | High computational cost |

| ACT-IN-LLM | ~50% | Improved for vision | Multimodal models (vision-language) | Limited to multimodal tasks |

| ATACompressor | Up to 95% | Minimal | Task-specific, edge deployments | Requires task-specific data |

| Speed-Adaptive Double | ~40% | Maintains baseline | Devices with tight memory limits | Overhead from dynamic tuning |

| Adaptive Pruning | 80–90% | Minimal after tuning | Dynamic resource-constrained tasks | Needs hardware for sparsity |

These methods make LLMs more efficient for real-world applications, enabling deployment on limited hardware or reducing operational costs.

1. Feature-Based Low-Rank Compression

Feature-based low-rank compression uses Singular Value Decomposition (SVD) to break down large weight matrices into two smaller ones, U and V. The product of these smaller matrices closely approximates the original, significantly reducing the total number of parameters and cutting down memory usage.

The term "feature-based" highlights the role of activation data in guiding compression. Methods like ASVD and SVD-LLM focus on activation distributions to minimize errors where precision matters most. Meanwhile, advanced approaches such as D-Rank take this further by tailoring compression levels for each layer. This is based on the "effective rank", which measures how much information a layer holds. Middle layers, which carry denser information, are assigned higher rank budgets, while early and late layers undergo more aggressive compression. This strategy has proven highly effective in boosting efficiency, as explained in the following sections.

Compression Efficiency

In January 2025, researchers from Samsung Research America and Florida State University introduced FlexiGPT, a system combining low-rank compression with weight-sharing and adapters. This model achieved state-of-the-art performance across six benchmarks for LLaMA-2 7B, all while compressing the model by 40%. Impressively, they extended a 22-layer TinyLLaMA model using just 0.3% of the original training tokens.

SVD-based methods like these can hit compression rates of 30% to 40%, making them highly efficient without requiring specialized hardware. In contrast, quantization techniques often depend on custom CUDA kernels to achieve similar results. This efficiency translates directly into better accuracy retention, even under significant compression.

Impact on Accuracy Retention

Adaptive low-rank methods go beyond just improving efficiency - they also help maintain accuracy. By redistributing rank from less critical matrices to those with higher information density, these methods preserve performance even under aggressive compression. Traditional SVD often results in performance gaps, but adaptive techniques like D-Rank narrow those gaps significantly. For instance, D-Rank achieved over 15 lower perplexity on the C4 dataset with LLaMA-3-8B at a 20% compression rate compared to standard SVD. Additionally, adaptive rank allocation has been shown to improve zero-shot reasoning accuracy by up to 5% in LLaMA-7B models compressed by 40%.

A key factor in this success is the reallocation of rank budgets. For example, query and key matrices ($W^Q$ and $W^K$), which hold less dense information, are compressed more heavily. This allows value matrices ($W^V$), which are richer in information, to retain higher ranks.

Adaptability to Specific Tasks or Model Characteristics

Low-rank compression also adapts well to specific tasks or model structures. For example, Bayesian optimization has been used to automatically distribute low-rank dimensions across layers. Researchers at EMNLP demonstrated this on LLaMA-2 models, where it outperformed structured pruning by leveraging pooled covariance matrices to better estimate feature distributions.

As Yixin Ji and colleagues emphasized:

"The key to low-rank compression lies in low-rank factorization and low-rank dimensions allocation".

Replacing pruned blocks with low-rank adapters further enhances stability. Output feature normalization plays a crucial role here, gradually adjusting output magnitudes to stabilize the model.

2. ACT-IN-LLM for Vision Token Compression

ACT-IN-LLM, also known as AdaTok in research, introduces a vision-based approach to token compression for multimodal large language models (LLMs). Instead of compressing text tokens directly, this method converts text into images and employs a Perceiver Resampler to distill visual features into fixed tokens. By focusing on visual compression, it addresses the issue of quadratic growth in visual tokens - a common challenge in transformer-based architectures used for multimodal tasks. This approach offers a practical path toward improving efficiency.

Compression Efficiency

When handling 4,096 text tokens, ACT-IN-LLM transforms them into 28 images and compresses them into 1,792 tokens. This includes 64 tokens per image plus one classification token. This process cuts memory usage in half and reduces computational FLOPs by 16% compared to conventional text encoders. The framework also allows developers to fine-tune the compression ratio, offering flexibility to balance efficiency and information retention based on specific requirements.

Adaptability to Specific Tasks or Model Characteristics

ACT-IN-LLM adopts an object-level token merging approach, mimicking how humans perceive visual data by moving away from rigid patch-based scanning. This makes it particularly effective in multimodal systems where visual and textual inputs need to integrate smoothly. Furthermore, the framework is task-agnostic, delivering consistent performance across a variety of vision-related tasks while maintaining compatibility with transformer-based multimodal models. Like other adaptive compression methods, ACT-IN-LLM dynamically tailors its process to meet the demands of multimodal applications while safeguarding essential information.

3. ATACompressor Task-Aware Compression

ATACompressor, also known as Delta-CoMe, applies a mixed-precision delta compression technique to minimize the size of delta weights between task-aligned models and their base counterparts. It takes advantage of the fact that the singular values in these weights tend to follow a long-tail distribution. By assigning higher-bit precision to the more important singular vectors and using lower precision for less critical components, it achieves a more efficient compression process.

Tailored for Specific Tasks and Model Features

The system uses a dynamic token routing mechanism to fine-tune compression based on the specific task or model characteristics. This mechanism employs a learnable router that skips less critical tokens during inference. The decision to skip is influenced by factors like token position, absolute and relative attention scores, and block-specific sparsity patterns. This allows the compression process to adapt dynamically to the needs of each task.

Maximizing Compression Efficiency

To further enhance efficiency, ATACompressor incorporates a decoupled sparsity scheduling system, fine-tuned using genetic algorithms. This scheduler adjusts the compression intensity for each layer, striking a balance between reducing memory usage and preserving essential information. When combined with its mixed-precision approach, the result is a method that significantly reduces memory demands while maintaining the model's effectiveness in performing specialized tasks. This task-aware compression strategy builds on earlier techniques, pushing the boundaries of large language model efficiency even further.

4. Speed-Adaptive Double Compression

Speed-Adaptive Double Compression takes the concept of optimizing inference speed to the next level by further compressing a quantized large language model (LLM). The goal? To tackle decompression bottlenecks and improve overall efficiency.

Compression Efficiency

This approach begins with compression-aware quantization, which adjusts parameters to make them easier to compress. It then prunes unnecessary parameters, achieving a compression ratio that's 2.2 times higher than standard quantization methods. The result? A 40% reduction in memory size, making it a practical solution for devices with tight memory limitations. This streamlined memory usage ensures the model can still perform effectively, even under stringent hardware constraints.

Performance Improvements

Even with significant compression, the model's accuracy and speed remain virtually unaffected. Thanks to its speed-adaptive design, the system continuously evaluates the trade-offs between memory and latency. It dynamically tweaks compression settings to align with the device's capabilities. This ensures that the decompression process doesn't slow things down during inference, keeping operations smooth and efficient - even on devices with limited resources.

5. Adaptive Pruning with Optimization

Adaptive pruning with optimization focuses on selectively removing parts of a model based on their importance, rather than applying a uniform pruning rate across all layers. This method acknowledges that different components in large language models (LLMs) have varying levels of redundancy. Below, we explore how adaptive pruning improves compression efficiency, accuracy, task-specific adaptability, and overall performance.

Compression Efficiency

In July 2024, NVIDIA researchers Saurav Muralidharan and Pavlo Molchanov introduced the Minitron family by pruning the Nemotron-4 15B model into smaller 8B and 4B versions. They achieved these reductions by combining width and depth pruning with knowledge distillation, leading to a 1.8x reduction in compute costs across the entire model family. This approach also required significantly fewer training tokens - 40 times fewer than building the models from scratch.

"Deriving 8B and 4B models from an already pretrained 15B model using our approach requires up to 40x fewer training tokens per model compared to training from scratch." – Saurav Muralidharan, Research Scientist, NVIDIA

Impact on Accuracy Retention

Adaptive pruning has been shown to preserve and even enhance accuracy. In February 2025, Rui Pan's research team applied the Adapt-Pruner technique to LLaMA-3.1-8B, outperforming traditional methods like FLAP and SliceGPT by 1% to 7% on commonsense benchmarks. Additionally, the MobileLLM-125M model, when pruned adaptively, matched the performance of a 600M model on the MMLU benchmark while using 200 times fewer tokens than conventional pre-training methods. The Minitron models, for instance, demonstrated up to a 16% improvement in MMLU scores compared to models trained from scratch.

Adaptability to Specific Tasks

Modern frameworks are designed to adjust pruning dynamically, tailoring models to specific tasks in real time. For example, RAP (Runtime Adaptive Pruning) uses reinforcement learning to monitor and adjust the balance between model parameters and KV-cache, optimizing compression based on workload and memory conditions. Similarly, ZipLM identifies and removes components with the poorest loss-runtime trade-off, ensuring the pruned model meets speedup targets for specific inference environments. For 70B models, adaptive quantization and pruning calibration can be completed within 1 to 6 hours on a single GPU. These techniques highlight the flexibility and efficiency of adaptive pruning in dynamic scenarios.

Performance Improvements

Adaptive pruning not only enhances adaptability but also delivers measurable performance benefits. For instance, ZipLM-compressed GPT2 models are 60% smaller and 30% faster than DistilGPT2, all while maintaining better performance. Another method, incremental pruning, removes about 5% of neurons at a time, interleaving pruning with training. This approach ensures both speed and accuracy are preserved even after substantial compression.

Comparison Table

The table below provides a breakdown of five adaptive compression methods, comparing their compression ratios, impact on accuracy, adaptability to specific tasks, and performance improvements. Each method has its own strengths and trade-offs, depending on your requirements.

| Method | Compression Ratio | Accuracy Impact | Task-Specific Adaptability | Performance Improvement | Key Trade-off |

|---|---|---|---|---|---|

| Feature-Based Low-Rank | Up to 8x (2-bit) | ~3.228 perplexity increase on C4 | General-purpose for standard LLMs | 2x to 3x speedup | High complexity in Hessian calculations |

| ACT-IN-LLM | ~60% token reduction | 6.3% performance gain | Specialized for vision-language tasks | ~20% faster training/inference | Limited to multimodal models only |

| ATACompressor (Task-Aware) | Up to 95% | Minimal with task-specific tuning | Optimized per task/hardware | 4x to 12x speed improvement | Requires task-specific training data |

| Speed-Adaptive Double | 4x (INT4) | Maintains baseline accuracy | Ideal for dynamic training environments | 1.01x to 1.37x training acceleration | Policy tracking overhead |

| Adaptive Pruning | 80–90% | Minimal after fine-tuning | Dynamic resource adjustment per input | Significant latency reduction | Needs specialized hardware for sparsity |

Here's a quick overview to help you decide which method might suit your needs best:

- ATACompressor delivers the highest compression ratio at 95%, making it a strong choice for tasks where size reduction is critical, such as edge deployments.

- ACT-IN-LLM specializes in reducing vision tokens by 60%, offering a 6.3% performance boost for multimodal applications like vision-language tasks.

- Speed-Adaptive Double Compression uses Mixed-Integer Linear Programming to adjust its compression policy efficiently, adapting to data outliers in under 0.5 seconds.

For maximum size reduction, ATACompressor is a top pick. If you're working on multimodal tasks, ACT-IN-LLM shines. And for environments requiring fast training throughput, Speed-Adaptive Double Compression offers a solid balance of speed and accuracy.

Conclusion

Adaptive compression techniques are reshaping how large language models (LLMs) are deployed and scaled. These methods tackle one of the biggest challenges in AI: balancing high performance with the practical limitations of hardware and resources. Whether you're running models on edge devices or managing long-context tasks in the cloud, these strategies help create systems that are both efficient and accurate.

The efficiency gains highlighted earlier stem from this dynamic approach. The five strategies we explored show how adaptive compression can significantly reduce model size while maintaining high-quality outputs. As Alina Shutova and her team pointed out, "The memory footprint of a full-length Key-Value cache can reach tens of gigabytes of device memory, sometimes more than the model itself". By tailoring compression to the importance of each layer, these methods adjust dynamically to hardware conditions and task-specific needs.

For AI engineers working on production systems, the choice between lossless and lossy compression depends on how much accuracy your application can afford to trade. Tasks requiring precise reasoning typically lean toward lossless methods, while general-purpose applications can often handle higher compression ratios without noticeable quality loss. The key is knowing your workload's specific needs and finding the right balance between performance, cost, and accuracy.

Discover more about scalable LLM deployments at Latitude.

FAQs

How do adaptive compression techniques ensure LLMs remain accurate?

Large language models (LLMs) rely on adaptive compression techniques to maintain their accuracy while optimizing data storage and processing. These approaches focus on compressing parts of the model that are less critical. For instance, activation-aware weight quantization targets weights that have a smaller impact on performance. At the same time, lossless encoding methods - like dynamic-length floating-point formats - ensure the outputs remain identical to the uncompressed version.

To further enhance efficiency, adaptive quantizers are fine-tuned using real-world data. This allows for effective cache compression with minimal impact on performance, typically resulting in less than 1% relative error in perplexity and no noticeable decline in downstream task results. These methods make it possible to scale models efficiently without sacrificing quality or reliability.

What is the best adaptive compression method for deploying LLMs on edge devices?

The layer-wise unified compression (LUC) with adaptive layer-tuning approach, as outlined in Edge-LLM, is tailored for running large language models (LLMs) on edge devices. With LUC, the pruning sparsity and quantization bit-width for each layer are automatically adjusted, which helps cut down on both computational demands and memory usage. The adaptive layer-tuning process focuses on fine-tuning only the most essential layers, striking a balance between maintaining accuracy and improving efficiency.

This approach is built specifically for the constraints of edge hardware and consistently delivers better results than standard compression methods in terms of latency and power consumption during real-world testing. Latitude’s open-source tools further streamline the process by simplifying the definition of LUC policies, managing tuning workflows, and monitoring performance metrics, making it more straightforward to deploy high-performing LLMs on edge devices.

Can adaptive compression be used for multimodal models like those handling text and images?

Adaptive compression techniques, initially developed for text-based large language models (LLMs), can also be extended to multimodal models (MLLMs) that handle inputs like text and images. Take ACT-IN-LLM, for instance. This method focuses on adaptively compressing vision tokens within the self-attention layers rather than eliminating them before they reach the language model. The result? A reduction of vision tokens by around 60% and a 20% decrease in inference time - all while maintaining or even enhancing accuracy on high-resolution benchmarks.

These techniques demonstrate how adaptive, layer-specific token compression can process multimodal inputs, such as images or audio, efficiently without compromising performance. Platforms like Latitude play a key role in this space by equipping engineers and domain experts with tools to prototype, test, and deploy such strategies, ensuring models are both efficient and ready for production.