Best Practices for Dataset Version Control

Effective dataset version control is crucial for reproducibility, debugging, and compliance in AI development and LLM workflows.

Why It Matters:

- Reproducibility: Ensures experiments can be repeated with the same data.

- Debugging: Links performance changes to dataset updates.

- Collaboration: Keeps teams aligned with consistent datasets.

- Compliance: Tracks changes for audits and regulations.

Key Strategies:

- Use Metadata: Track version IDs, timestamps, quality metrics, and processing steps.

- Automate Quality Control: Implement checks for anomalies, completeness, and schema validation.

- Adopt Tools: Use platforms like DVC, LakeFS, or Delta Lake for efficient tracking and storage.

- LLM-Specific Tactics: Synchronize prompts with datasets and monitor dataset drift using statistical and embedding analysis.

Quick Tip:

Start small by piloting version control tools and workflows before scaling to larger projects.

Dataset version control isn’t just a tool; it’s a necessity for efficient AI development.

Core Dataset Version Control Principles

These principles help teams implement version control systematically, ensuring data accuracy and reproducibility throughout their workflows.

Dataset Snapshots and Metadata

Creating reliable dataset snapshots starts with content-based addressing: each snapshot is identified by a hash derived from its data, which prevents accidental overwrites and makes any change to the contents detectable.

Each snapshot should include detailed metadata for complete traceability:

| Metadata Component | Description |
|---|---|
| Version Identifier | Unique reference for tracking versions |
| Timestamp & Author | Records of creation time and ownership |
| Parent Version | Tracks data lineage |
| Processing Steps | Documents transformations applied |
| Quality Metrics | Indicators of data quality |
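As a rough illustration of how content-based addressing and the metadata fields above fit together, here is a minimal sketch using only the Python standard library. The `SnapshotMetadata` class, the `make_snapshot` helper, and the sample data are hypothetical names chosen for this example, not part of any particular tool.

```python
import hashlib
import json
from dataclasses import asdict, dataclass, field
from datetime import datetime, timezone
from typing import Optional


def content_hash(data: bytes) -> str:
    """Return a SHA-256 hex digest that identifies the data by its content."""
    return hashlib.sha256(data).hexdigest()


@dataclass
class SnapshotMetadata:
    # Hypothetical field names that mirror the metadata table above.
    version_id: str
    author: str
    created_at: str
    parent_version: Optional[str]
    processing_steps: list = field(default_factory=list)
    quality_metrics: dict = field(default_factory=dict)


def make_snapshot(data, author, parent=None, steps=None, metrics=None):
    """Build a metadata record whose version ID is derived from the data itself."""
    return SnapshotMetadata(
        version_id=content_hash(data),
        author=author,
        created_at=datetime.now(timezone.utc).isoformat(),
        parent_version=parent,
        processing_steps=list(steps or []),
        quality_metrics=dict(metrics or {}),
    )


# Illustrative data only.
raw = b"id,text\n1,hello\n2,world\n"
v1 = make_snapshot(raw, author="alice", steps=["ingest"], metrics={"rows": 2})

cleaned = raw.replace(b"hello", b"Hello")
v2 = make_snapshot(
    cleaned,
    author="alice",
    parent=v1.version_id,
    steps=["ingest", "capitalize"],
    metrics={"rows": 2},
)

print(json.dumps(asdict(v2), indent=2))
```

Because the identifier comes from the bytes, identical data always maps to the same version ID and any edit produces a new one, so a snapshot cannot be silently overwritten under an existing ID.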

Data History and Quality Control

Maintaining a clear data history requires a mix of automated and manual quality checks. Modern systems often use delta encoding, which stores only the differences between versions rather than full copies, to keep that history efficient.

Automated quality control should cover:

- Statistical anomaly detection

- Validation of format and completeness

- Integration with CI/CD pipelines

- Real-time alerts for issues

Additionally, peer reviews for dataset changes are essential. Use automated tools to compare statistics between versions for added precision.
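One way to compare statistics between versions is sketched below. It assumes a single numeric column (here, hypothetical text lengths) and a 10% relative tolerance; both the `compare_versions` helper and the tolerance are illustrative choices, not a recommended standard.

```python
import statistics


def summarize(values):
    """Compute a few summary statistics for one numeric column."""
    return {
        "count": len(values),
        "mean": statistics.fmean(values),
        "stdev": statistics.pstdev(values),
        "min": min(values),
        "max": max(values),
    }


def compare_versions(old, new, rel_tolerance=0.10):
    """Return the statistics whose relative change exceeds rel_tolerance."""
    old_stats, new_stats = summarize(old), summarize(new)
    flagged = {}
    for name, old_value in old_stats.items():
        new_value = new_stats[name]
        baseline = abs(old_value) or 1.0  # avoid division by zero
        if abs(new_value - old_value) / baseline > rel_tolerance:
            flagged[name] = (old_value, new_value)
    return flagged


# Hypothetical text lengths from two dataset versions.
v1_lengths = [120, 135, 128, 140, 132]
v2_lengths = [121, 134, 129, 139, 410]  # one outlier slipped in

flagged = compare_versions(v1_lengths, v2_lengths)
print(sorted(flagged))
```

A reviewer could attach this kind of report to each dataset change so that the peer review focuses on the statistics that actually moved.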

"Model training runs should automatically log dataset versions to isolate performance changes between code and data updates", according to a leading ML infrastructure engineer.
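A minimal sketch of this idea follows: each run record stores both a code version and a dataset fingerprint, so two runs can be compared to see which of the two changed. The record layout, the `build_run_record` helper, the commit placeholder, and the metric values are all assumptions made up for the example.

```python
import hashlib
import json
import time


def dataset_fingerprint(rows):
    """Hash the rows in order so any change to the data changes the fingerprint."""
    digest = hashlib.sha256()
    for row in rows:
        digest.update(json.dumps(row, sort_keys=True).encode("utf-8"))
        digest.update(b"\n")
    return digest.hexdigest()


def build_run_record(run_name, code_version, rows, metrics):
    """Bundle code version, dataset version, and metrics into one log entry."""
    return {
        "run_name": run_name,
        "code_version": code_version,
        "dataset_version": dataset_fingerprint(rows),
        "logged_at": time.time(),
        "metrics": metrics,
    }


# Illustrative rows and metrics; "abc123" stands in for a real commit ID.
rows_v1 = [{"text": "good", "label": 1}, {"text": "bad", "label": 0}]
rows_v2 = rows_v1 + [{"text": "fine", "label": 1}]

run_a = build_run_record("baseline", "abc123", rows_v1, {"accuracy": 0.90})
run_b = build_run_record("more-data", "abc123", rows_v2, {"accuracy": 0.93})

same_code = run_a["code_version"] == run_b["code_version"]
same_data = run_a["dataset_version"] == run_b["dataset_version"]
print(f"same code: {same_code}, same data: {same_data}")
```

Here the code version is unchanged and the dataset fingerprint differs, so the difference in metrics can be attributed to the data update rather than to a code change.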

These practices are especially crucial for LLM development, where managing prompt-dataset relationships and addressing model drift become priorities. Both topics are covered in later sections.

Setting Up Version Control Systems

To effectively implement version control, it's essential to align the methods with your team's specific needs. A 2023 Kaggle survey revealed that while 31% of data scientists rely on dedicated version control tools, 45% still use manual versioning methods.

Version Control Methods

Modern techniques go beyond simple file duplication, offering more advanced tracking options.

| Method | Best For | Storage Impact | Implementation Complexity |
|---|---|---|---|
| Full Duplication | Small datasets, simple workflows | High (100% per version) | Low |
| Metadata Tracking | Structured data, frequent updates | Medium (10-20% overhead) | Medium |
| Dedicated VCS | Large-scale ML projects | Low (typically 40% reduction) | High |

Data Storage Methods

Balancing accessibility, performance, and cost is key when managing data storage.

- Choose the right storage backend: For scalability, cloud storage solutions like AWS S3 work well, while local storage is suitable for smaller teams.

- Implement deduplication strategies: Advanced version control systems utilize delta storage and content-based addressing to reduce redundancy effectively.
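To show why content-based addressing reduces redundancy, here is a toy in-memory store that keeps each unique chunk once and lets versions share chunks. It is a simplified sketch of chunk-level deduplication, not delta encoding and not how any specific tool implements storage; the `ContentStore` class and shard contents are hypothetical.

```python
import hashlib


class ContentStore:
    """Toy in-memory store that keeps each unique chunk once, keyed by its hash."""

    def __init__(self):
        self.blobs = {}     # chunk hash -> chunk bytes
        self.versions = {}  # version name -> ordered list of chunk hashes

    def commit(self, name, chunks):
        keys = []
        for chunk in chunks:
            key = hashlib.sha256(chunk).hexdigest()
            self.blobs.setdefault(key, chunk)  # already-stored chunks are reused
            keys.append(key)
        self.versions[name] = keys

    def checkout(self, name):
        return [self.blobs[key] for key in self.versions[name]]

    def stored_bytes(self):
        return sum(len(blob) for blob in self.blobs.values())


store = ContentStore()

# Two versions that share two of their three shards (illustrative data).
v1 = [b"shard-a" * 100, b"shard-b" * 100, b"shard-c" * 100]
v2 = [v1[0], v1[1], b"shard-d" * 100]

store.commit("v1", v1)
store.commit("v2", v2)

naive = sum(len(chunk) for chunk in v1 + v2)
print(f"full duplication: {naive} bytes, deduplicated: {store.stored_bytes()} bytes")
```

Both versions remain fully retrievable through `checkout`, while the two shared shards are stored only once.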

Available Tools and Platforms

Several tools can help integrate version control into your workflows while maintaining metadata and quality control.

| Tool | Key Features | Best Use Case |

|---|---|---|

| DVC | Git-like interface, ML pipeline integration | End-to-end ML workflows |

| LakeFS | Data lake versioning, atomic operations | Large-scale data operations |

| Delta Lake | ACID transactions, time travel | Structured data in Spark |

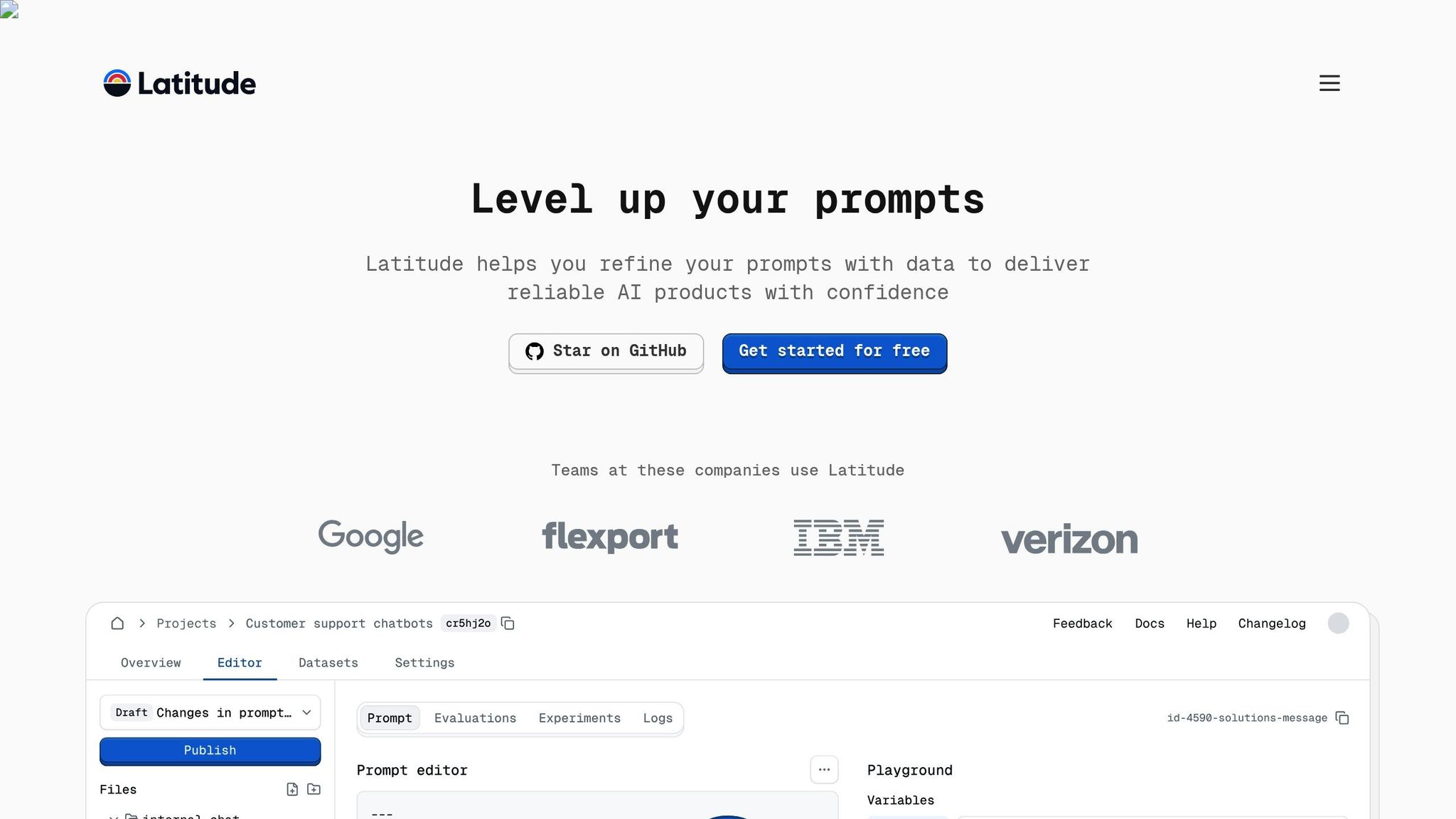

| Latitude | Prompt-dataset synchronization, LLM optimization | LLM development workflows |

For teams working on LLM development, Latitude offers features tailored to managing the critical relationship between prompts and datasets.

When adopting these tools, it's a good idea to begin with smaller pilot projects. This approach allows you to test and refine the integration within your existing MLOps pipelines before scaling up to full production.

Team Guidelines and Workflows

Managing dataset versions effectively requires clear protocols and automated workflows. A structured system not only ensures high data quality but also simplifies team collaboration. This becomes even more crucial when dealing with LLM training data, as discussed in later sections.

Dataset Branching Strategies

Dataset branching borrows concepts from code version control but must also prioritize data integrity. Adopting a GitFlow-inspired branching model tailored for datasets can help. Each branch type should serve a specific purpose:

| Branch Type | Purpose | Merge Requirements |
|---|---|---|
| Main | Production-ready datasets | Requires quality checks and approvals |
| Feature | Adding new data sources or preprocessing | Needs validation and peer review |
| Release | Specific versions for model training | Must include documentation and metrics |
| Experiment | For testing and validation | Requires results documentation |

Tip: Refer to the Data Storage Methods section above for storage options.

Quality Control Automation

Automating quality checks is essential for preserving dataset integrity. Teams that use automated systems report a 73% drop in data-related errors during production.

"Implementing automated validation pipelines reduced our dataset-related incidents by 85% in the first quarter after deployment", says Alex Johnson, Principal Data Scientist at Airbnb.

The best results come from combining pre-commit validation, distribution monitoring, and schema enforcement.
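As one possible shape for the schema-enforcement piece, the sketch below validates rows against an expected schema and returns a list of problems. The schema, field names, and `validate_rows` helper are hypothetical; a real pipeline would likely use a dedicated validation library and richer rules.

```python
# Hypothetical schema: field name -> expected Python type.
EXPECTED_SCHEMA = {"id": int, "text": str, "label": int}


def validate_rows(rows, schema=EXPECTED_SCHEMA):
    """Return a list of human-readable problems; an empty list means the rows pass."""
    problems = []
    for index, row in enumerate(rows):
        missing = schema.keys() - row.keys()
        extra = row.keys() - schema.keys()
        if missing:
            problems.append(f"row {index}: missing fields {sorted(missing)}")
        if extra:
            problems.append(f"row {index}: unexpected fields {sorted(extra)}")
        for name, expected_type in schema.items():
            if name in row and not isinstance(row[name], expected_type):
                problems.append(f"row {index}: {name} should be {expected_type.__name__}")
    return problems


# Illustrative rows: the second has a wrong type, the third is incomplete.
rows = [
    {"id": 1, "text": "hello", "label": 0},
    {"id": "2", "text": "world", "label": 1},
    {"id": 3, "label": 1},
]

problems = validate_rows(rows)
for problem in problems:
    print(problem)
```

In a pre-commit hook or CI job, a non-empty problem list would typically cause the script to exit with a non-zero status so the dataset change is blocked until it is fixed.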

Team Collaboration Methods

Effective collaboration hinges on clearly defined protocols and tools that support multi-user workflows. In fact, 92% of data scientists say their productivity improves with structured collaboration guidelines.

| Collaboration Aspect | Implementation |
|---|---|
| Access Control | Use role-based permissions |
| Change Review | Require mandatory peer reviews |
| Conflict Resolution | Enable automated merging with reviews |

To streamline teamwork, assign domain reviewers, document changes with impact assessments, and hold regular sync meetings. For LLM teams, these practices help ensure prompt updates and dataset versions stay aligned.

Start with pilot projects before rolling out these methods across the organization. This allows teams to identify and address workflow challenges early.

LLM-Specific Version Control

Developing large language models (LLMs) comes with unique challenges, especially when dealing with prompt-dataset dependencies and the frequent occurrence of dataset drift. In fact, 90% of machine learning models face dataset drift within just three months of deployment.

Prompt and Dataset Synchronization

In LLM workflows, prompts and datasets must be treated as a single, inseparable unit. This requires atomic versioning to ensure consistency.

| Component | Version Control Needs | Implementation Method |
|---|---|---|
| Prompt-Dataset Pairs | Explicit version linkage | Config files |
| Dependencies | Automated tracking | Hash verification |
| Testing | Compatibility validation | Automated tests |

To manage these relationships, you can use versioned configuration files like the example below:

    # Quote versions so YAML keeps them as strings (1.10 would otherwise parse as 1.1)
    version: "1.0"
    prompt:
      version: "2.3"
      hash: a1b2c3d4e5f6
    dataset:
      version: "4.1"
      hash: f6e5d4c3b2a1

This approach ensures that every prompt-dataset pair is explicitly tracked and can be reproduced reliably.
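The hash verification step could look roughly like the following sketch. It mirrors the config structure above as a plain dictionary (to avoid a YAML dependency) and assumes the hashes are the first 12 hex characters of a SHA-256 digest; that truncation, the helper names, and the sample prompt and dataset are assumptions for illustration.

```python
import hashlib


def short_hash(content: str, length: int = 12) -> str:
    """First `length` hex characters of the SHA-256 digest (an assumed convention)."""
    return hashlib.sha256(content.encode("utf-8")).hexdigest()[:length]


def build_config(prompt_text, prompt_version, dataset_text, dataset_version):
    """Create a config that pins a prompt and a dataset together by version and hash."""
    return {
        "version": "1.0",
        "prompt": {"version": prompt_version, "hash": short_hash(prompt_text)},
        "dataset": {"version": dataset_version, "hash": short_hash(dataset_text)},
    }


def verify_pair(config, prompt_text, dataset_text):
    """Return the components whose current content no longer matches the config."""
    mismatches = []
    if short_hash(prompt_text) != config["prompt"]["hash"]:
        mismatches.append("prompt")
    if short_hash(dataset_text) != config["dataset"]["hash"]:
        mismatches.append("dataset")
    return mismatches


# Illustrative prompt and dataset contents.
prompt = "Summarize the following support ticket:\n{ticket}"
dataset = "ticket_id,text\n1,Printer offline\n"
config = build_config(prompt, "2.3", dataset, "4.1")

unchanged = verify_pair(config, prompt, dataset)
edited = verify_pair(config, prompt + " Be concise.", dataset)
print(unchanged, edited)
```

Running a check like this in automated tests catches the case where a prompt or dataset is edited without a matching config update.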

Dataset Drift Monitoring

Dataset drift is one of the biggest challenges in LLM development, as it can degrade model performance over time. Setting up monitoring systems helps detect and address these changes early.

Key monitoring strategies include:

- Statistical Analysis: Use metrics like Kullback-Leibler (KL) divergence and Jensen-Shannon (JS) divergence to measure shifts in data distributions. These metrics provide a quantitative way to detect subtle changes.

- Embedding Space Analysis: Visualize shifts in embedding spaces using dimensionality reduction techniques. If the similarity between embeddings of the reference data and new data drops below a threshold (e.g., 0.85), flag the change for manual review.

- Automated Alerts: Implement systems that notify your team when significant drift is detected, allowing for timely updates to datasets and models.
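The statistical and embedding checks above can be sketched with the standard library alone. This example computes JS divergence between two token distributions and the cosine similarity between the centroids of two embedding sets. Comparing centroids is just one simple interpretation of "embedding similarity"; the token lists and the 3-dimensional vectors are made-up toy data, and 0.85 is the example threshold from the text.

```python
import math
from collections import Counter

SIMILARITY_THRESHOLD = 0.85  # example threshold from the text above


def distribution(tokens, vocabulary):
    """Relative frequency of each vocabulary item in tokens."""
    counts = Counter(tokens)
    return [counts[term] / len(tokens) for term in vocabulary]


def kl_divergence(p, q):
    """KL(p || q) in bits; terms with p_i == 0 contribute nothing."""
    return sum(pi * math.log2(pi / qi) for pi, qi in zip(p, q) if pi > 0)


def js_divergence(p, q):
    """Jensen-Shannon divergence: symmetric and bounded by 1 when using log base 2."""
    m = [(pi + qi) / 2 for pi, qi in zip(p, q)]
    return 0.5 * kl_divergence(p, m) + 0.5 * kl_divergence(q, m)


def centroid(vectors):
    """Element-wise mean of a list of equal-length vectors."""
    return [sum(column) / len(vectors) for column in zip(*vectors)]


def cosine_similarity(a, b):
    dot = sum(x * y for x, y in zip(a, b))
    norms = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norms


# Toy token samples from a reference dataset and a newer one.
reference_tokens = "refund order refund shipping order refund".split()
current_tokens = "login password login refund password login".split()
vocabulary = sorted(set(reference_tokens) | set(current_tokens))
p = distribution(reference_tokens, vocabulary)
q = distribution(current_tokens, vocabulary)
drift_score = js_divergence(p, q)

# Toy embeddings; real ones would come from an embedding model.
reference_embeddings = [[1.0, 0.0, 0.0], [0.9, 0.1, 0.0]]
current_embeddings = [[0.2, 0.9, 0.1], [0.1, 1.0, 0.0]]
similarity = cosine_similarity(centroid(reference_embeddings), centroid(current_embeddings))
needs_review = similarity < SIMILARITY_THRESHOLD

print(f"JS divergence: {drift_score:.3f}, centroid similarity: {similarity:.3f}")
print("manual review needed" if needs_review else "no significant drift")
```

JS divergence is used here rather than raw KL divergence because it stays finite when a token appears in only one of the two samples. An alerting system could run this on a schedule and notify the team whenever `needs_review` is true.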

Using Latitude for Version Control

Latitude offers tools tailored for managing LLM version control workflows. Its integrated environment helps ensure consistency between prompts and datasets throughout the development process.

| Feature | Purpose | Advantage |
|---|---|---|
| Integrated Prompt Management | Tracks versions and history | Simplifies iterations |
| Experiment Tracking | Monitors performance | Supports informed decisions |
| API Integration | Automates workflows | Enables CI/CD integration |

With Latitude, teams can set up automated testing pipelines to validate prompt-dataset compatibility before deployment. Additionally, it tracks performance metrics across different combinations, helping teams make smarter, data-driven decisions during deployment.

Conclusion

Key Takeaways

Dataset version control plays a central role in modern AI development. In fact, 41% of data scientists and ML engineers now rely on specialized tools for versioning. These practices boost project outcomes by improving reproducibility, traceability, and team collaboration.

Version control is especially critical for managing LLM training data. Tasks like aligning prompts with datasets and monitoring drift require a structured approach to versioning.

How to Get Started

If you're working with LLMs and want to apply the strategies discussed, here’s how to implement version control effectively:

- Choose the Right Tools: Pick tools that meet the specific needs of your LLM workflows.

- Define Clear Protocols: Create guidelines for version naming, metadata documentation, and automated quality checks. Don’t forget to track prompt-dataset pairs, as explained in the Prompt and Dataset Synchronization section.

- Plan for Growth: Regularly review storage and infrastructure needs as your project scales.