Best Practices for Prompt Documentation

Effective prompt documentation improves the accuracy and consistency of AI outputs, supports collaboration, and reduces errors. This guide covers practical best practices for getting it right.

Prompt documentation helps AI systems deliver accurate and consistent results. It involves systematically recording the instructions given to large language models (LLMs), including task details, context, formatting rules, and version history. Here's why it matters and how to do it effectively:


Why It Matters:

- Reduces errors and ensures consistent outputs.

- Improves collaboration between engineers and subject matter experts.

- Helps track changes and refine prompts over time.


Key Elements:

- Clear Instructions: Use specific, task-focused language.

- Context and Examples: Provide clear parameters and sample outputs.

- Version Control: Track changes to maintain relevance.

- Tools: Platforms like Latitude simplify collaboration, versioning, and performance tracking.


Tools to Use:

- Version Control Systems: Keep track of prompt updates.

- Collaboration Platforms (e.g., Latitude): Enable teamwork and streamline documentation.

Quick Tip: Use templates to standardize prompt documentation and schedule regular reviews to ensure quality and accuracy. Tools like Latitude can automate testing and provide analytics for prompt performance.

Core Elements of Prompt Documentation

Creating clear and reliable prompt documentation involves focusing on key components that ensure it’s easy to understand, reuse, and maintain. Let’s break down the main elements that contribute to high-quality prompt documentation.

Crafting Clear Instructions

Instructions should be straightforward and leave no room for confusion:

- Specific Language: Use precise, task-focused instructions. For example, say “Explain the causes of World War II” instead of “Tell me about historical conflicts”.

- Positive Framing: Phrase instructions in a way that highlights desired outcomes. For instance, “Provide a concise summary” works better than “Don’t include too much detail”.
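
As a rough aid during reviews, the two guidelines above can be turned into a crude automated check. The sketch below is a hypothetical heuristic, not a standard tool: the `CHECKS` patterns and the `lint_instruction` name are illustrative assumptions, and simple keyword matching will miss some problems and flag some harmless wording.

```python
import re
from typing import List

# Hypothetical, deliberately crude patterns; tune them to your own style guide.
CHECKS = {
    "negative framing": [r"\bdon[’']?t\b", r"\bdo not\b", r"\bnever\b"],
    "vague request": [r"\btell me about\b", r"\bsome stuff\b", r"\betc\b"],
}


def lint_instruction(text: str) -> List[str]:
    """Return the names of the checks that an instruction trips, in CHECKS order."""
    lowered = text.lower()
    return [name for name, patterns in CHECKS.items()
            if any(re.search(pattern, lowered) for pattern in patterns)]


print(lint_instruction("Don’t include too much detail"))  # ['negative framing']
print(lint_instruction("Provide a concise summary"))      # []
```

A flagged instruction is a prompt for a human to rephrase it, not an automatic failure.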

Managing Versions and Updates

Keeping track of changes and updates is essential for maintaining usable documentation over time.

Version Control:

| Version Number | Change Log | Performance Metrics | Last Updated (Date, Owner) |
|---|---|---|---|
| v1.0, v1.1, v2.0 | Updated context parameters | Response accuracy rate | Date and owner |

With a versioning system in place, you can ensure that prompts remain consistent and relevant even as they evolve. Adding clear notes about changes and performance metrics helps teams stay aligned.
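
A minimal sketch of such a change log in Python, mirroring the columns of the version table. The class names, the `bump` helper, and the convention of minor bumps for small edits and major bumps for breaking changes are assumptions for illustration, not a format that any particular tool requires.

```python
from dataclasses import dataclass, field
from datetime import date
from typing import List, Optional


def bump(version: str, major: bool = False) -> str:
    """Return the next label: v1.1 -> v1.2 (minor) or v1.1 -> v2.0 (major)."""
    major_num, minor_num = (int(part) for part in version.lstrip("v").split("."))
    if major:
        return f"v{major_num + 1}.0"
    return f"v{major_num}.{minor_num + 1}"


@dataclass
class PromptVersion:
    version: str         # e.g. "v1.1"
    change_log: str      # what changed, e.g. "Updated context parameters"
    metric_name: str     # e.g. "response_accuracy"
    metric_value: float  # measured after the change
    updated_on: date
    owner: str


@dataclass
class PromptHistory:
    prompt_id: str
    versions: List[PromptVersion] = field(default_factory=list)

    def record(self, change_log: str, metric_name: str, metric_value: float,
               owner: str, major: bool = False,
               updated_on: Optional[date] = None) -> PromptVersion:
        """Append an entry, starting at v1.0 and bumping from the latest one."""
        if self.versions:
            version = bump(self.versions[-1].version, major)
        else:
            version = "v1.0"
        entry = PromptVersion(version, change_log, metric_name, metric_value,
                              updated_on or date.today(), owner)
        self.versions.append(entry)
        return entry
```

Recording the metric next to each change makes it easy to see which edit moved accuracy up or down.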

Adding Context and Examples

Providing context is key to ensuring prompts are used effectively. Include:

- Parameters and Formats: Define the scope, limitations, and expected output clearly. For example, “Create an audit report for the Department of Energy on the Beaver Valley Power Station”.

- Examples: Offer sample prompts and outcomes to show proper usage and set clear expectations.
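
One way to keep parameters and examples together is to store them as data and render the prompt from them. The sketch below assumes a plain-text layout with a constraints list followed by numbered input/output examples; `render_prompt` and the angle-bracket placeholders are hypothetical, so substitute real parameters and approved sample outputs.

```python
from typing import Dict, List, Tuple


def render_prompt(task: str, constraints: Dict[str, str],
                  examples: List[Tuple[str, str]]) -> str:
    """Combine the task, its explicit parameters, and sample input/output pairs."""
    lines = [f"Task: {task}", "", "Constraints:"]
    lines += [f"- {name}: {value}" for name, value in constraints.items()]
    for i, (sample_input, sample_output) in enumerate(examples, start=1):
        lines += ["", f"Example {i} input:", sample_input,
                  f"Example {i} output:", sample_output]
    return "\n".join(lines)


# Hypothetical constraint values and placeholder examples, for illustration only.
print(render_prompt(
    task="Create an audit report for the Department of Energy on the Beaver Valley Power Station",
    constraints={
        "Scope": "<which systems and time period the audit covers>",
        "Limitations": "<known gaps the report should state explicitly>",
        "Output": "<sections, length, and tone expected>",
    },
    examples=[("<a past audit request>", "<the approved report excerpt>")],
))
```

Because the documented parameters and the prompt sent to the model come from the same source, they cannot drift apart.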

Using tools like Latitude can help teams organize these elements efficiently, making it easier for engineers and domain experts to collaborate. By combining clear instructions, version tracking, and contextual examples, teams can build documentation that supports reliable and scalable LLM deployment.

Documentation Tools and Automation

Using the right tools is crucial for keeping documentation efficient and scalable. Modern tools help maintain quality and consistency while cutting down on manual work.

Tools for Documentation

Current documentation tools meet the demands of AI teams through three main categories:

- Version Control Systems: Keep track of prompt changes and history efficiently.

- Documentation Generators: Automatically extract and format documentation.

- Collaboration Platforms: Enable team coordination and streamlined reviews.

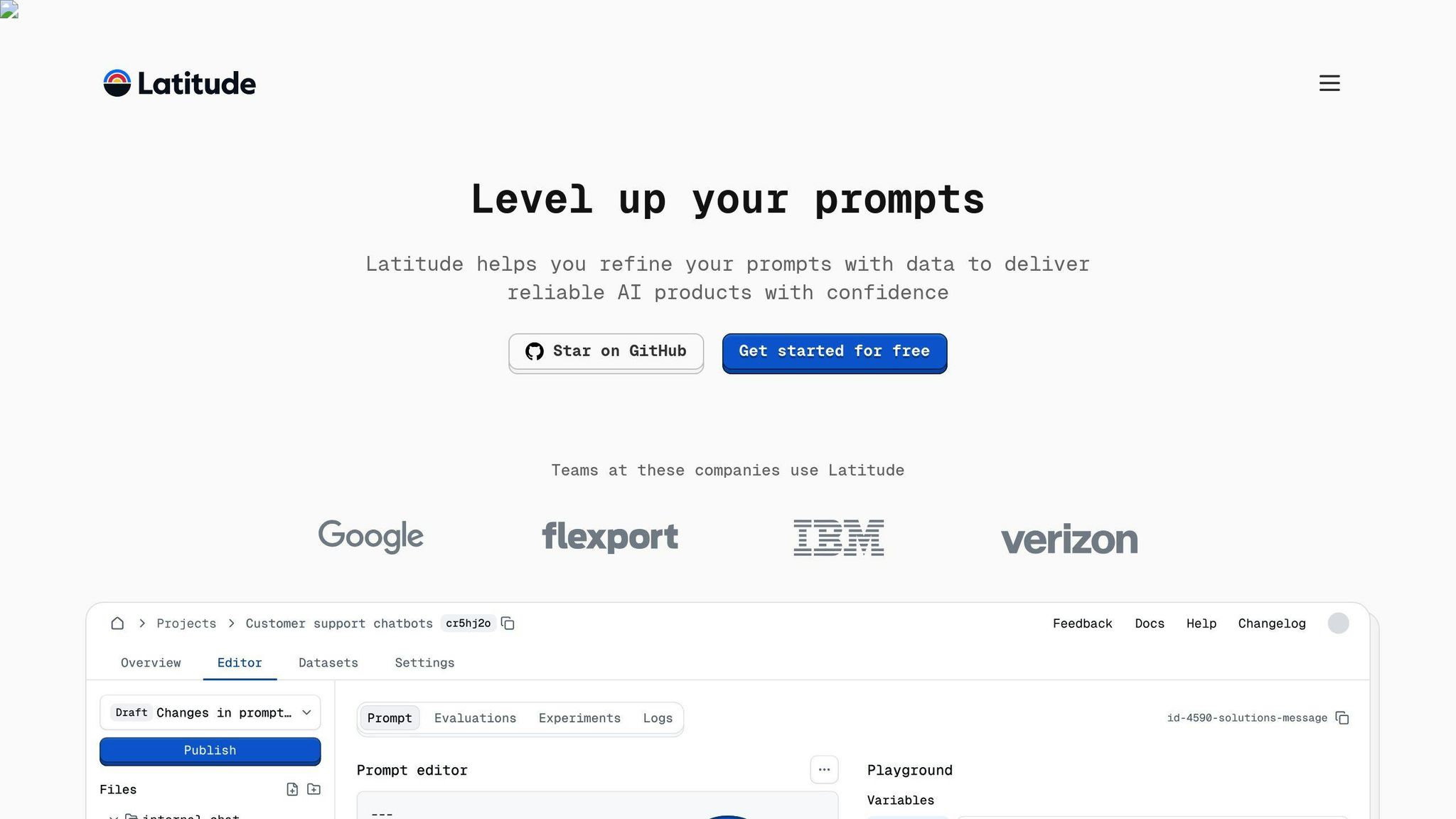

These tools simplify workflows and ensure consistent documentation standards. One standout option is Latitude, which includes features specifically designed for prompt engineering.

Features of the Latitude Platform

Latitude is designed to meet the unique needs of prompt engineering and documentation management. Here's what it offers:

| Feature Category | Functionality | Benefits |
|---|---|---|
| Collaboration Tools | Real-time editing and review | Makes teamwork between experts and engineers seamless. |
| Version Management | Automated tracking and history | Keeps a complete record of prompt changes without manual logging. |
| Context Management | Structured metadata and examples | Ensures prompts are applied consistently across teams. |

Since Latitude is open-source, teams can adapt it to their specific needs while maintaining high standards for production-grade LLM features.

Choosing the Right Tools

When selecting documentation tools, it's important to align your choice with your team's specific needs. Here's a quick guide:

| Tool Type | Best For | Key Considerations |
|---|---|---|
| Standalone Documentation Platforms | Teams managing large prompt libraries with multiple contributors | Ease of integration and cost. |
| Integrated Development Environments | Development teams focused on coding workflows | Developer experience and workflow support. |
| Collaborative Platforms (e.g., Latitude) | Teams needing both technical and business tools | Accessibility and technical balance. |

Key factors to evaluate include:

- Integration: How well the tool works with your current systems.

- Scalability: Whether it can handle a growing prompt library.

- Maintenance: The effort required to keep the tool updated.

- Collaboration: Features that support team communication and reviews.

For teams working on production-ready LLM features, platforms like Latitude offer a solid mix of technical and collaborative tools that benefit both engineers and domain experts.

Team Documentation Practices

Creating effective documentation for prompts depends on organized collaboration between technical teams and subject matter experts. With clear workflows and the right tools, teams can ensure high-quality output and better efficiency.

Documentation Templates

Using templates helps standardize documentation and minimize mistakes. A well-structured prompt documentation template typically includes:

| Component | Description |
|---|---|
| Prompt Context | Outlines the use case, goals, audience, and expected outcomes |
| Technical Details | Details input format, parameters, and model configurations |
| Version History | Logs update dates, changes made, and contributors |
| Performance Metrics | Tracks accuracy, response quality, and other key indicators |
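
A template is easiest to enforce when it is also machine-checkable. The sketch below mirrors the four components as fields of a hypothetical `PromptDoc` record and lists any section that is still empty, for example to hold a prompt back from review; the names and the "empty means missing" rule are assumptions.

```python
from dataclasses import dataclass, fields
from typing import List


@dataclass
class PromptDoc:
    """One documentation record per prompt, mirroring the template components."""
    prompt_context: str = ""       # use case, goals, audience, expected outcomes
    technical_details: str = ""    # input format, parameters, model configuration
    version_history: str = ""      # update dates, changes made, contributors
    performance_metrics: str = ""  # accuracy, response quality, other indicators


def missing_sections(doc: PromptDoc) -> List[str]:
    """List template sections that are empty or whitespace-only."""
    return [f.name for f in fields(doc) if not getattr(doc, f.name).strip()]


draft = PromptDoc(prompt_context="Support ticket triage for tier-1 agents")
print(missing_sections(draft))
# ['technical_details', 'version_history', 'performance_metrics']
```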

While templates are a great starting point, successful documentation also depends on strong teamwork and communication.

Cross-Team Communication

For smooth collaboration between domain experts and engineers, clear communication is essential. Here are some best practices:

- Set up regular sync meetings and keep documentation easily accessible for everyone.

- Clearly define roles and responsibilities for maintaining prompts.

- Build feedback loops to address issues and improve workflows.

When these practices are in place, tools like Latitude can further simplify collaboration.

Using Latitude for Teams

Latitude offers tools that make team workflows more efficient and transparent, including:

- Shared Workspaces: Work together in real-time on prompt development.

- Version Control: Keep track of changes and maintain a detailed history of prompt updates.

- Built-in Observability: Monitor how prompts perform and gather insights for improvements.

- Evaluation Tools: Test prompts in batch or real-time to ensure quality.

As an open-source platform, Latitude provides practical solutions for managing even the most complex prompt libraries. Its features make it a reliable choice for teams handling large-scale documentation efforts.

Documentation Upkeep and Updates

Keeping documentation current and accurate requires a structured plan. Regular reviews and tracking performance are key to addressing potential issues and ensuring prompt libraries remain useful and efficient.

Review Schedule and Process

Set up a clear review schedule to maintain documentation quality. Here's a suggested framework:

- Weekly security audits: Identify vulnerabilities or data exposure risks.

- Monthly performance reviews: Evaluate response quality and consistency.

- Quarterly content updates: Refresh examples and refine specifications.

- Bi-annual full audits: Conduct in-depth evaluations of the entire documentation.

Combining automated tools with manual checks helps maintain both security and reliability.
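
To keep the cadence from slipping, the schedule can be tracked in code. This is a minimal sketch: the review keys are hypothetical labels, "bi-annual" is read as twice a year (every six months), and `overdue` simply reports which reviews are due on or before a given day.

```python
import calendar
from datetime import date, timedelta
from typing import Dict, List

# Cadences from the suggested framework. Keys are hypothetical labels; the full
# audit is assumed to run twice a year (every six months).
SCHEDULE = {
    "security_audit": ("weeks", 1),
    "performance_review": ("months", 1),
    "content_update": ("months", 3),
    "full_audit": ("months", 6),
}


def add_months(d: date, months: int) -> date:
    """Shift a date by whole months, clamping to the last day of short months."""
    index = d.month - 1 + months
    year, month = d.year + index // 12, index % 12 + 1
    day = min(d.day, calendar.monthrange(year, month)[1])
    return date(year, month, day)


def next_due(last_done: date, review: str) -> date:
    unit, amount = SCHEDULE[review]
    if unit == "weeks":
        return last_done + timedelta(weeks=amount)
    return add_months(last_done, amount)


def overdue(last_done: Dict[str, date], today: date) -> List[str]:
    """Return the reviews whose next due date is today or earlier."""
    return sorted(r for r, done in last_done.items() if next_due(done, r) <= today)
```

Clamping in `add_months` keeps a review done on January 31 from landing on a nonexistent February date.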

Measuring Prompt Success

Evaluating prompts effectively means looking at both numbers and user feedback. Focus on these key metrics:

- Response accuracy and relevance

- User engagement and satisfaction

- System reliability and performance

- Cost efficiency and resource use

Tools like PromptLayer and LangSmith can simplify tracking these metrics, making it easier to assess the strengths and weaknesses of your prompts.
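
Whatever tool collects the data, the four metric categories reduce to simple aggregates over logged calls. The sketch below is tool-agnostic and is not the PromptLayer or LangSmith API; the `EvalRecord` fields (a correctness grade, an optional 1 to 5 user rating, a failure flag, and per-call cost) are assumed for illustration.

```python
from dataclasses import dataclass
from statistics import mean
from typing import Dict, List, Optional


@dataclass
class EvalRecord:
    correct: bool               # graded accurate and relevant (by a human or a checker)
    user_rating: Optional[int]  # 1-5 satisfaction score, None if the user gave none
    failed: bool                # the call errored or timed out
    cost_usd: float             # spend attributed to this call


def summarize(records: List[EvalRecord]) -> Dict[str, Optional[float]]:
    """Aggregate one metric per category: accuracy, satisfaction, reliability, cost."""
    n = len(records)
    ratings = [r.user_rating for r in records if r.user_rating is not None]
    return {
        "accuracy": sum(r.correct for r in records) / n,
        "avg_rating": mean(ratings) if ratings else None,
        "failure_rate": sum(r.failed for r in records) / n,
        "cost_per_call": sum(r.cost_usd for r in records) / n,
    }
```

Averaging ratings only over calls that received one avoids treating silence as a low score.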

Community Support Tools

Community-driven platforms can further improve documentation and foster collaboration. Latitude's platform, for instance, offers two standout features:

- Automated Testing: Validates prompts against historical datasets to catch regressions and ensure stable performance.

- Performance Analytics: Tracks trends and highlights areas for improvement.
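
The idea behind regression testing against historical data can be sketched without any platform. This is not Latitude's API: `baseline` and `candidate` are assumed to be callables that run the current and proposed prompt versions, `check` is whatever grading rule your team uses, and the 2% tolerance is an arbitrary placeholder.

```python
from typing import Callable, List, Tuple

Dataset = List[Tuple[str, str]]     # (input, expected output) pairs from past traffic
Check = Callable[[str, str], bool]  # (actual, expected) -> passed?


def pass_rate(generate: Callable[[str], str], dataset: Dataset, check: Check) -> float:
    return sum(check(generate(x), expected) for x, expected in dataset) / len(dataset)


def find_regressions(baseline: Callable[[str], str], candidate: Callable[[str], str],
                     dataset: Dataset, check: Check) -> List[str]:
    """Inputs the current prompt handled correctly but the new version gets wrong."""
    return [x for x, expected in dataset
            if check(baseline(x), expected) and not check(candidate(x), expected)]


def should_block(baseline_rate: float, candidate_rate: float, tolerance: float = 0.02) -> bool:
    """Block a release if the pass rate drops by more than `tolerance`."""
    return candidate_rate < baseline_rate - tolerance
```

Listing the specific inputs that regressed, not just the overall rate, gives reviewers concrete cases to inspect.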

"Regular evaluations of prompt performance aid in the early detection of possible problems", says Mehnoor Aijaz from Athina AI. "Including user feedback in the assessment procedure can provide important information about how well prompts function in practical situations."

For the best results, pair automated tools with regular human reviews. This approach ensures your documentation is both technically sound and user-friendly.

Summary and Next Steps

Creating effective prompt documentation involves combining clear guidelines, reliable tools, and consistent team practices. Here’s a breakdown of the key elements for successful implementation:

Documentation Foundation

A structured approach is crucial for maintaining consistent quality and manageable systems. The goal is to establish clear protocols that ensure technical precision while remaining practical and user-friendly.

Performance Metrics That Matter

Well-documented prompt engineering delivers measurable results. For example, systematic optimization has led to an 8.2% increase in test accuracy and improvements of up to 18.5% in logical deduction tasks. These numbers highlight the real-world benefits of prioritizing detailed documentation.

Implementation Framework

To build on these results, adopting a structured framework is essential. Here are three strategies to ensure consistent outcomes and scalability:

- Version Control Integration: Using dedicated repositories and file-based tracking can cut maintenance efforts by up to 30%, making it easier to manage prompt updates.

- Automation and Testing: Platforms like Latitude support ongoing quality assurance by enabling continuous validation and performance checks, complementing the templates and workflows described earlier.

- Team Collaboration: Defined workflows improve communication across teams, helping refine prompts and maintain consistent quality throughout the documentation process.
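
File-based tracking can be as simple as one JSON file per prompt version kept in a Git repository, so every change shows up as a reviewable diff. The sketch below assumes a hypothetical `prompts/<prompt_id>/<version>.json` layout and `vMAJOR.MINOR` file names; sorting uses numeric keys so that v1.10 correctly comes after v1.9.

```python
import json
from pathlib import Path
from typing import Tuple


def version_key(path: Path) -> Tuple[int, int]:
    """Sort v1.9 before v1.10 by comparing numbers, not strings."""
    major, minor = path.stem.lstrip("v").split(".")
    return int(major), int(minor)


def save_version(root: Path, prompt_id: str, version: str, text: str, notes: str) -> Path:
    """Write one file per version, e.g. prompts/support-triage/v1.2.json."""
    path = root / prompt_id / f"{version}.json"
    path.parent.mkdir(parents=True, exist_ok=True)
    path.write_text(json.dumps({"version": version, "text": text, "notes": notes}, indent=2))
    return path


def load_latest(root: Path, prompt_id: str) -> dict:
    files = sorted((root / prompt_id).glob("v*.json"), key=version_key)
    return json.loads(files[-1].read_text())
```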

Core Actions for Success

| Phase | Expected Outcome |
|---|---|
| Initial Setup | Standardized prompt management system |
| Tool Integration | Simplified quality assurance processes |
| Team Training | Uniform practices across the team |
| Monitoring | Data-driven improvements |

"Prompt engineering done right introduces predictability in the model's outputs and saves you the effort of having to iterate excessively on your prompts." - Mirascope Author

FAQs

What are the best practices for interacting with prompts?

This section focuses on common questions about how to craft and interact with prompts effectively, especially for documentation tasks.

Key Elements: A strong prompt should include:

- A clear definition of the task with specific requirements

- Relevant context and constraints

- Details on the desired output format

- Clear criteria for measuring success

How to Apply This: For complex tasks, a chain-of-thought approach, which asks the model to reason through the problem step by step, can break the work into manageable steps and improve accuracy and thoroughness. This helps cover all necessary details while keeping the output clear.

| Component | Example Implementation |
|---|---|
| Task Definition | "Document the password reset workflow in detail" |
| Context | "Target audience: senior developers using OAuth 2.0" |
| Output Format | "Include code snippets, JSON API responses, and errors" |
| Success Criteria | "Should address all edge cases and security considerations" |
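
The four components can be kept as structured fields and rendered into a single prompt. This sketch reuses the example values from the table; `PromptSpec`, its `render` method, and the exact wording of the optional step-by-step line are assumptions, not a prescribed format.

```python
from dataclasses import dataclass


@dataclass
class PromptSpec:
    task: str
    context: str
    output_format: str
    success_criteria: str
    step_by_step: bool = False  # optionally ask for a chain-of-thought style breakdown

    def render(self) -> str:
        parts = [
            f"Task: {self.task}",
            f"Context: {self.context}",
            f"Output format: {self.output_format}",
            f"Success criteria: {self.success_criteria}",
        ]
        if self.step_by_step:
            parts.append("Work through the workflow step by step before writing the final document.")
        return "\n".join(parts)


spec = PromptSpec(
    task="Document the password reset workflow in detail",
    context="Target audience: senior developers using OAuth 2.0",
    output_format="Include code snippets, JSON API responses, and errors",
    success_criteria="Should address all edge cases and security considerations",
    step_by_step=True,
)
print(spec.render())
```

Storing the fields separately lets reviewers see at a glance which component changed between versions.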

Tools and Validation: Platforms like Latitude can streamline this process by offering automated validation and collaboration features. These tools help refine prompts, ensure consistent quality, and support ongoing performance checks, aligning with established workflows and templates.