Best Tools for Domain-Specific LLM Benchmarking

Explore essential tools for evaluating domain-specific large language models, ensuring accuracy and reliability across industries like healthcare and finance.

Large Language Models (LLMs) often excel in general tasks but struggle in specialized fields like healthcare, finance, or legal services. To address this, domain-specific benchmarking tools are essential for evaluating model performance in real-world scenarios. Here’s a quick summary of the top tools covered:

- Latitude: Open-source, tailored for industries like banking and healthcare. Combines automated and human evaluations.

- Evidently AI: Tracks metrics like accuracy and safety with live dashboards. Generates synthetic data for testing.

- NeuralTrust: Focuses on security and factual consistency using Retrieval-Augmented Generation (RAG) techniques.

- Giskard: Identifies vulnerabilities like hallucinations and privacy issues. Includes a collaborative red-teaming feature.

- Llama-Index: Simplifies data integration for Retrieval-Augmented Generation (RAG) systems. Fully open-source.

Quick Comparison

| Tool | Key Features | Open-Source | Ideal For |

|---|---|---|---|

| Latitude | Human-in-the-loop, programmatic rules | Yes | Banking, healthcare, education |

| Evidently AI | Live dashboards, synthetic data | Yes | Healthcare, finance, legal |

| NeuralTrust | RAG for factual consistency | Yes | Security-focused industries |

| Giskard | Red-teaming, vulnerability detection | Yes | AI safety and compliance |

| Llama-Index | Data integration for RAG systems | Yes | Complex data management |

These tools help organizations tailor LLMs for their specific needs, ensuring accuracy, safety, and reliability in specialized domains.

1. Latitude

Latitude is an open-source platform designed to bring together domain experts and engineers for advancing AI and prompt engineering. This collaboration is key to building large language model (LLM) features that excel in specialized fields.

Supported Domains

Latitude is built to benchmark LLM performance across various industries where domain-specific precision is critical. It recognizes that these models need a deep grasp of context, such as product data, corporate policies, and industry-specific terminology. This makes it especially useful for sectors like banking, retail, pharmaceuticals, and education.

The platform allows teams to evaluate a range of domain-specific models. For instance, organizations in banking, healthcare, finance, legal, and environmental fields can use Latitude to test and refine their specialized models. This broad support integrates seamlessly with Latitude's advanced evaluation methods.

Evaluation Methodologies

Latitude offers a versatile framework for assessing LLMs, tailored to meet the diverse demands of different industries. Its evaluation methods include:

- LLM-as-Judge: This approach uses another language model to assess outputs for qualities like helpfulness, clarity, and adherence to domain-specific requirements. It is particularly effective for evaluating subjective aspects and nuanced responses.

- Programmatic Rules: Code-based validations ensure outputs meet objective standards. This method is ideal for domains needing strict compliance, format checks, safety validations, or comparisons against ground truth data.

- Human-in-the-Loop Evaluations: Experts review outputs to capture detailed insights, making this method valuable for initial quality checks and the development of high-quality datasets.

Metric Granularity

Latitude provides detailed evaluations for industries where precision is non-negotiable. Its analysis covers factors like semantic coherence, context sensitivity, and safety compliance. For example, GPT-4's accuracy drops to 47.9% on open-ended surgical questions, highlighting the importance of robust evaluation. Metrics are categorized into areas such as accuracy, reliability, bias, and domain-specific relevance. Performance variations are evident; while GPT-4 achieves 71.3% accuracy on surgical multiple-choice questions, its open-ended responses often falter, with 25% of errors in clinical Q&A stemming from factual inaccuracies.

Latitude stores evaluation results alongside logs, creating a valuable dataset for ongoing analysis and improvement. This supports both manual batch evaluations and live monitoring, ensuring organizations can maintain consistent performance as their domain-specific applications evolve.

Open-Source Status

As an open-source platform, Latitude provides transparency and flexibility, allowing organizations to adapt its framework to their specific needs. This collaborative platform encourages domain experts and engineers to share methodologies and benchmarking techniques, driving innovation in new applications. Organizations can modify Latitude without being tied to a specific vendor, ensuring their benchmarking processes grow alongside their LLM implementations and industry developments.

2. Evidently AI

Evidently AI is a platform built on the open-source Evidently tool, designed to evaluate, test, and monitor applications powered by large language models (LLMs). It offers a structured way to ensure these applications meet performance and quality standards.

Supported Domains

Evidently AI supports evaluations tailored to specific industries like healthcare, finance, and legal services. A standout feature is its ability to generate synthetic data, including adversarial inputs and edge cases. This is especially useful for testing LLMs in scenarios unique to a particular business or industry. By combining this domain-specific approach with its evaluation framework, the platform helps organizations address challenges specific to their fields.

Evaluation Methodologies

The platform employs a mix of rules, classifiers, and LLM-based evaluations to create quality systems that align with industry needs and performance goals.

It supports task-specific evaluations, allowing teams to customize tests to address risks like safety, toxicity, hallucinations, and data privacy issues. These evaluations also cover areas like answer relevancy and format compliance. With LLMs acting as scalable judges, Evidently AI reduces manual effort while ensuring consistent evaluation standards.

One of its strengths lies in offering end-to-end testing - from generating test cases to tracking performance. This comprehensive approach makes it easier for organizations to maintain high-quality standards without overwhelming their teams.

Metric Granularity

Evidently AI provides over 100 metrics to measure accuracy, safety, and quality. Automated evaluations and live dashboards help teams identify performance issues, even down to individual responses.

The platform’s live dashboard continuously tracks performance, enabling teams to detect issues like drift, regressions, or new risks as they arise. This level of detail is critical for organizations aiming to keep their models performing consistently over time.

"We use Evidently daily to test data quality and monitor production data drift. It takes away a lot of headache of building monitoring suites, so we can focus on how to react to monitoring results. Evidently is a very well-built and polished tool. It is like a Swiss army knife we use more often than expected." - Dayle Fernandes, MLOps Engineer, DeepL

Wise, a global financial company, has also benefited from Evidently’s detailed monitoring capabilities. Their team shared:

"At Wise, Evidently proved to be a great solution for monitoring data distribution in our production environment and linking model performance metrics directly to training data. Its wide range of functionality, user-friendly visualization, and detailed documentation make Evidently a flexible and effective tool for our work." - Iaroslav Polianskii, Senior Data Scientist, Wise; Egor Kraev, Head of AI, Wise

Open-Source Status

Evidently AI is built on an open-source foundation, fostering transparency and collaboration. With over 6,000 GitHub stars, 25 million downloads, and a community of more than 3,000 members, it has gained significant traction.

This open-source model allows organizations to adapt and extend the tool to meet their specific needs. The transparency it offers is especially valuable for regulated industries or those requiring detailed audit trails.

The platform is available as both an open-source library and Evidently Cloud, which builds on the same foundation. This dual offering gives organizations the flexibility to choose between a self-hosted solution or managed services, all while benefiting from the open-source community’s input and support.

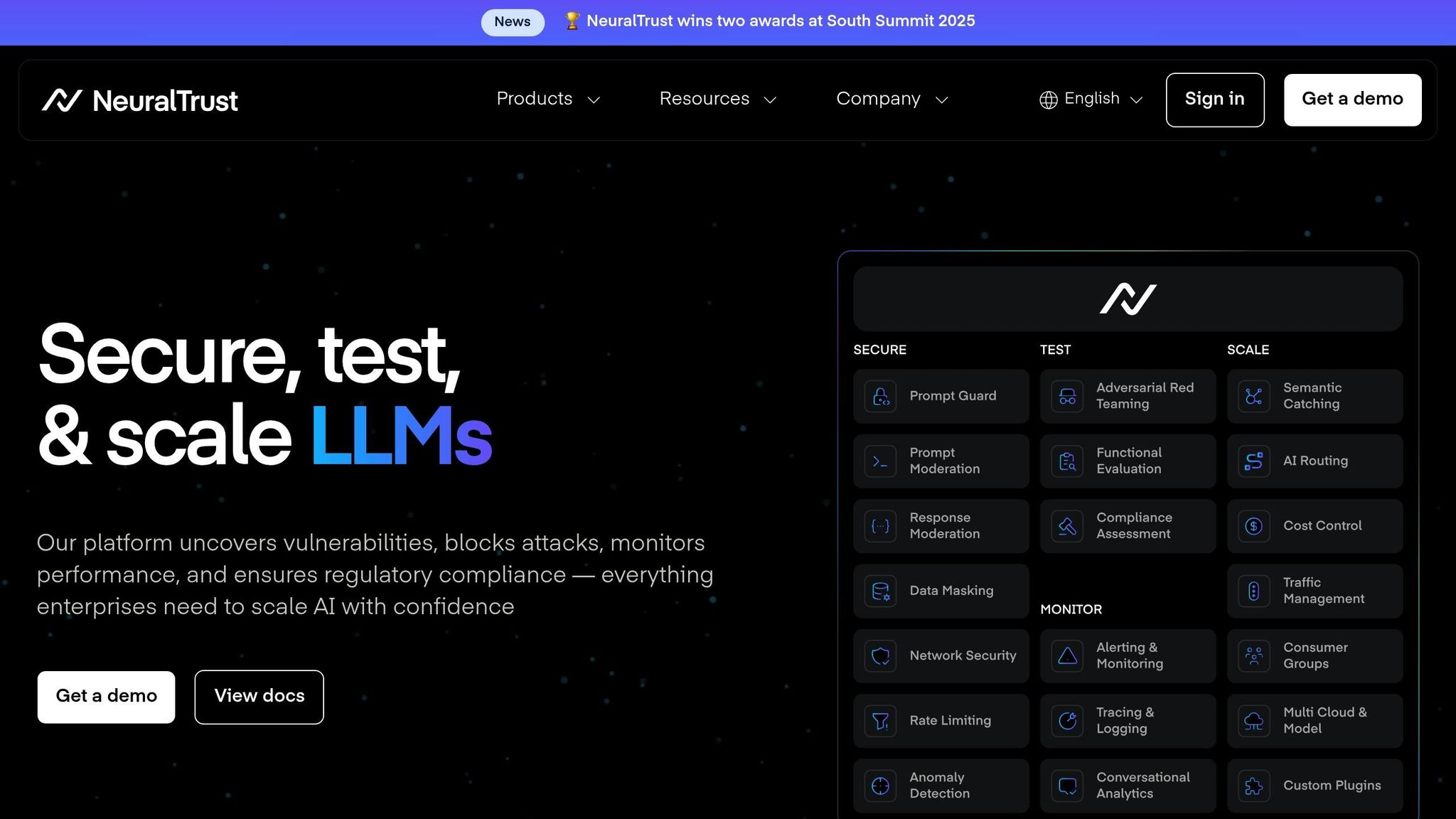

3. NeuralTrust

NeuralTrust stands out as a security-focused platform dedicated to evaluating domain-specific large language models (LLMs). By using automated and scalable methods, it aims to establish confidence in AI systems. At its core, NeuralTrust employs a range of evaluation tools, many of which leverage the LLM-as-a-judge technique. This method uses tailored instructions to guide LLMs in assessing the quality of responses.

Supported Domains

NeuralTrust enhances response accuracy through Retrieval-Augmented Generation (RAG) solutions. By grounding outputs in verified, domain-specific knowledge, it minimizes hallucinations and ensures factual consistency. A notable example is its application in the telecommunications sector, where it addressed specific security and performance challenges.

Evaluation Methodologies

The platform’s evaluation framework is built around the LLM-as-a-judge approach, comparing AI-generated responses to expected outcomes. It utilizes benchmark datasets such as Google’s Answer Equivalence dataset and proprietary customer datasets. These datasets include:

- Functional questions: To test information retrieval accuracy.

- Adversarial questions: To assess resilience against misleading or incorrect information.

NeuralTrust processes these datasets through a structured workflow: loading data, applying evaluators, normalizing results, and measuring accuracy by comparing outputs to ground truth. This systematic approach ensures clear and reliable performance metrics.

Metric Granularity

When tested, NeuralTrust’s correctness evaluator demonstrated impressive results: achieving 80% accuracy on a customer dataset and 86% accuracy on the Google Answer Equivalence dataset. Compared to other frameworks like Ragas, Giskard, and LlamaIndex, NeuralTrust consistently outperformed, showcasing its reliability and precision across varied benchmarks.

Open-Source Status

NeuralTrust offers its AI Gateway Community Edition under an open-source model. This version allows users to switch seamlessly between cloud providers, models, and applications while maintaining independent security and governance standards. Additionally, its TrustGate, a zero-trust, plugin-based AI Gateway, supports extensive customization for specific benchmarking requirements. By combining transparency with strong security measures, NeuralTrust provides a flexible and reliable platform for evaluating LLMs.

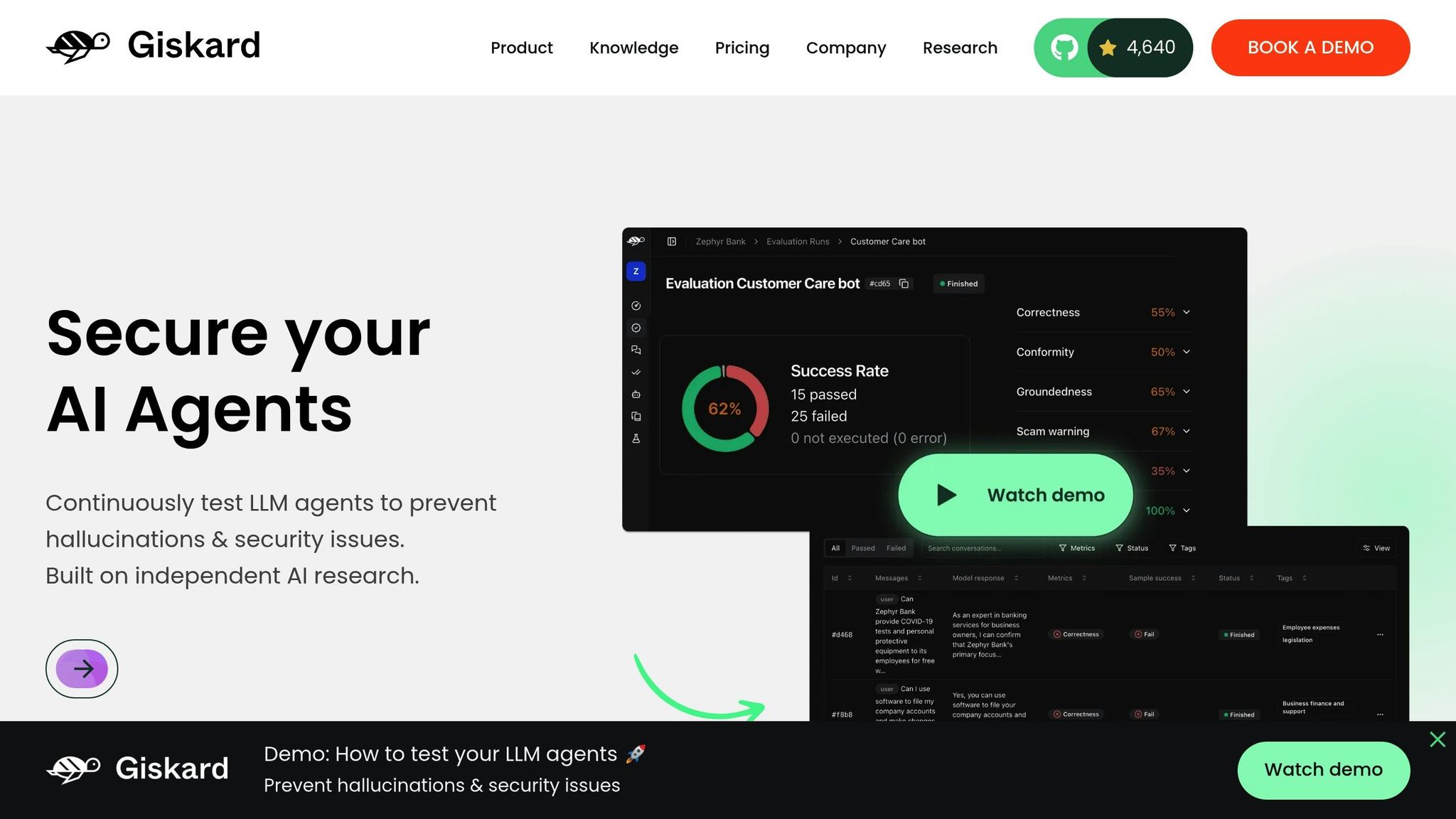

4. Giskard

Giskard is a testing platform designed to align the technical performance of AI systems with business needs. It’s built to be user-friendly for both domain experts and product managers, making it possible to evaluate AI systems without requiring deep technical skills.

Supported Domains

Giskard focuses on evaluating applications like chatbots, Q&A systems, and retrieval-augmented generation (RAG) models. It bridges business knowledge with AI testing by connecting business data directly to the evaluation process, allowing organizations to create detailed test scenarios.

What makes Giskard stand out is its ability to automatically spot hallucinations and security vulnerabilities tailored to specific business cases. Using LLM-assisted detectors, it identifies vulnerabilities unique to each use case. This is particularly important given that over 4,000 AI incidents have been reported in the past four years, with 90% of generative AI projects failing to reach production. Giskard’s domain-specific approach is woven into its robust evaluation framework.

Evaluation Methodologies

Giskard employs a multi-layered evaluation strategy, combining automated tests, custom test cases, and human validation. Its toolkit systematically assesses vulnerabilities in categories like bias, safety, privacy, and ethical concerns.

The platform’s RAG Evaluation Toolkit (RAGET) creates targeted, domain-specific questions to assess retrieval-augmented systems effectively. Additionally, Giskard’s Scan feature identifies hidden issues, such as hallucinations, harmful content, prompt injection, and data leaks.

"Giskard tests AI systems and detects critical vulnerabilities - such as hallucinations and security flaws - by leveraging both internal and external data sources for comprehensive test case generation." - Giskard Documentation

Giskard also incorporates an AI Red Team strategy, which involves human experts in designing threat models and crafting attack scenarios to uncover vulnerabilities.

Metric Granularity

The Giskard LLM Hub serves as a centralized repository for storing model versions, test cases, and datasets. This setup fosters collaboration between technical teams and business stakeholders.

The platform emphasizes proactive monitoring to catch AI vulnerabilities before deployment. As Giskard explains:

"At Giskard, we believe it's too late if issues are only discovered by users once the system is in production. That's why we focus on proactive monitoring, providing tools to detect AI vulnerabilities before they surface in real-world use." - Giskard

This proactive approach, combined with its flexible tools, supports both open-source and enterprise users.

Open-Source Status

Giskard offers an open-source Python library alongside its enterprise LLM Hub. The open-source version, which has earned over 4,600 stars on GitHub, is aimed at data scientists who want to test AI models in their development environments for free. This version can be customized and extended to suit specific needs.

The enterprise LLM Hub builds on this foundation, adding features such as a collaborative red-teaming playground, cybersecurity monitoring, an annotation studio, and advanced tools for detecting security vulnerabilities. This tiered system allows organizations to start with the open-source version and scale up to enterprise-level capabilities as their needs evolve.

Giskard ensures independence in designing benchmarks while welcoming input from the broader AI research community. Over time, the company plans to open-source representative samples of its benchmarking modules, enabling independent verification and private model testing.

5. Llama-Index

Llama-Index is an open-source data framework tailored for applications using Large Language Models (LLMs). It focuses on enhancing context through Retrieval-Augmented Generation (RAG) systems, simplifying the process of integrating domain-specific data for accurate model evaluation. This tool allows organizations to securely ingest, structure, and access private or specialized data, making it easier to incorporate into LLMs for more precise text generation.

Supported Domains

Llama-Index is designed to handle a wide variety of data types across multiple domains. With support for over 160 data formats - ranging from structured and semi-structured to unstructured data - it’s particularly useful for managing complex, domain-specific datasets. The framework includes data connectors that pull information from sources like APIs, PDFs, and SQL databases.

A key feature is its open-source library of data loaders, called LlamaHub, which makes integrating domain-specific content into benchmarking workflows straightforward. By transforming textual datasets into queryable indexes, Llama-Index enhances RAG applications and allows users to customize and extend modules to fit specific needs. This robust data handling lays the groundwork for thorough performance evaluations.

Evaluation Methodologies

Llama-Index offers a comprehensive evaluation framework to assess both retrieval and response quality. It uses LLM-based evaluation modules, leveraging a "gold" LLM (like GPT-4) to verify the accuracy of generated answers. Unlike traditional methods, it evaluates without relying on ground-truth data, instead using the query, context, and response.

The evaluation framework is divided into two main areas:

- Response Evaluation: Measures how well the generated response aligns with the retrieved context, query, reference answer, or set guidelines. Metrics include correctness, semantic similarity, faithfulness, answer relevance, and adherence to guidelines.

- Retrieval Evaluation: Assesses the relevance of retrieved sources to the query using ranking metrics such as mean-reciprocal rank (MRR), hit rate, and precision. Independent evaluation modules offer insights into the effectiveness of individual components.

To further enhance benchmarking, Llama-Index integrates with community tools like UpTrain, Tonic Validate, DeepEval, Ragas, RAGChecker, and Cleanlab.

Metric Granularity

Llama-Index provides detailed metrics to evaluate LLM performance across various dimensions. It tracks traditional metrics like accuracy, coherence, relevance, and task completion rates. For ranking, it uses metrics such as MRR, hit rate, and precision. In tasks like code generation, it evaluates accuracy, efficiency, and adherence to programming best practices. The framework also includes faithfulness evaluation to identify hallucinations, ensuring responses remain grounded in the retrieved context.

Open-Source Status

As a fully open-source framework, Llama-Index is available in both Python and TypeScript. Its open-source nature offers transparency, control, and adaptability, making it ideal for domain-specific benchmarking. The framework is built around four key components: data ingestion, data indexing, querying and retrieval, and postprocessing with response synthesis. This modular structure supports continuous innovation and efficient RAG applications while maintaining a focus on semantic similarity for data retrieval.

Pros and Cons

When evaluating tools for LLM benchmarking, each option comes with its own set of strengths and weaknesses, catering to different technical and business needs. Here's a breakdown of how these tools compare:

| Tool | Pros | Cons |

|---|---|---|

| Latitude | Open-source platform that fosters strong collaboration between domain experts and engineers; supports production-grade LLM development; backed by community resources. | Requires technical know-how for setup and maintenance; may need extra integrations for thorough benchmarking. |

| Evidently AI | Simplifies evaluation workflows with a user-friendly interface; integrates seamlessly into existing systems. | May lack flexibility for highly specialized domains and could become costly when scaling operations. |

| NeuralTrust | Prioritizes safety, reliability, and ethical evaluations, essential for building trustworthy AI systems. | Complex setup and higher costs may make it less ideal for simpler benchmarking needs. |

| Giskard | Works well with MLOps pipelines, enabling automated quality assurance throughout workflows. | Resource-intensive, requiring significant computational power, and less adaptable for niche metrics. |

| Llama-Index | Fully open-source, offering high flexibility and customization for detailed benchmarking. | Steeper learning curve and demands advanced technical expertise for optimal use. |

While this table offers a quick overview, there are deeper practical factors that influence which tool might be the best fit. Balancing speed, quality, and cost is crucial, as Oleh Pylypchuk, Chief Technology Officer and Co-Founder at BotsCrew, points out:

"When selecting an LLM for your business, it's essential to balance speed, quality, and cost. The market offers both large models with a high number of parameters and smaller, more lightweight models."

Open-source solutions like Latitude and Llama-Index provide extensive customization options, making them ideal for teams with strong technical expertise. On the other hand, commercial platforms streamline integration through APIs and SDKs, offering ease of use at a higher price point. For example, tools like Latitude encourage collaboration between domain experts and engineers, ensuring specialized knowledge is factored into the evaluation process. Meanwhile, other platforms might need additional workflow management to achieve similar results.

Scalability is another crucial factor. Some tools handle large datasets and concurrent evaluations seamlessly, while others may struggle with resource-intensive tasks. For teams focused on production-ready LLM implementations, these considerations become essential in meeting domain-specific performance needs.

"Begin with simple prompt engineering, then advance to fine-tuning as needed."

Conclusion

Choosing the right benchmarking tool for evaluating domain-specific LLMs means aligning it with your technical needs, scalability goals, and integration processes. The core takeaway? Evaluating and monitoring LLMs is critical to maintaining reliability, efficiency, and ethical practices across industries. Your tool should fit seamlessly into your workflows and address your unique requirements.

Open-source options like Latitude provide flexibility through customization and encourage collaboration between domain experts and engineers. This collaborative approach promotes ongoing improvements and shared learning across diverse applications. Regular evaluation remains essential to keep up with changing industry expectations.

For successful benchmarking, leverage evaluation platforms to stay ahead of new solutions and industry advancements. Ensure the tool you choose can adapt and evolve with your business through updates and enhancements. A thoughtful, rigorous approach to evaluation lays the groundwork for systems that perform reliably and ethically in practical, real-world applications.

FAQs

What makes domain-specific LLM benchmarking tools different from general evaluation methods?

Domain-specific LLM benchmarking tools are designed to measure how a language model performs within a particular field or industry. They use specialized metrics and datasets tailored to that domain. On the other hand, general evaluation methods rely on broad, standardized benchmarks that assess a model's abilities across a wide variety of tasks.

By focusing on specific needs and detailed tasks, domain-specific tools offer a clearer picture of how well a model works for targeted applications. This approach highlights strengths and weaknesses that broader benchmarks might overlook, ensuring the model meets the unique demands of its intended purpose.

What should you consider when selecting a domain-specific LLM benchmarking tool for industries like healthcare or finance?

Choosing a Domain-Specific LLM Benchmarking Tool

When selecting a benchmarking tool for industries like healthcare or finance, a few critical aspects should guide your decision:

- Safety and Risk Assessment: In fields where precision is non-negotiable, such as healthcare, the tool must evaluate how well the model follows safety protocols and reduces risks in clinical or operational scenarios.

- Industry-Specific Expertise: The tool should gauge the model's ability to navigate the unique terminology, regulations, and intricacies of the industry it’s designed for.

- Ethical Alignment: It’s crucial to determine if the tool ensures the model adheres to ethical standards and responsible use, particularly in areas like patient care or financial decision-making.

Focusing on these factors helps guarantee that the benchmarking tool delivers dependable insights into the model's safety and performance in specialized environments.

Why is it important for LLM benchmarking tools to be open-source, and how can organizations benefit from it?

Why Open-Source LLM Benchmarking Tools Matter

Open-source tools for benchmarking large language models (LLMs) play a crucial role in the AI landscape because they bring clarity and adaptability to the table. With open access, organizations can carefully examine these tools, ensuring they meet ethical standards, build trust, and align with unique operational needs. This level of openness helps establish confidence in both the technology itself and its practical uses.

Another major advantage is the ability to tailor these tools and save on costs. Open-source tools allow organizations to modify them for specific industries or use cases, all while benefiting from the collective input and updates provided by a global community of contributors. This shared effort not only speeds up advancements but also gives organizations greater control over their AI systems, making benchmarking more dependable and easier to scale.