Dynamic LLM Routing: Tools and Frameworks

Explore dynamic LLM routing tools that optimize costs and enhance efficiency by matching queries with the right language models.

Dynamic LLM routing helps organizations save costs and improve efficiency by directing queries to the most suitable language model based on complexity and performance needs. High-performance models like GPT-4 handle complex tasks, while simpler queries go to cost-effective models like Llama-2. This approach can cut costs by up to 75% while maintaining quality.

Top tools for dynamic LLM routing:

- Latitude: Open-source platform for query routing, cost management, and seamless integration into workflows.

- RouteLLM: Python-based framework offering flexible routing strategies like preference-based and threshold-based routing.

- PickLLM: Uses reinforcement learning to balance cost, latency, and accuracy for routing queries.

- MasRouter: Designed for multi-agent systems, optimizing collaboration and model selection.

- Amazon Bedrock Intelligent Prompt Routing: AWS service automating model selection with pay-per-use pricing.

Each tool offers unique features for managing costs, performance, and scalability. Choose based on your team's technical expertise and infrastructure needs.

1. Latitude

Latitude is an open-source platform designed for AI and prompt engineering, helping domain experts and engineers create features for large language models (LLMs) with integrated routing. Let’s break down its key capabilities, including routing, cost management, integration, and deployment.

Routing Strategies

Latitude’s routing engine is built to handle queries smartly. It evaluates factors like query complexity, intent, and domain-specific needs to decide the best course of action. Simpler queries are sent to cost-efficient models, while more complex tasks are directed to high-performance LLMs. The platform also allows domain experts to define routing rules, while engineers handle the infrastructure, ensuring flexibility and precision.

Cost Management

One of Latitude’s standout features is its ability to manage costs effectively. By routing straightforward queries to less expensive models and reserving premium models for more demanding tasks, it strikes a balance between affordability and quality. Additionally, its self-hosted deployment option eliminates ongoing SaaS fees, giving teams greater control over their budgets.

Integration and Extensibility

Latitude is built to fit seamlessly into existing workflows. It offers SDKs, APIs, and a command-line interface (CLI) for easy integration. Teams can deploy prompts as API endpoints and connect Latitude with more than 2,800 applications or any MCP server, opening up a wide range of possibilities for use.

Deployment and Usability

Latitude supports enterprise-level, self-hosted deployments, offering extensive customization options for technical users. At the same time, it provides an intuitive interface for team members who may not have a technical background. This makes it a versatile choice for both AI newcomers and those seeking advanced customization options.

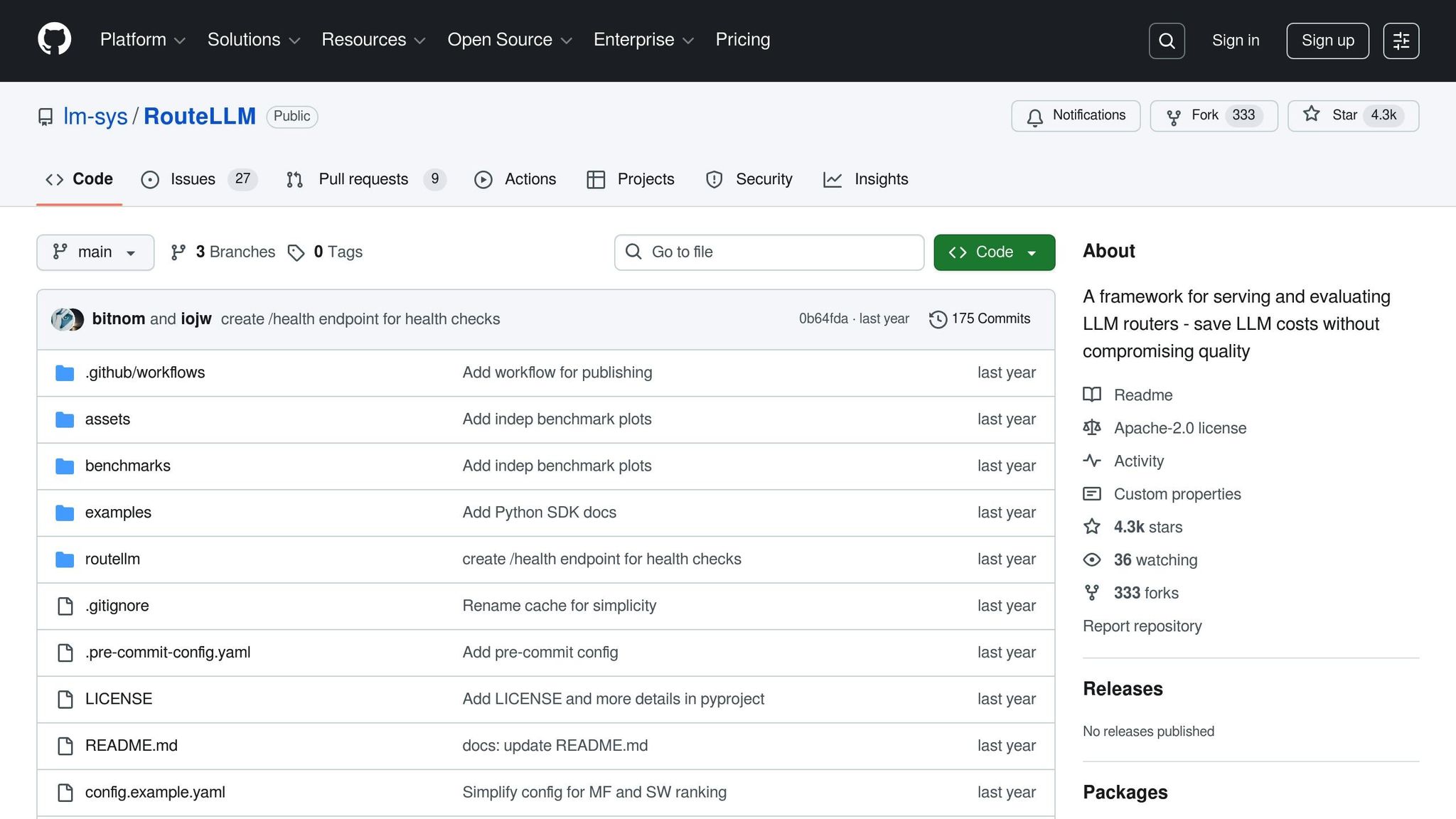

2. RouteLLM

RouteLLM is an open-source framework that dynamically selects the most suitable large language model (LLM) for each query, based on the query's complexity and performance requirements.

Routing Strategies

RouteLLM uses several methods to ensure queries are directed to the right model:

- Preference-based routing: Learns from human evaluations to improve model selection.

- Similarity-based ranking: Matches the patterns of queries to the strengths of specific models.

- Threshold-based routing: Assigns simpler queries to faster, less resource-intensive models, while directing more complex ones to high-capability models.

These strategies ensure efficient query handling without compromising quality, no matter the task.
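As a rough illustration of the threshold-based strategy, here is a minimal Python sketch. It does not use RouteLLM's own API: the `ThresholdRouter` class, the `toy_complexity` scorer, and the model names are all hypothetical stand-ins, and a real router would replace the toy scorer with a learned one.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass(frozen=True)
class ThresholdRouter:
    """Send a query to the strong model only when its score clears a threshold."""

    score: Callable[[str], float]  # hypothetical scorer: 0.0 (easy) to 1.0 (hard)
    threshold: float
    strong_model: str
    weak_model: str

    def route(self, query: str) -> str:
        if self.score(query) >= self.threshold:
            return self.strong_model
        return self.weak_model


REASONING_WORDS = {"prove", "derive", "analyze", "compare", "debug"}


def toy_complexity(query: str) -> float:
    """Toy stand-in for a learned scorer: long, reasoning-heavy queries score higher."""
    words = [w.strip(".,?!") for w in query.lower().split()]
    length_part = min(len(words) / 50.0, 1.0)
    keyword_part = 1.0 if any(w in REASONING_WORDS for w in words) else 0.0
    return 0.7 * length_part + 0.3 * keyword_part


router = ThresholdRouter(
    score=toy_complexity,
    threshold=0.25,
    strong_model="strong-model",  # placeholder names, not real model IDs
    weak_model="weak-model",
)

print(router.route("What is the capital of France?"))
print(router.route("Prove that the sum of two even integers is even and derive a general rule."))
```

Raising the threshold sends more traffic to the cheaper model, so it acts as a single knob for trading cost against quality.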

Cost Efficiency

One of RouteLLM's key strengths is its ability to optimize model selection, which helps manage operational costs. By allocating resources appropriately for each query, it balances performance with expense control, making it a practical choice for production environments.

Extensibility

Built on Python, RouteLLM offers flexibility for developers. It allows for custom routing logic tailored to specific needs and integrates seamlessly with various LLM providers. For organizations prioritizing privacy and control, RouteLLM also supports local model deployment.

Production Readiness

RouteLLM is designed with production environments in mind. It includes monitoring and logging tools to track routing decisions and model performance. The framework also handles load balancing and failover scenarios, ensuring uninterrupted service even if a primary model becomes unavailable. While configuring the framework requires technical expertise, its robust features make it a strong contender when comparing dynamic LLM routing tools.

3. PickLLM

PickLLM is a system designed to make smart, cost-conscious decisions when routing queries to large language models (LLMs). It uses reinforcement learning to choose the most suitable LLM for each query, complementing other dynamic LLM routing tools.

Routing Strategies

PickLLM offers two learning algorithms for choosing among the available LLMs: a learning automaton trained with gradient ascent and epsilon-greedy Q-learning. Each one explores the options and updates its choice using a weighted reward function that combines three factors - cost, latency, and accuracy - so the weights can be tuned to specific business needs. The system adapts over a session and can eventually settle on a single LLM for the queries within that session. This helps manage costs while leaving room for different performance goals.
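To make the epsilon-greedy idea concrete, here is a minimal, stateless Q-learning sketch in Python. It is not PickLLM's code: the reward formula, its weights, the `EpsilonGreedyPicker` class, and the three model profiles are assumptions chosen only for illustration.

```python
import random


def reward(accuracy: float, cost: float, latency: float,
           w_cost: float = 0.3, w_latency: float = 0.2) -> float:
    """Assumed reward shape: accuracy minus weighted cost and latency penalties."""
    return accuracy - w_cost * cost - w_latency * latency


class EpsilonGreedyPicker:
    """Keeps one Q-value per model and picks greedily, exploring with probability epsilon."""

    def __init__(self, models, epsilon=0.1, lr=0.2, seed=0):
        self.q = {m: 0.0 for m in models}
        self.epsilon = epsilon
        self.lr = lr
        self.rng = random.Random(seed)

    def pick(self) -> str:
        if self.rng.random() < self.epsilon:
            return self.rng.choice(list(self.q))  # explore
        return max(self.q, key=self.q.get)        # exploit

    def update(self, model: str, r: float) -> None:
        # Move the estimate a fraction lr toward the observed reward.
        self.q[model] += self.lr * (r - self.q[model])


# Hypothetical profiles: (accuracy, normalized cost, normalized latency).
PROFILES = {
    "small": (0.60, 0.1, 0.1),
    "medium": (0.80, 0.3, 0.3),
    "large": (0.90, 1.0, 0.8),
}

picker = EpsilonGreedyPicker(PROFILES, epsilon=0.1, lr=0.2, seed=0)
for _ in range(2000):
    model = picker.pick()
    picker.update(model, reward(*PROFILES[model]))

best = max(picker.q, key=picker.q.get)
print(best, {m: round(v, 2) for m, v in picker.q.items()})
```

With these made-up profiles, the mid-sized model has the highest reward, so the picker should end up preferring it. Changing `w_cost` or `w_latency` shifts which model wins, which is the point of a weighted reward.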

Cost Efficiency

One of PickLLM's standout features is that it factors the cost of each query into its reward. As a result, it favors less expensive models whenever they deliver comparable accuracy and reserves more advanced models for workloads that genuinely need them. This keeps a practical balance between performance and cost.

Extensibility

As a lightweight, pre-generation routing framework, PickLLM is ideal for environments where reducing computational overhead is a priority. Its design allows for easy integration into existing workflows without requiring significant infrastructure changes. Additionally, developers can customize its objectives and scoring functions to align with specific use cases and performance needs.

Production Readiness

PickLLM's streamlined design and adaptability make it a plausible fit for production settings, though it originated as a research project. The work was accepted at the first SEAS Workshop at AAAI 2025 in Philadelphia, USA.

4. MasRouter

MasRouter is designed to streamline Multi-Agent Systems (MAS) by leveraging large language models in a smarter, more cost-effective way. It tackles the high costs often associated with running complex multi-agent workflows by introducing a unified routing system. This system makes intelligent decisions about collaboration strategies, role assignments, and model selection.

Routing Strategies

At its core, MasRouter uses a three-layer decision architecture to handle the complexities of multi-agent environments. This structure brings together three essential components into a single routing framework: collaboration mode determination, role allocation, and LLM routing.

- Collaboration Mode Determination: The process begins by predicting the best collaboration strategy for each query. Whether it’s chains, trees, or more intricate setups, the system uses a statistical model to pick the optimal approach. These decisions are based on a latent representation that captures the semantic connections between the input query and potential collaboration patterns.

- Role Allocation: MasRouter assigns roles through a structured probabilistic cascade. Each role is influenced by the roles already assigned, with probabilities calculated based on complexities derived from publicly available data.

- LLM Routing: Model selection is treated as a multinomial distribution problem. MasRouter evaluates the suitability of each large language model by analyzing query characteristics and assigned roles. It learns from past executions to refine its decisions. Reinforcement learning, specifically a policy gradient approach, is used to optimize these routing mechanisms. This allows the system to weigh the trade-offs between utility and cost, ensuring smarter, more efficient decisions.

Altogether, this framework seamlessly integrates collaboration and model selection, improving both performance and cost management.
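The three-step cascade described above can be sketched in Python as a sequence of categorical draws. This is only an illustration under stated assumptions: the fixed probability tables, the role and model names, and the `route` function are hypothetical, whereas MasRouter learns these distributions with reinforcement learning, which is not shown here.

```python
import random

# Hypothetical options and weights; a trained router would predict these per query.
MODES = {"chain": 0.5, "tree": 0.3, "graph": 0.2}
ROLES = ["planner", "coder", "reviewer"]
MODEL_NAMES = ("small-model", "large-model")


def sample(dist: dict, rng: random.Random) -> str:
    """Draw one key from a categorical distribution given as {option: weight}."""
    options = list(dist)
    return rng.choices(options, weights=[dist[o] for o in options], k=1)[0]


def role_dist(assigned: list) -> dict:
    """Cascade step: each draw depends on earlier roles (repeats are down-weighted)."""
    return {r: (0.2 if r in assigned else 1.0) for r in ROLES}


def model_dist(query: str, role: str) -> dict:
    """Favor the large model for long queries or for the planner role."""
    hard = len(query.split()) > 20 or role == "planner"
    if hard:
        return {"small-model": 0.3, "large-model": 0.7}
    return {"small-model": 0.8, "large-model": 0.2}


def route(query: str, n_agents: int = 3, seed: int = 0) -> dict:
    rng = random.Random(seed)
    mode = sample(MODES, rng)                         # 1. collaboration mode
    roles = []
    for _ in range(n_agents):                         # 2. roles, one at a time
        roles.append(sample(role_dist(roles), rng))
    agents = [(role, sample(model_dist(query, role), rng)) for role in roles]  # 3. LLM per role
    return {"mode": mode, "agents": agents}


print(route("Refactor this module and write unit tests for it."))
```

Each stage conditions on the output of the previous one, which is what makes the controller a cascade instead of three independent choices.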

Cost Efficiency

MasRouter's dynamic routing design is crafted to reduce operational costs, which are often a major concern in Multi-Agent Systems relying on large language models. By unifying all MAS components into a single routing system, it achieves a balance between effectiveness and efficiency.

Instead of deploying multiple expensive models simultaneously, MasRouter uses a cascaded controller network to make real-time decisions. It determines when and how to use different LLMs based on the specific needs of each query and the roles required to complete the task. This targeted approach minimizes unnecessary expenses while maintaining performance.

Production Readiness

MasRouter takes on routing challenges with a formalized Multi-Agent System Routing (MASR) model, making it stand out from other frameworks like MetaGPT, which relies on static delegation, or DynTaskMAS, which uses runtime graph scheduling.

The system is built to adapt to changing workloads and conditions, maintaining a careful balance between precision and efficiency. Its reinforcement learning-based training ensures continuous improvement, making MasRouter a strong candidate for production environments where scalability and efficiency are critical.

5. Amazon Bedrock Intelligent Prompt Routing

Amazon Bedrock Intelligent Prompt Routing, a feature of Amazon Bedrock, simplifies large language model (LLM) workflows by dynamically choosing the best foundation model for each incoming prompt. It evaluates factors like the complexity of the prompt, the type of content, and specific performance needs to make these decisions.

How It Works

The routing system analyzes each query and matches it with the most suitable model. Automating this step removes the need for manual model selection while keeping a balance between performance and cost, so businesses can get more out of their LLM workflows without overspending.
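In practice, a prompt router is typically addressed by passing its ARN where a model ID would normally go. The Python sketch below assumes the Bedrock Converse API via `boto3` and an account with a prompt router already available. The ARN is a placeholder, the `build_request` and `ask` helpers are hypothetical, and the `trace.promptRouter.invokedModelId` field should be checked against the current AWS documentation before relying on it.

```python
from typing import Any, Optional, Tuple

# Placeholder ARN: replace with a prompt router ARN from your own AWS account.
ROUTER_ARN = "arn:aws:bedrock:us-east-1:111122223333:default-prompt-router/anthropic.claude:1"


def build_request(prompt: str, router_arn: str = ROUTER_ARN) -> dict:
    """Build Converse arguments, using the router ARN in place of a model ID."""
    return {
        "modelId": router_arn,
        "messages": [{"role": "user", "content": [{"text": prompt}]}],
    }


def ask(client: Any, prompt: str, router_arn: str = ROUTER_ARN) -> Tuple[str, Optional[str]]:
    """Send one prompt and return (reply text, model the router chose, if reported)."""
    response = client.converse(**build_request(prompt, router_arn))
    text = response["output"]["message"]["content"][0]["text"]
    invoked = response.get("trace", {}).get("promptRouter", {}).get("invokedModelId")
    return text, invoked


# Usage with real AWS credentials (not executed here):
#   import boto3
#   client = boto3.client("bedrock-runtime", region_name="us-east-1")
#   reply, chosen_model = ask(client, "Summarize the benefits of prompt routing.")
#   print(chosen_model, reply)
```

Logging the chosen model alongside each reply is a simple way to see how traffic is being split between models.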

Cost Efficiency Meets Performance

With its pay-per-use pricing, Amazon Bedrock Intelligent Prompt Routing ensures that businesses only pay for what they use, helping to control costs while maintaining high-quality outcomes. This approach aligns perfectly with the need for cost-effective solutions in enterprise-level operations.

Ready for Enterprise-Scale Operations

Designed for large-scale deployments, this service integrates with the rest of AWS's infrastructure. It works with standard tools for monitoring, logging, and security, such as Amazon CloudWatch and AWS IAM, giving enterprises the reliability and control they need.

In short, Amazon Bedrock Intelligent Prompt Routing offers a smarter, more efficient way to manage LLM workflows within the AWS ecosystem.

Advantages and Disadvantages

Dynamic LLM routing tools come with the challenge of balancing flexibility against technical complexity and the need for consistent upkeep. Let's take a closer look at Latitude's key strengths and hurdles, as outlined in the tool review above.

Latitude stands out for its open-source and collaborative design, which offers a high level of transparency and adaptability. Since it’s free from licensing fees, it’s a cost-effective choice. However, deploying it for production requires a solid foundation of internal expertise and technical know-how.

Here’s a quick summary of Latitude's advantages and considerations:

| Tool | Approach | Cost Efficiency | Customization | Production Considerations | Best For |
|---|---|---|---|---|---|
| Latitude | Collaborative, open-source routing | High (no licensing fees) | High | Requires internal expertise and setup | Teams working on collaborative prompt engineering |

Choosing the right routing tool ultimately hinges on your team’s technical skills and operational priorities.

Conclusion

Dynamic LLM routing solutions are designed to address a variety of organizational needs and technical challenges. Latitude's open-source framework stands out by bringing together domain experts and engineers to create production-ready LLM features. Its customizable routing capabilities can also help manage costs effectively. This discussion lays the groundwork for evaluating how each tool uniquely contributes to different operational goals.

For teams in the U.S., choosing the right tool often hinges on technical expertise and operational priorities. Organizations with strong internal development resources may find Latitude particularly appealing due to its focus on collaborative prompt engineering, which is ideal for managing complex and evolving AI workflows.

On the other hand, RouteLLM and PickLLM are well-suited for teams looking for specialized routing solutions without requiring significant customization. For those needing advanced multi-agent coordination, MasRouter could be an excellent choice. Meanwhile, Amazon Bedrock Intelligent Prompt Routing is a compelling option for enterprises already integrated into the AWS ecosystem, offering managed services for quick deployment.

The key to success lies in aligning the tool with your team's current skill set and long-term goals. Teams that value collaboration and flexibility might gravitate toward Latitude's platform, while those seeking faster implementation may prefer managed solutions, with the option to explore more customizable frameworks as they grow.

FAQs

How does dynamic LLM routing help reduce costs without compromising query quality?

Dynamic LLM routing offers a smart way to manage costs by directing queries to the most appropriate models based on their complexity. Straightforward queries are handled by smaller, less expensive models, while more intricate ones are sent to larger, more advanced models. This method ensures resources are used efficiently and can slash operational costs by up to 75%, all without compromising on result quality.

This targeted method allows organizations to strike the perfect balance between performance and cost, making it a crucial strategy for building scalable and efficient LLM workflows.
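A quick back-of-the-envelope calculation shows where a figure like 75% can come from. The prices and the traffic split below are hypothetical, and the sketch assumes every query uses the same number of tokens.

```python
def blended_savings(cheap_share: float, cheap_price: float, strong_price: float) -> float:
    """Fraction saved versus sending every query to the strong model."""
    blended = cheap_share * cheap_price + (1.0 - cheap_share) * strong_price
    return 1.0 - blended / strong_price


# Hypothetical prices per million tokens, not quotes from any provider.
savings = blended_savings(cheap_share=0.8, cheap_price=0.625, strong_price=10.0)
print(f"{savings:.0%}")  # prints "75%"
```

In this example, 80% of queries go to a model that costs one sixteenth as much, so the blended price falls to a quarter of the strong model's price. Actual savings depend on how many queries can safely be routed to the cheaper model.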

What should organizations look for in a dynamic LLM routing tool?

When choosing a dynamic LLM routing tool, focus on how well it can balance response quality, cost-efficiency, and speed. The ideal tool should provide access to updated model profiles and offer customizable routing criteria, ensuring it meets your specific performance needs.

It's also essential to assess whether the tool can process real-time metrics, manage resources efficiently, and scale alongside your organization's growth. A tool that offers flexibility will help streamline workflows and get the most out of your LLM setup.

How does reinforcement learning improve model selection in tools like PickLLM?

Reinforcement learning plays a key role in improving model selection for tools like PickLLM by making decisions that adapt to the context of each query. This means it can dynamically choose the best model for a specific task, boosting both efficiency and accuracy.

Over time, the system learns the most effective routing strategies by analyzing feedback, which minimizes the need for manual adjustments. By simplifying the selection process, reinforcement learning enables smarter, real-time decisions to handle a wide range of queries seamlessly.