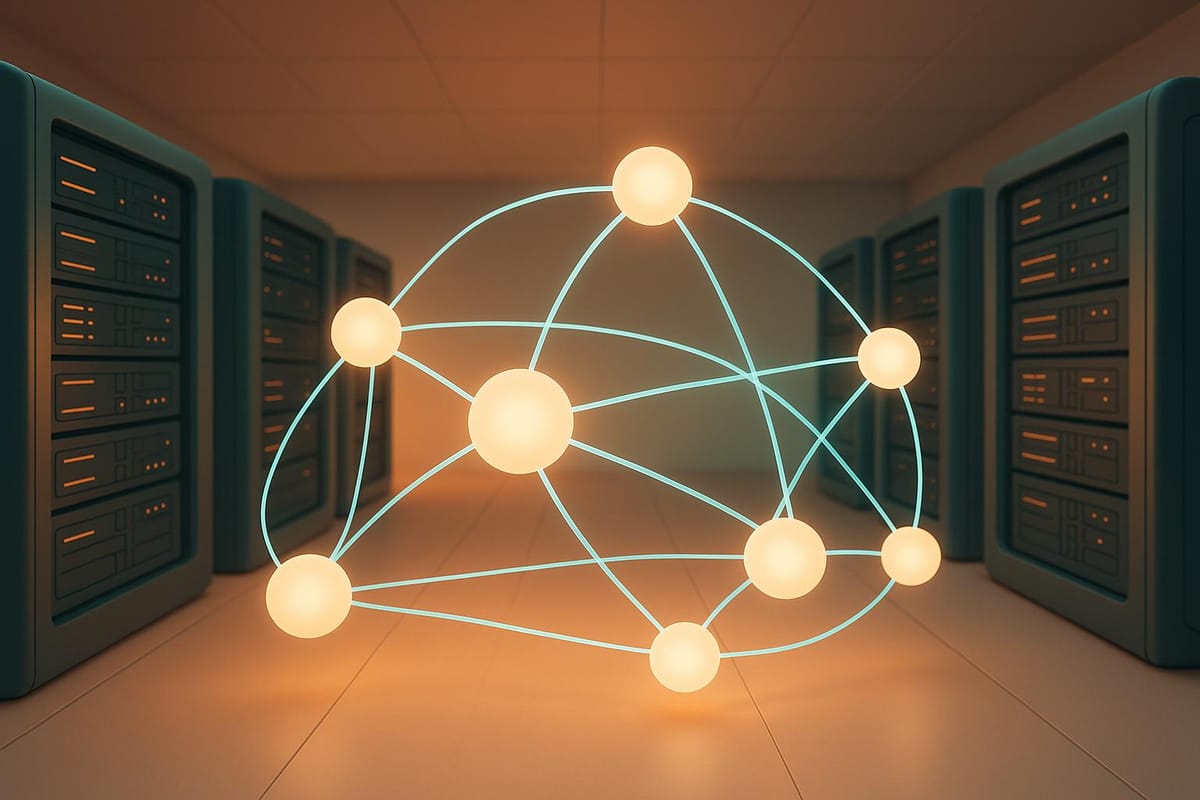

Dynamic Load Balancing for Multi-Tenant LLMs

Explore how dynamic load balancing optimizes resource allocation in multi-tenant large language model systems, covering the challenges these systems face and the strategies that address them.

Dynamic load balancing is key to efficiently managing large language models (LLMs) in multi-tenant systems, where multiple users or applications share the same infrastructure and each expects fair, efficient access to resources. Unlike static methods, dynamic load balancing adjusts in real time to workload demands, such as varying query complexity and resource needs.

Here’s why it’s important:

- LLM workloads vary greatly - a simple query might take milliseconds, while complex tasks like analyzing long documents demand significant GPU power and time.

- Static methods fail - techniques like round-robin can’t handle this variability, leading to bottlenecks and uneven performance.

- Dynamic strategies solve this - by monitoring metrics like GPU usage and latency, resources are allocated intelligently, ensuring smooth operation for all users.

Key methods include weighted round-robin, latency-based routing, cost-based routing, adaptive model selection, and queue-based balancing. Tools like Latitude help teams implement these strategies by providing a platform for prompt optimization, resource management, and collaborative development.

To succeed, focus on:

- Real-time metrics for smarter decisions

- Predictive scaling to anticipate demand

- Robust architecture with clear tenant isolation

Dynamic load balancing ensures reliable, efficient, and scalable LLM performance for all tenants.

Core Challenges in Dynamic Load Balancing for Multi-Tenant LLMs

Handling dynamic load balancing in multi-tenant environments for large language models (LLMs) is no small feat. These systems face unique hurdles due to the demanding nature of LLM workloads and the complexities of serving multiple tenants at the same time.

Resource Contention and Bottlenecks

One of the biggest challenges in multi-tenant LLM systems is resource contention. Traditional web applications tend to have fairly predictable resource usage, but LLM workloads can vary dramatically in their demands for GPU memory and compute power.

A key issue here is GPU memory fragmentation. When multiple tenants share the same hardware, repeatedly loading and unloading different model weights can fragment the available GPU memory and reduce how much of it is usable. On top of that, a single tenant submitting a heavy request can delay responses for others. Switching between model variants also adds overhead: loading a new model into GPU memory takes time, during which that capacity is temporarily unavailable.

Variability in Latency and Prompt Complexity

The unpredictable nature of prompt complexity adds another layer of difficulty for load balancing. While simple prompts can be processed quickly, more intricate tasks - such as creative or analytical queries - take significantly longer, making it harder to predict resource needs.

Token generation variability further complicates the picture. Tasks that require longer outputs can monopolize resources for extended periods, creating bottlenecks. Batch processing can help optimize performance for similar tasks, but when requests vary widely, its benefits diminish. These factors make it clear that dynamic load balancing strategies must be highly adaptive.

Scalability and Service Reliability

Dynamic load balancing must also address the challenges of scaling and maintaining reliable service in LLM systems. These models are resource-intensive, requiring substantial GPU power and long model loading times, which complicates rapid scaling efforts.

Balancing different service level agreements (SLAs) adds yet another challenge. Some tenants may expect near-instant responses and continuous uptime, while others might tolerate occasional delays. Meeting these varied demands requires advanced resource allocation and scheduling techniques.

Failover mechanisms introduce additional hurdles. Transferring large model weights and rebuilding inference caches during failover events can cause noticeable delays. Moreover, the high cost of GPU resources demands careful management to ensure performance standards are met without unnecessary spending. Traditional monitoring tools often fall short in capturing the nuanced performance metrics of LLM systems, highlighting the need for specialized observability tools tailored to these environments.

Dynamic Load Balancing Strategies for Multi-Tenant LLMs

Multi-tenant LLM systems have to cope with variable resource demands and unpredictable latency. The load balancing strategies outlined below address these problems in different ways, and the best choice depends on your use case, tenant needs, and infrastructure.

Key Load Balancing Methods

Weighted round-robin is a straightforward yet effective method for distributing workloads. It assigns each server a weight based on its capacity, such as GPU memory and processing power, and dynamic variants adjust those weights as the current load changes. For example, a server with 32GB of GPU memory might receive twice as many requests as a server with 16GB, while real-time utilization monitoring lowers a server's weight if it starts to fall behind.
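
The sketch below shows one common way to implement this, the smooth weighted round-robin algorithm used by nginx, in Python. The `Backend` class, the `set_weight` hook for dynamic adjustment, and the example weights (2 for a 32GB server, 1 for a 16GB server) are illustrative assumptions, not part of any particular load balancer's API.

```python
from dataclasses import dataclass


@dataclass
class Backend:
    name: str
    weight: int       # capacity weight, e.g. proportional to GPU memory (assumed)
    current: int = 0  # running score used by the smooth weighted round-robin algorithm


class SmoothWeightedRoundRobin:
    """Smooth weighted round-robin: interleaves picks instead of sending bursts."""

    def __init__(self, backends: list[Backend]) -> None:
        self.backends = backends

    def set_weight(self, name: str, weight: int) -> None:
        # Hypothetical hook for dynamic adjustment, e.g. lower a weight
        # when monitoring shows a server's GPU utilization climbing.
        for b in self.backends:
            if b.name == name:
                b.weight = weight

    def pick(self) -> str:
        eligible = [b for b in self.backends if b.weight > 0]
        if not eligible:
            raise RuntimeError("no backend has a positive weight")
        total = sum(b.weight for b in eligible)
        for b in eligible:
            b.current += b.weight          # every backend earns its weight
        chosen = max(eligible, key=lambda b: b.current)
        chosen.current -= total            # the winner pays back the total
        return chosen.name


lb = SmoothWeightedRoundRobin([Backend("gpu-32gb", 2), Backend("gpu-16gb", 1)])
print([lb.pick() for _ in range(6)])
# ['gpu-32gb', 'gpu-16gb', 'gpu-32gb', 'gpu-32gb', 'gpu-16gb', 'gpu-32gb']
```

Interleaving the picks, rather than sending two requests in a row to the larger server, keeps short-term load on each server closer to its share.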

Latency-based routing focuses on speed, directing requests to the server with the fastest response times. By continuously measuring response times across endpoints, this method ensures that new requests are routed to the quickest option. It’s particularly useful for tenants with strict performance expectations or SLA requirements.
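
A minimal sketch of latency-based routing, assuming response times are reported back to the router after each request. It keeps an exponentially weighted moving average (EWMA) per server and picks the lowest; the class name, the `alpha` value, and the server names are illustrative.

```python
from typing import Optional


class LatencyRouter:
    """Routes each request to the server with the lowest smoothed response time."""

    def __init__(self, servers: list[str], alpha: float = 0.2) -> None:
        self.alpha = alpha  # weight of the newest sample: higher reacts faster but is noisier
        self.ewma: dict[str, Optional[float]] = {s: None for s in servers}

    def record(self, server: str, latency_ms: float) -> None:
        prev = self.ewma[server]
        if prev is None:
            self.ewma[server] = latency_ms  # first sample seeds the average
        else:
            self.ewma[server] = self.alpha * latency_ms + (1 - self.alpha) * prev

    def pick(self) -> str:
        # Send traffic to servers with no measurements yet so each gets a baseline.
        for server, value in self.ewma.items():
            if value is None:
                return server
        return min(self.ewma, key=lambda s: self.ewma[s])


router = LatencyRouter(["us-east-gpu", "us-west-gpu"])
router.record("us-east-gpu", 420.0)
router.record("us-west-gpu", 380.0)
print(router.pick())  # us-west-gpu
```

Always choosing the single fastest server is what creates this strategy's overloading risk; a common mitigation is to compare two randomly chosen servers and take the faster one instead of the global minimum.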

Cost-based routing takes financial considerations into account. This method routes low-priority tasks to cost-effective resources while reserving high-performance resources for premium SLA tenants. It can also shift workloads to more economical options when performance demands allow, balancing cost and efficiency.
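
One way to express this is to give each SLA tier a latency budget and choose the cheapest resource pool that fits within it. In the sketch below, the tier names, budgets, pool names, and prices are made-up placeholders; real values would come from your provider's pricing and your own latency measurements.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Pool:
    name: str
    cost_per_1k_tokens: float  # hypothetical price in USD
    p95_latency_ms: float      # observed from monitoring


# Hypothetical SLA tiers mapped to latency budgets.
LATENCY_BUDGET_MS = {"premium": 800, "standard": 3000, "batch": float("inf")}


def route_by_cost(pools: list[Pool], tier: str) -> str:
    budget = LATENCY_BUDGET_MS[tier]
    # Keep only pools fast enough for the tenant's SLA...
    eligible = [p for p in pools if p.p95_latency_ms <= budget]
    if not eligible:
        # ...and if none qualify, favor performance over savings.
        return min(pools, key=lambda p: p.p95_latency_ms).name
    # ...then pick the cheapest of those.
    return min(eligible, key=lambda p: p.cost_per_1k_tokens).name


pools = [
    Pool("h100-dedicated", 0.90, 400),
    Pool("a10-shared", 0.30, 1500),
    Pool("spot-batch", 0.10, 5000),
]
for tier in ("premium", "standard", "batch"):
    print(tier, "->", route_by_cost(pools, tier))
```

With these numbers, premium traffic stays on the dedicated pool, standard traffic moves to the shared pool, and batch work lands on the cheapest capacity.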

Adaptive model selection optimizes resource use by matching queries to the appropriate model size. Simple queries are routed to smaller, faster models, while complex tasks go to larger models. This dynamic adjustment ensures that resources are used effectively, especially when availability is tight.
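
A very simple version of adaptive model selection uses a heuristic complexity score to choose between two model tiers. The scoring rule, the 0.5 threshold, the keyword list, and the `small-model`/`large-model` names are placeholders; production systems often replace the heuristic with a trained classifier.

```python
import re

# Hypothetical keyword cues that suggest an analytical or multi-step task.
COMPLEX_CUES = re.compile(r"\b(analy[sz]e|compare|prove|summari[sz]e|step by step)\b", re.IGNORECASE)


def estimate_complexity(prompt: str) -> float:
    """Crude heuristic score: longer prompts and analytical cues score higher."""
    score = min(len(prompt.split()) / 500, 1.0)  # length component saturates at 500 words
    if COMPLEX_CUES.search(prompt):
        score += 0.5
    return score


def select_model(prompt: str, large_model_available: bool = True, threshold: float = 0.5) -> str:
    if estimate_complexity(prompt) >= threshold and large_model_available:
        return "large-model"
    # Simple prompts, or any prompt when large-model capacity is exhausted,
    # fall back to the smaller, faster model.
    return "small-model"


print(select_model("What is the capital of France?"))       # small-model
print(select_model("Analyze the risks in this contract."))  # large-model
```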

Queue-based load balancing is ideal for handling traffic spikes. Incoming requests are queued and ordered by priority and estimated processing time. High-priority tenants are served first, while similar requests are batched together to improve throughput.
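
The sketch below combines the two ideas: a priority queue keyed on tenant tier, with arrival order as a tie-breaker, and a batching step that groups queued requests for the same model. The tier names, token budget, and batch size are assumed values, and using a per-request token estimate as a proxy for processing time is a simplification.

```python
import heapq
import itertools

# Hypothetical tenant tiers; a lower number is served first.
TIER_PRIORITY = {"premium": 0, "standard": 1, "free": 2}


class PriorityBatchQueue:
    def __init__(self) -> None:
        self._heap = []                # entries: (priority, seq, model, prompt, est_tokens)
        self._seq = itertools.count()  # tie-breaker keeps FIFO order within a tier

    def submit(self, tier: str, model: str, prompt: str, est_tokens: int) -> None:
        entry = (TIER_PRIORITY[tier], next(self._seq), model, prompt, est_tokens)
        heapq.heappush(self._heap, entry)

    def next_batch(self, max_batch: int = 4, max_tokens: int = 4096):
        """Pop the highest-priority request, then add queued requests for the same
        model, in priority order, until the batch size or token budget is reached."""
        if not self._heap:
            return None, []
        _, _, model, prompt, tokens = heapq.heappop(self._heap)
        batch, deferred = [prompt], []
        while self._heap and len(batch) < max_batch:
            entry = heapq.heappop(self._heap)
            if entry[2] == model and tokens + entry[4] <= max_tokens:
                batch.append(entry[3])
                tokens += entry[4]
            else:
                deferred.append(entry)  # different model or over budget: keep waiting
        for entry in deferred:
            heapq.heappush(self._heap, entry)  # original priority and order are preserved
        return model, batch


q = PriorityBatchQueue()
q.submit("free", "small-model", "p1", 100)
q.submit("premium", "large-model", "p2", 100)
q.submit("standard", "large-model", "p3", 100)
print(q.next_batch())  # ('large-model', ['p2', 'p3'])
```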

Comparison of Strategies

| Strategy | Best Use Cases | Strengths | Limitations |
|---|---|---|---|
| Weighted Round-Robin | Mixed workloads with varying resource capacity | Easy to implement, predictable | Doesn't account for request complexity |
| Latency-Based Routing | SLA-driven or performance-sensitive workloads | Optimizes for speed, adapts dynamically | Risk of overloading certain servers |
| Cost-Based Routing | Budget-conscious or mixed-priority environments | Reduces costs, flexible resource usage | May trade performance for savings |
| Adaptive Model Selection | Diverse query types, limited resources | Efficient resource use, quality control | Complex to set up |
| Queue-Based Balancing | High-volume or batch processing environments | Handles spikes, improves batching | Can introduce delays |

These strategies form the backbone of effective load balancing. When combined with auto-scaling, they can further enhance performance under fluctuating conditions.

Auto-Scaling and Cloud-Native Integration

Routing strategies are just part of the equation. Scaling techniques are equally critical for optimizing resource use in LLM systems.

Horizontal pod autoscaling is a common approach but requires careful planning for LLMs. Loading large models into GPU memory can take several minutes, so scaling decisions need to anticipate demand rather than react to it.

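Vertical scaling adds GPU memory or compute to a workload, for example by moving it to a larger GPU instance or assigning it more GPUs. It can avoid some of the overhead of coordinating new replicas, but it is bounded by the largest machine available and, because a GPU cannot be resized in place, usually still involves restarting the model server. It works best when paired with horizontal scaling.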

Predictive scaling is crucial for managing costs and maintaining performance. By analyzing usage patterns and tenant behavior, this approach pre-scales resources ahead of demand spikes. This minimizes cold starts, which can significantly disrupt user experience.
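
As a minimal illustration, the sketch below forecasts the next hour's request rate from the same hour on previous days (a seasonal-naive forecast, chosen here for simplicity rather than taken from any specific product) and converts it into a replica count with headroom. The per-replica throughput and the 20% headroom are assumptions you would measure or tune for your own models.

```python
import math
from statistics import mean


def forecast_next_hour(hourly_rps: list[float], season: int = 24) -> float:
    """Seasonal-naive forecast: average the same hour of day over previous days.
    Falls back to the latest observation when less than a day of history exists."""
    same_hour = hourly_rps[-season::-season]  # values 1, 2, ... days before the next hour
    return mean(same_hour) if same_hour else hourly_rps[-1]


def replicas_needed(forecast_rps: float, rps_per_replica: float,
                    headroom: float = 0.2, min_replicas: int = 1) -> int:
    # Headroom absorbs forecast error. The decision is made ahead of time
    # because a new replica may need minutes to load model weights.
    return max(min_replicas, math.ceil(forecast_rps * (1 + headroom) / rps_per_replica))


history = [40.0] * 24 + [60.0] * 24        # two days of hourly request rates
forecast = forecast_next_hour(history)     # 50.0
print(replicas_needed(forecast, rps_per_replica=8.0))  # 8
```

Because the replica count is computed an hour ahead, replicas that take several minutes to load can be ready before the spike arrives.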

Multi-zone deployment spreads the load across various availability zones, ensuring low latency while maintaining failover options. This requires careful planning to address data residency rules and manage network latency between zones.

Container orchestration platforms like Kubernetes need special configurations for LLM workloads. GPU requirements must be declared in resource requests and limits. Pod disruption budgets should cap how many model-serving pods can be evicted at once, termination grace periods should leave enough time to drain in-flight requests and unload models gracefully, and custom health checks should verify that the model is loaded and serving, not just that the container is running.

Finally, integrating service mesh technologies adds advanced traffic management capabilities like circuit breakers, retries, and timeouts. These tools also provide detailed observability, offering insights into request flows and system performance.

Architecture Patterns and Best Practices

Building an effective multi-tenant LLM system hinges on creating architectures that balance performance, scalability, and resource efficiency. The right design choices can mean the difference between a system that falters under pressure and one that handles heavy traffic with ease.

Reference Architectures

Centralized AI Gateway Architecture is a widely used setup for multi-tenant LLM systems. It relies on a single entry point to manage authentication, routing, and load balancing across various model instances. This gateway enforces tenant-specific configurations and provides a unified API. Positioned behind a content delivery network (CDN), it typically includes components like a request classifier, tenant manager, and model orchestrator to ensure smooth load distribution and optimal performance.

Edge-Serving Architecture focuses on reducing latency by deploying model instances closer to end users. In this approach, smaller, specialized models handle simpler tasks at edge locations, while more complex queries are routed to full-scale models in centralized data centers. Decisions on where to send requests are based on factors like geographic location and the complexity of the query, ensuring efficient resource utilization.
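
The routing decision can be sketched as a function of the user's region and a complexity score. Both the 0.3 cutoff and the assumption that complexity arrives as a precomputed 0-1 score from an upstream classifier are illustrative; the site names are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Site:
    name: str
    region: str
    is_edge: bool  # True for small edge deployments, False for central data centers


def choose_site(user_region: str, complexity: float, sites: list[Site],
                edge_max_complexity: float = 0.3) -> str:
    """complexity is assumed to be a 0-1 score from an upstream request classifier."""
    if complexity <= edge_max_complexity:
        local_edges = [s for s in sites if s.is_edge and s.region == user_region]
        if local_edges:
            return local_edges[0].name  # simple query and a nearby edge model exists
    central = [s for s in sites if not s.is_edge]
    if not central:
        raise RuntimeError("no central data center configured")
    # Prefer a central data center in the user's region to limit network latency.
    same_region = [s for s in central if s.region == user_region]
    return (same_region or central)[0].name


sites = [Site("edge-eu", "eu", True), Site("edge-us", "us", True), Site("central-us", "us", False)]
print(choose_site("eu", 0.1, sites))  # edge-eu
print(choose_site("eu", 0.8, sites))  # central-us
```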

Microservices-Based Architecture breaks the system into independent services, each capable of scaling separately. Key services might include model serving, prompt preprocessing, response post-processing, and analytics collection. This modular approach is particularly useful when different teams manage specific components or when data isolation is required for compliance.

These architectural patterns provide the foundation for managing workloads effectively, but the right practices are essential to ensure the system performs reliably.

Best Practices for Load Balancing

Health Check Implementation is a must for maintaining system reliability. Health checks should verify that models are loaded, responsive, and have adequate resources. This can involve sending lightweight prompts periodically to measure response times and monitor resource usage. By tracking model-specific metrics, load balancers can gradually reduce traffic to struggling instances instead of removing them abruptly.
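
A minimal sketch of such a health check, assuming a client function that sends a short prompt to a specific model instance. The `send_prompt` callable, the 2-second latency threshold, and the 0.25 weight step are hypothetical; the returned weight could feed a weighted load balancer.

```python
import time
from typing import Callable


def make_probe(send_prompt: Callable[[str, str], object],
               prompt: str = "Reply with OK.") -> Callable[[str], float]:
    """Wrap a client call into a probe that returns latency in seconds.
    send_prompt(instance, prompt) is a hypothetical client function that raises on failure."""
    def probe(instance: str) -> float:
        start = time.perf_counter()
        send_prompt(instance, prompt)
        return time.perf_counter() - start
    return probe


class HealthTracker:
    """Adjusts each instance's traffic weight gradually based on probe results."""

    def __init__(self, probe: Callable[[str], float],
                 latency_slo_s: float = 2.0, step: float = 0.25) -> None:
        self.probe = probe
        self.latency_slo_s = latency_slo_s
        self.step = step
        self.weights: dict[str, float] = {}

    def check(self, instance: str) -> float:
        weight = self.weights.get(instance, 1.0)
        try:
            healthy = self.probe(instance) <= self.latency_slo_s
        except Exception:
            healthy = False  # model not loaded, out of memory, timeout, etc.
        # Step the weight up or down instead of dropping the instance outright.
        if healthy:
            weight = min(1.0, weight + self.step)
        else:
            weight = max(0.0, weight - self.step)
        self.weights[instance] = weight
        return weight
```

An instance that keeps failing reaches weight 0 after a few checks, and one that recovers regains traffic step by step rather than all at once.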

Caching Strategies are key to reducing computational demands and speeding up responses. For example:

- Response caching stores answers for frequently asked questions or common prompt patterns.

- Semantic caching identifies and reuses responses for similar prompts that request the same information.

- Preprocessing caches save tokenized inputs and embeddings, cutting down on repeated computations for shared tasks across tenants.
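
Response caching, the simplest of the three, can be sketched as an exact-match LRU cache with a time-to-live. This version scopes keys by tenant so one tenant's cached answers are never served to another, and it normalizes case and whitespace before hashing; both are design assumptions (lowercasing, for example, is not safe for case-sensitive prompts). Semantic caching would replace the exact key with an embedding similarity lookup.

```python
import hashlib
import time
from collections import OrderedDict
from typing import Callable, Optional


class ResponseCache:
    def __init__(self, max_entries: int = 10_000, ttl_s: float = 300.0,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.max_entries = max_entries
        self.ttl_s = ttl_s
        self.clock = clock
        self._data = OrderedDict()  # key -> (response, expires_at)

    @staticmethod
    def _key(tenant_id: str, model: str, prompt: str) -> str:
        normalized = " ".join(prompt.lower().split())  # collapse case and whitespace
        raw = f"{tenant_id}\x00{model}\x00{normalized}"
        return hashlib.sha256(raw.encode("utf-8")).hexdigest()

    def get(self, tenant_id: str, model: str, prompt: str) -> Optional[str]:
        key = self._key(tenant_id, model, prompt)
        entry = self._data.get(key)
        if entry is None:
            return None
        response, expires_at = entry
        if self.clock() >= expires_at:
            del self._data[key]  # expired
            return None
        self._data.move_to_end(key)  # mark as recently used
        return response

    def put(self, tenant_id: str, model: str, prompt: str, response: str) -> None:
        key = self._key(tenant_id, model, prompt)
        self._data[key] = (response, self.clock() + self.ttl_s)
        self._data.move_to_end(key)
        while len(self._data) > self.max_entries:
            self._data.popitem(last=False)  # evict the least recently used entry
```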

Resource Quota Management ensures fair usage across tenants. Quotas can be set for metrics like requests per minute, tokens processed per hour, or GPU time usage. These systems can include burst allowances, letting tenants exceed their limits temporarily when resources are available. Dynamic adjustments based on historical usage and current demand further optimize resource distribution.
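
A token bucket is one standard way to implement a quota with a burst allowance: the bucket refills at the tenant's steady rate, and its capacity sets how far a tenant can exceed that rate for a short time. The sketch below is generic and the example limits are hypothetical; it also does not check overall cluster headroom before granting a burst, which the approach described above would additionally require.

```python
import time
from typing import Callable


class TokenBucket:
    """Refills at `rate_per_s`; `burst` is how far a tenant may briefly exceed that rate."""

    def __init__(self, rate_per_s: float, burst: float,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.rate = rate_per_s
        self.capacity = float(burst)
        self.tokens = float(burst)  # start full
        self.clock = clock
        self.last = clock()

    def try_consume(self, amount: float = 1.0) -> bool:
        now = self.clock()
        # Refill for the elapsed time, capped at the burst capacity.
        self.tokens = min(self.capacity, self.tokens + (now - self.last) * self.rate)
        self.last = now
        if self.tokens >= amount:
            self.tokens -= amount
            return True
        return False


# Hypothetical quota: 100,000 LLM tokens per hour with a 20,000-token burst.
quotas = {"tenant-a": TokenBucket(rate_per_s=100_000 / 3600, burst=20_000)}
print(quotas["tenant-a"].try_consume(1_500))  # True: within the burst allowance
```

For a tokens-per-hour quota, set `rate_per_s` to the hourly limit divided by 3,600 and pass each request's token count as `amount`; keeping one bucket per tenant per metric (requests, tokens, GPU seconds) gives independent limits.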

Circuit Breaker Patterns act as a safeguard against cascading failures. They monitor error rates and response times, temporarily isolating underperforming instances to prevent system-wide disruptions.
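
A compact circuit breaker sketch follows. It treats both errors and responses slower than `slow_call_s` as failures, and for simplicity it counts consecutive failures rather than an error rate over a time window and lets all requests through while half-open; the thresholds are assumed defaults.

```python
import time
from typing import Callable


class CircuitBreaker:
    """closed -> open after repeated failures; open -> half_open after a cooldown;
    half_open -> closed on success, or back to open on failure."""

    def __init__(self, failure_threshold: int = 5, reset_timeout_s: float = 30.0,
                 slow_call_s: float = 10.0, clock: Callable[[], float] = time.monotonic) -> None:
        self.failure_threshold = failure_threshold
        self.reset_timeout_s = reset_timeout_s
        self.slow_call_s = slow_call_s  # responses slower than this count as failures
        self.clock = clock
        self.state = "closed"
        self.failures = 0
        self.opened_at = 0.0

    def allow_request(self) -> bool:
        if self.state == "open":
            if self.clock() - self.opened_at < self.reset_timeout_s:
                return False  # instance is isolated; route the request elsewhere
            self.state = "half_open"  # cooldown over: let trial traffic through
        return True

    def record(self, ok: bool, latency_s: float) -> None:
        if ok and latency_s <= self.slow_call_s:
            self.failures = 0
            self.state = "closed"
            return
        self.failures += 1
        if self.state == "half_open" or self.failures >= self.failure_threshold:
            self.state = "open"
            self.opened_at = self.clock()
```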

Performance Analytics and Monitoring

Analytics play a crucial role in fine-tuning load management and improving system performance.

Real-Time Metrics Collection is essential for optimizing load balancing. Key metrics include latency percentiles, throughput, GPU utilization, and memory usage. Tracking these metrics at both the system-wide and per-tenant levels helps pinpoint bottlenecks and identify usage trends. For LLM systems, distinguishing between time-to-first-token and overall response time can help diagnose specific performance issues.
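
A small sketch of per-tenant latency percentiles that keeps time-to-first-token (TTFT) separate from total response time. It assumes each request's start, first-token, and completion timestamps are recorded in seconds; the `RequestTiming` record and the nearest-rank percentile method are illustrative choices.

```python
import math
from dataclasses import dataclass


@dataclass
class RequestTiming:
    tenant: str
    start: float        # request received (seconds)
    first_token: float  # first output token sent
    end: float          # response completed


def percentile(values: list[float], p: float) -> float:
    """Nearest-rank percentile of a non-empty list."""
    if not values:
        raise ValueError("no samples")
    ordered = sorted(values)
    rank = math.ceil(p / 100 * len(ordered))
    return ordered[max(rank, 1) - 1]


def summarize(timings: list[RequestTiming], p: float = 95) -> dict[str, dict[str, float]]:
    by_tenant: dict[str, list[RequestTiming]] = {}
    for t in timings:
        by_tenant.setdefault(t.tenant, []).append(t)
    return {
        tenant: {
            f"ttft_p{p}_s": percentile([r.first_token - r.start for r in rows], p),
            f"total_p{p}_s": percentile([r.end - r.start for r in rows], p),
        }
        for tenant, rows in by_tenant.items()
    }
```

A tenant whose TTFT percentile rises while generation time stays flat is usually waiting in a queue or on prompt processing; a rise in total time alone usually points at longer outputs.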

Tenant-Specific Analytics provide deeper insights by tracking metrics like preferred model types, average prompt lengths, and usage patterns. This data enables more precise routing decisions, ensuring high-performance instances handle complex queries while simpler requests are routed to cost-efficient resources.

Automated Performance Tuning uses collected data to adjust load balancing settings dynamically. Machine learning algorithms can analyze historical trends to predict optimal routing and scaling decisions in real time. A/B testing different load balancing strategies can also reveal what works best under actual traffic conditions.

Alerting and Anomaly Detection systems help teams respond quickly to emerging issues. Alerts should trigger only when multiple performance indicators - like rising response times paired with higher error rates - point to a systemic problem. Anomaly detection tools can flag unusual usage patterns, offering opportunities for optimization or early warnings of potential problems.
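
The multi-signal rule and a basic anomaly check can be sketched as follows. The thresholds (1.5x baseline latency, 2x baseline error rate, a 1% error floor, a z-score of 3) are arbitrary starting points, and a z-score against recent history is only one simple form of anomaly detection.

```python
from statistics import mean, pstdev


def should_alert(p95_ms: float, error_rate: float, baseline_p95_ms: float,
                 baseline_error_rate: float, latency_factor: float = 1.5,
                 error_factor: float = 2.0, min_error_rate: float = 0.01) -> bool:
    """Fire only when latency AND errors both degrade, to avoid paging on one noisy signal."""
    latency_bad = p95_ms > latency_factor * baseline_p95_ms
    errors_bad = error_rate > max(min_error_rate, error_factor * baseline_error_rate)
    return latency_bad and errors_bad


def is_anomalous(history: list[float], current: float, z_threshold: float = 3.0) -> bool:
    """Flag a usage value (e.g. a tenant's hourly token count) far from its recent mean."""
    if len(history) < 2:
        return False  # not enough data to judge
    mu, sigma = mean(history), pstdev(history)
    if sigma == 0:
        return current != mu
    return abs(current - mu) / sigma > z_threshold
```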

Using Latitude for Dynamic Load Balancing

Latitude simplifies the process of implementing dynamic load balancing in multi-tenant large language model (LLM) systems. As an open-source platform, it equips teams with the tools they need to ensure efficient resource allocation and maintain strong system performance across multiple tenants.

Latitude Features for Multi-Tenant Systems

Latitude offers tools to streamline prompt engineering, enabling teams to create, version, and refine prompts tailored to each tenant's needs. It also provides a centralized environment for developing and maintaining reliable LLM features in production.

Implementing Best Practices with Latitude

By centralizing configuration management and documentation, Latitude helps teams track and address each tenant's requirements. These capabilities complement the dynamic load balancing strategies described earlier and help improve the overall performance of multi-tenant LLM deployments.

Collaborative Development in Latitude

Latitude encourages teamwork by offering a shared workspace that includes clear documentation, version control, and testing tools. These features simplify the process of optimizing prompts and configuring systems.

Iterative Optimization Workflows

Latitude’s built-in versioning and testing tools support iterative workflows. Teams can experiment with various load balancing strategies, analyze results using real-world data, and refine their approaches based on insights gained during testing.

Community Resources and Support

Latitude strengthens collaboration through its open-source ecosystem, which includes a GitHub page and an active Slack channel. These resources allow teams to share experiences, learn from others, and contribute improvements to the platform, fostering progress in designing multi-tenant LLM systems.

Conclusion

Dynamic load balancing is a cornerstone for effectively managing multi-tenant LLM systems. The unique challenges of resource contention, fluctuating latency, and ensuring stable service for multiple tenants demand strategies that go beyond traditional methods. This guide has broken down key approaches to help each tenant get the resources it needs when it needs them.

Key Takeaways

The success of deploying multi-tenant LLMs depends on smart load balancing strategies tailored to the specific demands of language model workloads. These systems require dynamic resource allocation due to the varying complexity of prompts and the diverse sizes of models.

From weighted round-robin techniques to predictive auto-scaling, the outlined strategies tackle these challenges while maintaining reliability for all tenants. Architectural principles such as horizontal scalability, clear tenant isolation, and robust monitoring also play a critical role in enabling responsive and efficient load distribution.

Latitude's open-source platform supports these efforts by providing tools for prompt engineering and system optimization. With resources like a GitHub repository and an active Slack community, teams can share insights and refine their approaches.

Next Steps for Implementation

To put these strategies into action, start by evaluating your current infrastructure to pinpoint bottlenecks. Understanding tenant usage patterns and resource demands will help you choose the most suitable load balancing approach.

Take advantage of collaborative tools like Latitude to streamline prompt engineering workflows and maintain detailed documentation. Establish strong monitoring and analytics systems to gain the visibility needed for smarter, data-driven decisions.

Begin with straightforward strategies, such as tenant-aware round-robin methods, before moving to more advanced predictive models. This step-by-step approach allows your team to build confidence and maintain system stability during the transition.

Investing in dynamic load balancing not only improves resource efficiency but also enhances the user experience and positions your multi-tenant LLM system for future scalability.

FAQs

How does dynamic load balancing enhance the performance of multi-tenant LLM systems compared to static approaches?

Dynamic load balancing plays a crucial role in boosting the performance of multi-tenant large language model (LLM) systems. It works by adjusting resource allocation on the fly to match changing workload demands. This real-time flexibility ensures resources are used wisely, avoiding bottlenecks and keeping response times steady - even when demand surges.

This approach improves resource utilization, helps cut operational costs, and keeps the system running smoothly. It is especially effective during workload spikes, helping every tenant receive fast, consistent service.

What challenges arise when implementing dynamic load balancing for large language models in a multi-tenant system?

Managing dynamic load balancing for large language models in multi-tenant systems isn't without its hurdles. One major issue is resource contention - when one tenant's high-demand workloads hog resources, it can drag down performance for everyone else. The result? Slower response times and a less-than-ideal user experience.

Another challenge comes from unpredictable workloads. Sudden spikes in demand can create bottlenecks, causing delays or even freezing sessions. Then there's the noisy neighbor problem, where a single tenant's activity eats up more than its fair share of shared resources, making it harder to ensure fair access for others. Addressing these issues requires well-thought-out strategies to keep performance steady and equitable across all tenants.

How does Latitude help improve dynamic load balancing for multi-tenant LLM systems?

Latitude makes managing dynamic load balancing in multi-tenant LLM systems easier by providing an open-source platform that bridges the gap between domain experts and engineers. It simplifies prompt engineering, deployment processes, and performance tracking, allowing teams to fine-tune how workloads are distributed on the fly.

Using Latitude, you can adjust resource allocation dynamically to keep your system running smoothly, improve reliability, and handle fluctuating tenant demands efficiently. This ensures seamless operations and makes scaling in complex multi-tenant setups much more manageable.