Getting Started with LLMs: Local Models & Prompting

Learn how to set up local LLMs using LM Studio, explore prompt engineering basics, and unlock the potential of AI-powered language models.

As the rapid evolution of artificial intelligence continues, mastering the use of Large Language Models (LLMs) has become a cornerstone of success for AI developers, machine learning engineers, and technical experts. While tools like ChatGPT have become almost ubiquitous, some professionals are turning to localized LLM solutions to maintain data privacy, enhance control, and streamline workflows. This article delves into how local LLM models work, explores their transformative applications, and provides actionable instructions for effective usage.

Understanding LLMs: What They Are and Why They Matter

Large Language Models, or LLMs, belong to the broader category of artificial intelligence and utilize deep learning techniques for two primary capabilities:

- Language Understanding: The ability to interpret and comprehend human input, whether it's a question, command, or prompt.

- Language Generation: The capacity to produce coherent, contextually relevant responses based on the input it receives.

Unlike traditional AI systems, which required multiple models for different tasks (e.g., translation, summarization), LLMs consolidate these abilities into a single, highly versatile system. This consolidation has made LLMs indispensable for countless applications spanning natural language understanding, creative writing, coding, and beyond.

The breakthrough in LLMs began with a 2017 research paper "Attention Is All You Need", which introduced the transformer architecture. By 2022, models like ChatGPT 3.5 reshaped the AI landscape, showcasing capabilities that could rival human expertise in problem-solving, text generation, and more.

Local vs. Cloud-Based LLMs: Why Local Models Matter

Most popular LLMs, such as ChatGPT or Google Bard, operate in the cloud, requiring users to send their data to external servers for processing. While effective, this approach raises concerns about data privacy and control. Enter local LLM models, which run entirely on the user’s own machine, ensuring:

- Enhanced Privacy: All data remains on your device, eliminating concerns about how external servers may handle sensitive information.

- Cost Efficiency: Avoid reliance on expensive API calls to cloud providers.

- Customizability: Tailor the model for specific use cases or workflows without external restrictions.

However, there is a trade-off: local LLMs may not match the performance of cloud-based models like GPT-4, particularly when processing complex queries. Nevertheless, for tasks that prioritize privacy and security, local models remain a powerful alternative.

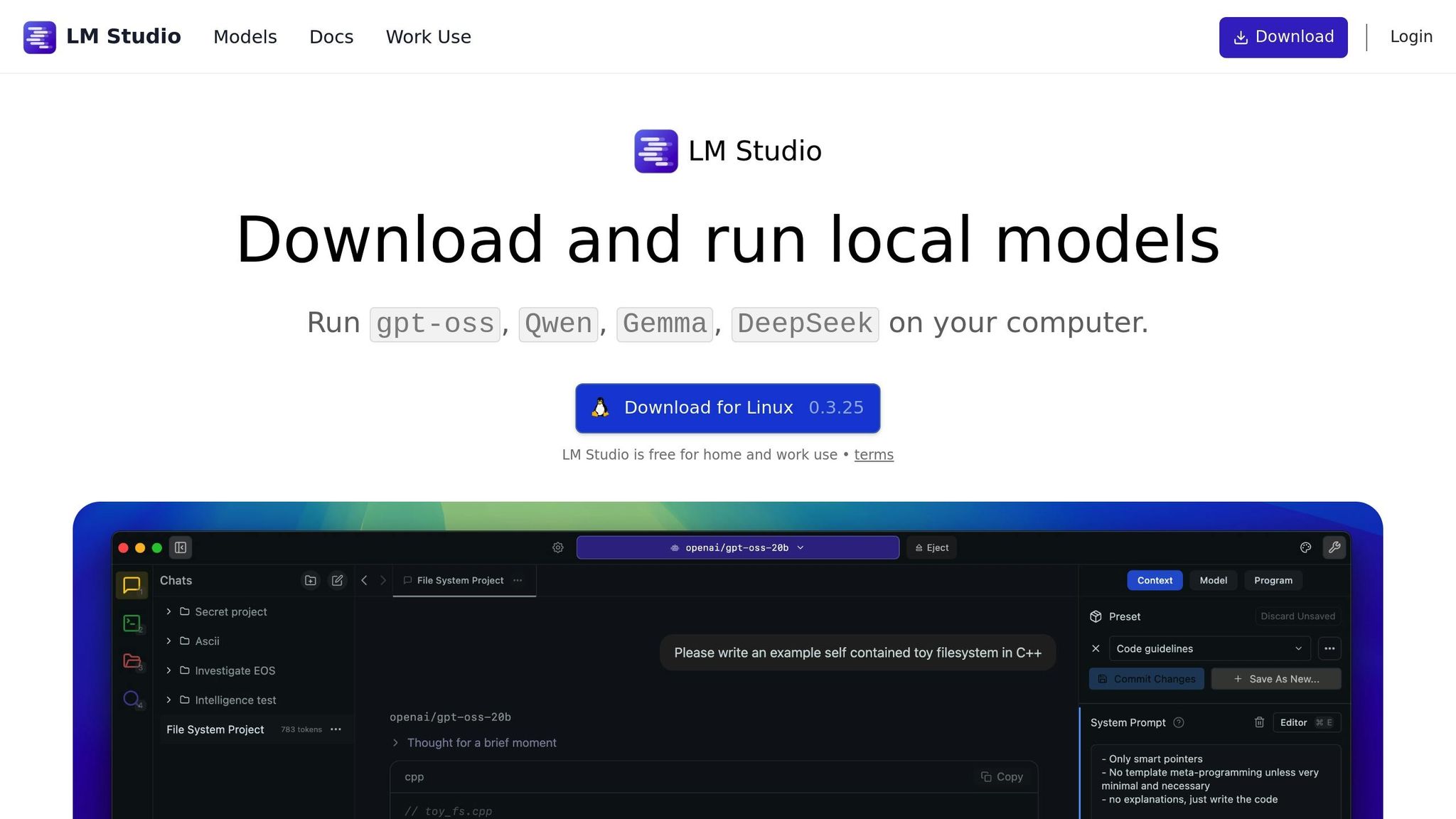

Getting Started with LM Studio: A Step-by-Step Guide

One of the standout tools for running local LLM models is LM Studio, a platform designed to simplify the process of downloading, running, and interacting with models on your local machine. Here’s a guide to getting started with LM Studio:

1. Install LM Studio

- Download the LM Studio software from the official website.

- Ensure you select the correct directory for installation, such as the D: drive, to avoid issues with system restrictions or security settings.

2. Choose and Download a Model

- Open the LM Studio dashboard after installation.

- Navigate to the Model Search Panel and browse available models.

- Start with smaller models like JMA 3 (1 billion parameters) for quick downloads and efficient performance on machines with limited RAM. For more powerful devices, you can explore larger models like 4 billion or 12 billion parameter versions.

3. Load the Model

- Select the downloaded model and load it into your system’s RAM. This step is critical as the model file (a large set of numerical parameters) needs to be accessible in memory for processing.

4. Upload Documents for Private Analysis

- Use LM Studio’s functionality to upload documents locally. The software ensures that all processing remains confined to your own device, providing a secure way to analyze sensitive files.

- Interact with the document by asking questions such as, "What is the total estimated cost in this file?" or "When is the specified event?"

By following these steps, you’ll have a private and secure LLM environment ready for real-world applications, from analyzing legal contracts to generating creative content.

What Makes LLMs Work? A Peek Under the Hood

Tokenization: How LLMs Understand Text

When interacting with an LLM, the text you input isn’t processed as a whole sentence or paragraph. Instead, it is broken down into smaller units called tokens. For example, the phrase "Bangladesh is beautiful" might be split into individual words, subwords (e.g., "Bangla"+"desh"), or even characters, depending on the tokenization strategy.

By using tokens, the LLM assigns numerical values to text inputs, enabling it to process natural language using mathematical logic. This mechanism is what allows the model to predict the next word (or token) during language generation.

Training LLMs: The Two-Step Process

Creating an LLM model involves two major stages:

- Pre-Training: In this stage, the model learns language understanding by analyzing vast amounts of raw, unsupervised data from the internet (e.g., books, websites, articles). The goal here is to provide the model with a foundational understanding of language patterns.

- Fine-Tuning: Here, the base model is refined using supervised datasets, which include specific prompts and their corresponding correct answers. This process ensures the model can generate responses aligned with human expectations and ethical guidelines.

Word-by-Word Output: Why LLMs Generate Gradually

When you ask an LLM a question, it generates responses one word (or token) at a time. This step-by-step process allows the model to refine its output based on the preceding context, ensuring coherence and relevance. It’s also why you see the "typing" effect in tools like ChatGPT.

Prompt Engineering: Maximizing LLM Effectiveness

To get the most out of LLMs, prompt engineering is essential. This involves crafting highly specific input instructions to guide the model’s behavior. A well-structured prompt includes:

- Persona: Define the role the LLM should assume (e.g., "You are a physics teacher who explains concepts clearly with examples").

- Audience: Specify who the response is intended for (e.g., "Explain this for a 9th-grade student").

- Context: Provide background information to help the model generate a tailored response.

- Tone: Choose a tone such as formal, casual, or professional.

- Constraints: Outline any restrictions (e.g., "Avoid mathematical formulas" or "Do not use examples involving dogs").

- Output Format: Specify the desired structure, such as bullet points, tables, or paragraphs.

Here’s an example prompt:

Persona: You are a friendly physics teacher. Audience: 9th-grade students in Bangladesh. Context: The student knows basic math but has not studied Newton’s laws. Tone: Casual and approachable. Constraints: Avoid using complex terminology.

This approach can dramatically improve the quality and relevance of the model’s responses.

Advanced Applications: Deep Research and Beyond

For professionals conducting in-depth research, tools like Google Gemini and Perplexity offer advanced capabilities. With "Deep Research" mode enabled, these tools can browse the web, analyze dozens of sources, and generate comprehensive reports. Examples of potential use cases include:

- Market Analysis: Comparing competitors in an industry and identifying market opportunities.

- Business Strategy: Crafting pathways for growth based on historical successes and failures of similar companies.

- Product Research: Gathering customer feedback and feature comparisons.

While these tools simplify research, it’s important to cross-check any findings with the original sources to ensure accuracy.

Key Takeaways

- LLMs combine language understanding and generation, enabling a wide range of applications from summarization to creative writing.

- Local LLM models like LM Studio prioritize data privacy by ensuring all processing occurs on your device.

- Effective prompt engineering involves defining persona, audience, context, tone, and constraints to guide the model's output.

- Tokenization and word-by-word generation are core components of how LLMs process and produce text.

- Tools like Google Gemini and Perplexity enable efficient deep research, saving hours of manual effort.

- Validate LLM outputs, especially in high-stakes use cases, to ensure accuracy and reliability.

- Use smaller models (e.g., 1 billion parameters) for lightweight tasks and scale up for complex workflows.

- Local LLMs are ideal for sensitive tasks like analyzing confidential documents or legal contracts.

Conclusion

Large Language Models are reshaping industries by making advanced AI accessible and adaptable. Whether you’re leveraging a cloud-based solution or a local model like LM Studio, understanding the underlying mechanics, training processes, and prompt engineering techniques can unlock transformative potential. As we continue to explore the capabilities of LLMs, they are poised to become as foundational as the internet itself - enabling innovation across every sector. Now is the time to embrace this revolution and build the future on top of these intelligent systems.

Source: "🚀 Getting Started with LLMs (Large Language Models)" - Salman Sayeed, YouTube, Aug 24, 2025 - https://www.youtube.com/watch?v=hpzzMndo1wM

Use: Embedded for reference. Brief quotes used for commentary/review.