Guide to Standardized Prompt Frameworks

Explore the essentials of standardized prompt frameworks for AI, enhancing efficiency, output quality, and safety in language model applications.

Prompt frameworks are structured systems for creating and managing prompts for large language models (LLMs). They help teams save time, improve output quality, and ensure safety. This guide explains the key components, tools, and benefits of using these frameworks.

Key Takeaways:

Why Use Prompt Frameworks?

They save time (up to 40% on large projects) and improve consistency. For example, Anthropic's 2023 framework cut harmful outputs by 87% while improving performance by 23%.

Core Elements of Frameworks:

- Fixed Components: Style guidelines, safety protocols, few-shot examples.

- Variable Components: User inputs, task-specific data, real-time context.

- Safety Features: Content filtering, hallucination detection, bias reduction.

Tools for Development:

- Latitude: For team collaboration and testing.

- LangChain, Promptable, Haystack, LMQL: Open-source tools for modular prompt design.

Testing and Metrics:

- Use tools like Promptfoo, Helicone, and OpenAI Evals.

- Measure success with metrics like task completion and consistency.

Future Trends:

- Cross-platform frameworks.

- AI-driven prompt refinement.

- New benchmarks like the HELM framework and Prompt Quality Score (PQS).

Quick Comparison of Popular Frameworks:

| Framework | Core Components | Best For | Key Advantage |
|---|---|---|---|
| SPEAR | 5-step process | Beginners | Simple and repeatable |
| ICE | Instruction, Context, Examples | Complex tasks | Easy for detailed prompts |
| CRISPE | 6-component system | Enterprise use | Built-in evaluation tools |
| CRAFT | Capability, Role, Action, Format, Tone | Specialized tasks | Precise control |

First Steps:

Start with tools like Latitude or LangChain for a pilot project. Focus on creating templates for common tasks and documenting prompt structures. This ensures a scalable and reliable workflow.

Key Elements of Prompt Frameworks

Creating effective prompt frameworks requires attention to the components that keep outputs from large language models (LLMs) consistent and reliable. Here's a breakdown of the essential elements that make these frameworks work in production settings.

Fixed vs. Variable Components

A strong framework separates static elements (like brand rules) from dynamic ones (like user inputs or live data). This distinction helps maintain consistency while adapting to specific tasks.

Fixed components often include:

- Brand/style guidelines

- Output format requirements

- Few-shot examples

- Safety protocols

Variable components include:

- User inputs

- Task-specific parameters

- Real-time data

- Dynamic context

This setup allows developers to preserve brand identity in templates while updating details, such as product information, as needed.
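The split between fixed and variable components can be sketched in a few lines of Python. This is a minimal illustration rather than any particular framework's API: the `FixedComponents` class, the `build_prompt` function, the template wording, and the "Acme" values are all hypothetical.

```python
from dataclasses import asdict, dataclass
from string import Template

# Fixed components live in the template text and in FixedComponents;
# variable components arrive as arguments on every request.
PROMPT_TEMPLATE = Template(
    "You are a writing assistant for $brand.\n"
    "Style guidelines: $style_guidelines\n"
    "Safety rules: $safety_rules\n"
    "Output format: $output_format\n"
    "Example: $few_shot_example\n"
    "---\n"
    "Context: $context\n"
    "Request: $user_input"
)


@dataclass(frozen=True)
class FixedComponents:
    """Static, pre-approved text that is identical for every request."""
    brand: str
    style_guidelines: str
    safety_rules: str
    output_format: str
    few_shot_example: str


def build_prompt(fixed: FixedComponents, user_input: str, context: str) -> str:
    """Combine the fixed components with per-request variable values."""
    return PROMPT_TEMPLATE.substitute(
        asdict(fixed), user_input=user_input, context=context
    )


# Hypothetical values, for illustration only.
fixed = FixedComponents(
    brand="Acme",
    style_guidelines="Friendly, concise, no jargon.",
    safety_rules="Never give medical or legal advice.",
    output_format="Two short sentences.",
    few_shot_example="Q: Describe the mug. A: A sturdy 350 ml ceramic mug.",
)
print(build_prompt(fixed, "Describe the new kettle.", "Kettle: 1.7 L, steel"))
```

Because `FixedComponents` is frozen, the brand and safety text cannot be changed accidentally at request time; only `user_input` and `context` vary per call.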

Parameter Settings and Context Management

Managing parameters effectively is key to balancing creativity with consistency in LLM outputs. Here’s a quick guide:

| Parameter | Suggested Range |
|---|---|
| Temperature | 0.1–0.5 (for precision), 0.7–1.0 (for creativity) |
| Top-p | 0.1–0.5 (narrow focus), 0.7–0.9 (broader focus) |
| Presence Penalty | 0.5–1.0 |
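One way to enforce these ranges is a small check that runs before a request is sent. The sketch below encodes the table as data; the profile names and the pairing of "narrow focus" top-p with the precision profile are assumptions made for illustration, and `out_of_range` is a hypothetical helper.

```python
# Suggested ranges from the table, grouped into two illustrative profiles.
# Assumption: narrow top-p goes with precision, broader top-p with creativity.
SUGGESTED_RANGES = {
    "precision": {
        "temperature": (0.1, 0.5),
        "top_p": (0.1, 0.5),
        "presence_penalty": (0.5, 1.0),
    },
    "creativity": {
        "temperature": (0.7, 1.0),
        "top_p": (0.7, 0.9),
        "presence_penalty": (0.5, 1.0),
    },
}


def out_of_range(profile: str, params: dict) -> list:
    """Return the names of parameters that fall outside the suggested range."""
    ranges = SUGGESTED_RANGES[profile]
    flagged = []
    for name, value in params.items():
        if name not in ranges:
            continue  # parameters without a suggested range are not checked
        low, high = ranges[name]
        if not (low <= value <= high):
            flagged.append(name)
    return flagged


print(out_of_range("precision", {"temperature": 0.9, "top_p": 0.3}))
```

A check like this is most useful as a warning in code review or CI, since the ranges are guidelines rather than hard limits.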

Optimizing context can improve relevance by 40–60%. Common techniques include:

- Using semantic compression to save token space

- Applying sliding window methods for ongoing conversations

- Prioritizing key details upfront

- Incorporating external memory systems to retain critical information
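The sliding window technique, combined with keeping key details upfront, might look like the following. This is a rough sketch: `approx_tokens` counts whitespace-separated words as a stand-in for a real tokenizer, and the message dictionaries with `role` and `content` keys are an assumed format.

```python
def approx_tokens(text: str) -> int:
    """Rough token estimate. Assumption: one token per whitespace-separated word."""
    return len(text.split())


def sliding_window(messages: list, max_tokens: int) -> list:
    """Keep system messages plus the most recent turns that fit the budget."""
    system = [m for m in messages if m["role"] == "system"]
    turns = [m for m in messages if m["role"] != "system"]

    # System messages carry the key details, so they are always kept upfront.
    budget = max_tokens - sum(approx_tokens(m["content"]) for m in system)

    kept = []
    for message in reversed(turns):  # walk from newest to oldest
        cost = approx_tokens(message["content"])
        if cost > budget:
            break  # older turns no longer fit
        kept.append(message)
        budget -= cost

    return system + list(reversed(kept))  # restore chronological order


history = [
    {"role": "system", "content": "Be brief."},
    {"role": "user", "content": "one two three"},
    {"role": "assistant", "content": "four five"},
    {"role": "user", "content": "six seven eight"},
]
for message in sliding_window(history, max_tokens=8):
    print(message["role"], "->", message["content"])
```

With a budget of 8, the oldest user turn is dropped while the system message and the two most recent turns are kept.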

Safety Controls and Checks

A robust safety system is critical for responsible AI use. Modern frameworks include multiple safeguards to reduce risks.

Key measures include:

- Content filtering to block prohibited terms

- Output sanitization to protect sensitive data

- Hallucination detection through fact-checking tools

- Bias reduction using diverse training data

- User feedback systems for continuous refinement

These measures help the framework deliver secure and accurate results. For example, content filtering and output validation work together to prevent inappropriate responses while maintaining task performance. These technical foundations set the stage for the collaborative engineering platforms discussed in the next section.
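As a small illustration of content filtering and output sanitization working together, consider the sketch below. The blocked-term list, the email pattern, and the function names are hypothetical; a production system would likely rely on dedicated moderation and PII-detection tooling rather than a keyword list and one regular expression.

```python
import re
from typing import Optional

# Hypothetical prohibited terms, for illustration only.
BLOCKED_TERMS = {"confidential", "internal-only"}

# Simple email pattern used to demonstrate redaction of sensitive data.
EMAIL_PATTERN = re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+")


def violates_filter(text: str) -> bool:
    """Content filter: True when the text contains a prohibited term."""
    lowered = text.lower()
    return any(term in lowered for term in BLOCKED_TERMS)


def sanitize(text: str) -> str:
    """Output sanitization: redact email addresses before returning text."""
    return EMAIL_PATTERN.sub("[redacted email]", text)


def safe_output(text: str) -> Optional[str]:
    """Return sanitized text, or None when the content filter blocks it."""
    if violates_filter(text):
        return None
    return sanitize(text)


print(safe_output("Contact jane@example.com for help"))
print(safe_output("This report is CONFIDENTIAL"))
```

Returning `None` for blocked content forces the caller to decide explicitly what to show instead, such as a fallback message.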

Development Tools and Platforms

Development tools play a critical role in making prompt frameworks scalable and efficient. They focus on three main areas: collaboration, modular design, and streamlined deployment.

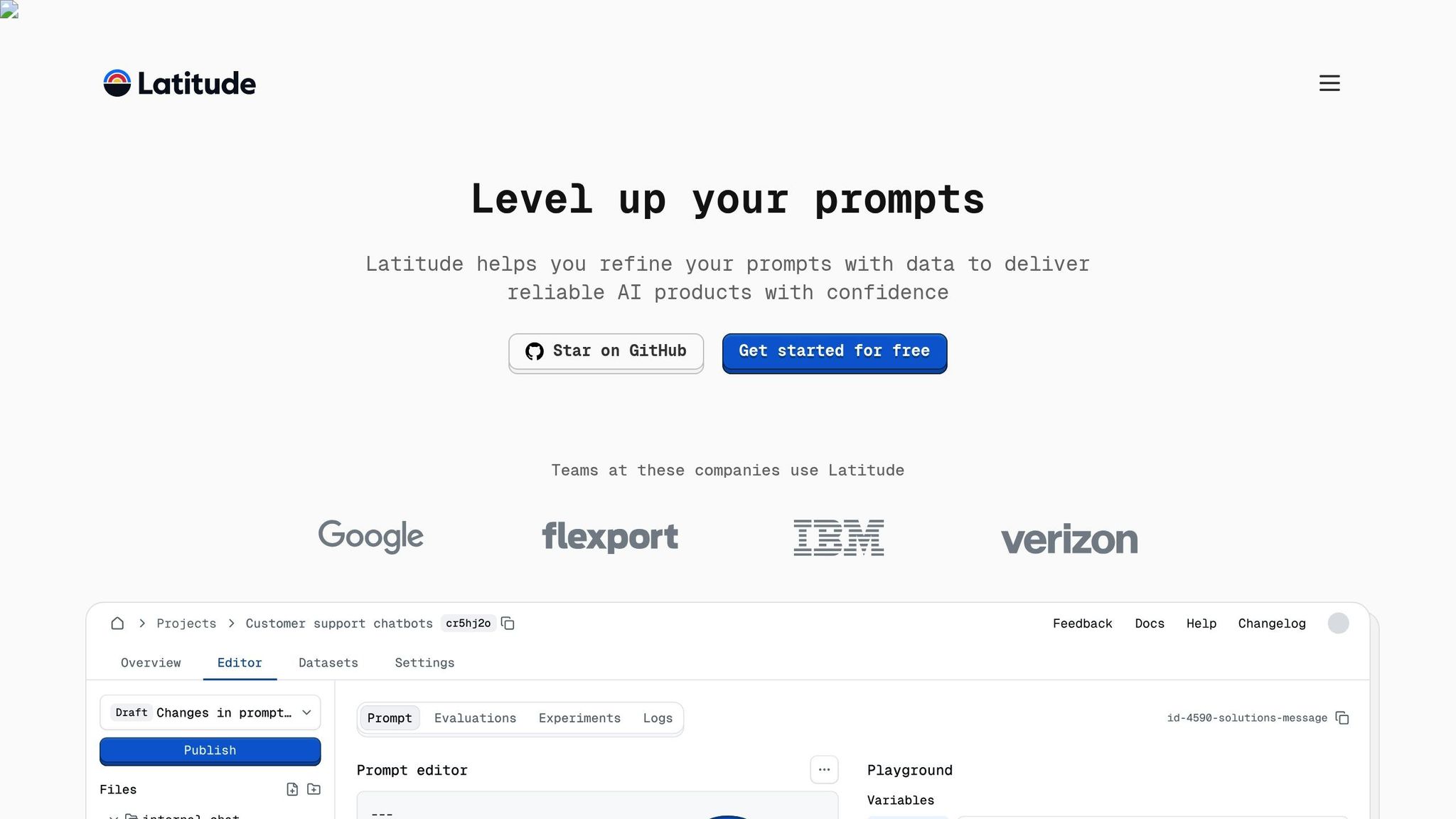

Latitude: Team Collaboration for Prompt Engineering

Latitude is designed for teams working on prompt development. Its standout features include:

- Role-based Access: Assign different permission levels to team members.

- Real-time Collaboration: Work together with live editing and commenting.

- Testing Environment: Use a sandbox to test and validate prompts.

- API Integration: Connect seamlessly with other systems.

This platform supports teamwork by incorporating version control and safety measures, ensuring prompts align with framework requirements.

Open-Source Framework Tools

The open-source community offers several frameworks for creating modular prompt templates. Here's a comparison of the leading options:

| Framework | Key Feature | Best Use Case | Learning Curve |
|---|---|---|---|
| LangChain | Comprehensive tools | Complex applications | High |
| Promptable | Visual interface | Rapid prototyping | Low |
| Haystack | Retrieval capabilities | Search-focused tasks | Medium |
| LMQL | Fine-grained control | Custom constraints | High |

LangChain is widely adopted in enterprise settings due to its extensive toolset. These frameworks bring the principles of parameter management and modular design to life.

Code Management and Deployment

Successful deployment of these tools requires strategies that align with framework principles:

- Version Control: Use Git-based systems to track prompt changes and support collaborative development.

- CI/CD Automation: Integrate workflows with automated testing, performance benchmarking, safety checks, and rollback options.

- Environment Isolation: Leverage containerization to ensure consistent deployments across different environments.

These practices ensure smooth implementation and maintain the reliability of the tools and frameworks.
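Two of these practices are easy to prototype in plain Python: deriving a version identifier from a prompt's content, and a CI-style check that a template still contains the placeholders the application expects. The function names and the sample template below are hypothetical.

```python
import hashlib
import string


def prompt_version(template: str) -> str:
    """Short content hash: any edit to the template yields a new version id."""
    return hashlib.sha256(template.encode("utf-8")).hexdigest()[:12]


def placeholders(template: str) -> set:
    """Names of the {fields} that a str.format-style template expects."""
    return {
        field_name
        for _, field_name, _, _ in string.Formatter().parse(template)
        if field_name
    }


def missing_placeholders(template: str, required: set) -> list:
    """CI-style check: required placeholders that the template lacks."""
    return sorted(required - placeholders(template))


template = "Summarize {document} in a {tone} tone."
print("version:", prompt_version(template))
print("missing:", missing_placeholders(template, {"document", "tone", "length"}))
```

In a pipeline, a non-empty result from `missing_placeholders` could fail the build, and the version id could be logged with each model call to make rollbacks traceable.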

Testing and Improvement Methods

Testing prompt frameworks requires a structured approach that combines automated tools with human oversight. This ensures the frameworks deliver consistent and dependable results across different environments. These methods expand on earlier deployment strategies to refine performance.

Performance Metrics

Metrics such as task completion rate and output consistency show whether a prompt framework is doing its job. Research indicates that systematic testing can boost output quality by up to 30% [1].
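Two metrics named elsewhere in this guide, task completion and consistency, can be computed with very little code. The definitions below are one reasonable interpretation, not a standard: completion is the share of outputs that pass a caller-supplied check, and consistency is the share of repeated runs that agree with the most common answer.

```python
from collections import Counter
from typing import Callable, Sequence


def task_completion_rate(
    outputs: Sequence[str], is_complete: Callable[[str], bool]
) -> float:
    """Share of outputs that satisfy the caller's completion check."""
    if not outputs:
        raise ValueError("outputs must not be empty")
    return sum(1 for output in outputs if is_complete(output)) / len(outputs)


def consistency(outputs: Sequence[str]) -> float:
    """Share of runs matching the most common output (1.0 means identical)."""
    if not outputs:
        raise ValueError("outputs must not be empty")
    normalized = [output.strip().lower() for output in outputs]
    most_common_count = Counter(normalized).most_common(1)[0][1]
    return most_common_count / len(normalized)


# Four runs of the same sentiment-classification prompt (made-up data).
runs = ["Positive", "positive ", "Negative", "positive"]
valid_labels = {"positive", "negative"}
print(task_completion_rate(runs, lambda o: o.strip().lower() in valid_labels))
print(consistency(runs))
```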

Testing Approaches

Two main methods dominate prompt framework testing: dataset testing and live production testing. Each serves a unique purpose during development.

- Dataset Testing: This method uses predefined datasets to examine how prompts perform in controlled scenarios. It’s ideal for early-stage validation and allows for quick adjustments before deployment.

- Live Production Testing: This approach provides insights from real-world use. It highlights edge cases, uncovers user behavior patterns, and measures latency under actual workload conditions.
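Dataset testing can start as a simple loop over predefined cases. In the sketch below, `fake_model` is a stub standing in for a real LLM call, and the case format (an `inputs` dictionary plus an `expected` substring) is an assumption chosen for brevity.

```python
from typing import Callable


def run_dataset(template: str, cases: list, model: Callable[[str], str]) -> dict:
    """Run every case through the model and collect failures."""
    failures = []
    for case in cases:
        prompt = template.format(**case["inputs"])
        output = model(prompt)
        # Pass when the expected label appears in the output (case-insensitive).
        if case["expected"].lower() not in output.lower():
            failures.append({"prompt": prompt, "output": output})
    return {
        "total": len(cases),
        "passed": len(cases) - len(failures),
        "failures": failures,
    }


def fake_model(prompt: str) -> str:
    """Stub model for the example; replace with a real LLM client."""
    return "positive" if "love" in prompt.lower() else "negative"


cases = [
    {"inputs": {"text": "I love this phone"}, "expected": "positive"},
    {"inputs": {"text": "I hate the battery"}, "expected": "negative"},
    {"inputs": {"text": "It is okay"}, "expected": "neutral"},
]
report = run_dataset("Classify the sentiment of: {text}", cases, fake_model)
print(report["passed"], "of", report["total"], "cases passed")
```

The third case fails on purpose: the stub never returns "neutral", which is the kind of gap a dataset run is meant to surface before deployment.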

Testing Tools and Platforms

Several tools are available to streamline prompt testing. Here are three popular options:

- Promptfoo: An open-source tool designed for batch testing prompts in predefined scenarios. It integrates easily with CI/CD pipelines for automated checks.

- Helicone: Offers real-time monitoring with a dashboard that tracks metrics like latency and token usage in production settings.

- OpenAI Evals: Provides customizable templates for different use cases and supports model comparisons, making it a versatile evaluation suite.

These tools are essential for creating a continuous testing workflow. They help teams identify issues early, maintain quality, and adapt to changing requirements. Updating datasets regularly ensures the testing process remains effective as needs evolve. This approach aligns with the framework development strategies while staying flexible for future demands.

Next Steps in Prompt Engineering

Three key trends are shaping the future of prompt engineering, building on collaborative development tools:

Multi-Platform Standards

Efforts to create unified frameworks for prompts across various LLM platforms are gaining traction. Here's how:

- Abstraction layers like LangChain allow developers to craft prompts once and deploy them across providers such as OpenAI and Anthropic with minimal changes.

- Cross-platform testing suites ensure consistent results, regardless of the platform used.

These initiatives align well with earlier discussions on parameter management strategies.
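The idea behind an abstraction layer can be shown without any library. The sketch below is hand-rolled and is not LangChain's API: it keeps one provider-neutral prompt and converts it into two simplified request bodies, one that places the system text inside the message list and one that passes it as a separate top-level field. The payload shapes are modeled loosely on chat-style APIs and omit required fields such as the model name, so treat them as assumptions and check each provider's current documentation.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PortablePrompt:
    """Provider-neutral prompt: written once, converted per platform."""
    system: str
    user: str


def to_openai_style(prompt: PortablePrompt) -> dict:
    """Simplified body where the system text is the first chat message."""
    return {
        "messages": [
            {"role": "system", "content": prompt.system},
            {"role": "user", "content": prompt.user},
        ]
    }


def to_anthropic_style(prompt: PortablePrompt) -> dict:
    """Simplified body where the system text is a top-level field."""
    return {
        "system": prompt.system,
        "messages": [{"role": "user", "content": prompt.user}],
    }


prompt = PortablePrompt(
    system="Answer in one sentence.",
    user="What is a prompt framework?",
)
print(to_openai_style(prompt))
print(to_anthropic_style(prompt))
```

Keeping the conversion in small pure functions also makes cross-platform testing straightforward, because the same `PortablePrompt` can be rendered for each provider and compared.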

AI-Driven Prompt Updates

Automated systems are now taking the lead in refining prompts by leveraging:

- Adjustments based on performance data.

- Iterative testing to optimize prompt variations using specific metrics.

- Reinforcement learning that incorporates user feedback.

- Systems that adapt to emerging patterns.

This approach ensures prompts evolve in response to real-world performance.
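A very simple form of feedback-driven refinement is an epsilon-greedy selector: it mostly serves the prompt variant with the best average score so far and occasionally explores the others. This is a generic bandit technique used here for illustration, not a method the tools above are known to implement; the `VariantSelector` class and the reward values are hypothetical.

```python
import random


class VariantSelector:
    """Epsilon-greedy choice among prompt variants based on feedback scores."""

    def __init__(self, variants, epsilon=0.1, seed=None):
        # Per variant: [number of trials, sum of rewards].
        self.stats = {variant: [0, 0.0] for variant in variants}
        self.epsilon = epsilon
        self.rng = random.Random(seed)

    def mean_reward(self, variant):
        trials, total = self.stats[variant]
        return total / trials if trials else 0.0

    def choose(self):
        """Try each variant once, then exploit the best with rare exploration."""
        untried = [v for v, (trials, _) in self.stats.items() if trials == 0]
        if untried:
            return untried[0]
        if self.rng.random() < self.epsilon:
            return self.rng.choice(list(self.stats))
        return max(self.stats, key=self.mean_reward)

    def record(self, variant, reward):
        """Store a feedback score, for example 1.0 for a thumbs-up."""
        self.stats[variant][0] += 1
        self.stats[variant][1] += reward


selector = VariantSelector(["concise", "detailed"], epsilon=0.0, seed=0)
selector.record("concise", 0.2)   # made-up feedback scores
selector.record("detailed", 0.9)
print(selector.choose())
```

With `epsilon=0.0` the selector always exploits; a small positive value keeps testing weaker variants in case user preferences shift.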

Industry Testing Standards

New benchmarks are setting the bar for evaluating prompt quality, including:

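- HELM (Holistic Evaluation of Language Models): A benchmark from Stanford's Center for Research on Foundation Models that evaluates models across multiple dimensions, such as accuracy, robustness, and fairness.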

- Prompt Quality Score (PQS): Developed by the Prompt Engineering Institute, this metric assesses prompts based on "task completion, output consistency, and safety adherence".

These methods build on the performance metrics and testing strategies discussed earlier, providing a more structured way to measure effectiveness.

Summary

Main Advantages

Standardized prompt frameworks have changed the way organizations create and manage LLM applications. By using structured approaches for template design and testing, teams can achieve better results.

Here’s a quick look at the key benefits:

| Benefit | Impact | Implementation Example |
|---|---|---|
| Scalability | Ensures consistent processes across teams | Pre-designed safety protocols and testing workflows |
| Reusability | Reduces repetitive work with templates | Ready-to-use templates for tasks like classification or summarization |
| Quality Control | Adds safety and accuracy checks systematically | Automated tools to test for bias and ensure accuracy |
| Collaboration | Enhances communication across teams | Built-in feedback systems and review tools |

These benefits are tied to the core components discussed earlier, such as parameter management and safety measures, streamlining the entire development process.

First Steps

For teams new to prompt engineering, tools like Latitude are a good place to begin. They provide collaborative workspaces and built-in testing features to make the process smoother.

Kick off with a pilot project using Latitude or LangChain. Focus on documenting prompt structures and creating templates for common tasks like classification. This approach sets the foundation for scaling and improving your workflows.

FAQs

Which prompt engineering frameworks are recommended, given the standardization principles discussed?

Prompt engineering frameworks have grown to meet diverse needs, offering structured approaches to crafting effective prompts. One standout is the SPEAR Framework (Start, Provide, Explain, Ask, Rinse & Repeat), created by Britney Muller. It aligns closely with the safety controls and testing methods mentioned earlier [1].

| Framework | Core Components | Best For | Key Advantage |
|---|---|---|---|
| SPEAR | 5-step process | Beginners & quick implementation | Easy-to-follow, repeatable process |
| ICE | Instruction, Context, Examples | Detailed prompt development | Simplifies creating prompts for complex tasks |
| CRISPE | 6-component system | Enterprise-level applications | Includes built-in evaluation for thorough testing |
| CRAFT | Capability, Role, Action, Format, Tone | Specialized AI interactions | Offers precise control over responses |

These frameworks work seamlessly with platforms like Latitude, using templates and testing workflows to ensure consistency. Your choice depends on your goals: SPEAR is ideal for fast, straightforward setups, while CRISPE is better for large-scale, enterprise systems requiring deeper evaluation.
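To make one of these frameworks concrete, the five CRAFT components from the table can be modeled as a small data class that renders a prompt. The class name, the rendering layout, and the sample values are assumptions for illustration; CRAFT itself does not prescribe a code representation.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class CraftPrompt:
    """The five CRAFT components: Capability, Role, Action, Format, Tone."""
    capability: str
    role: str
    action: str
    output_format: str
    tone: str

    def render(self) -> str:
        """Render one labeled line per component, in CRAFT order."""
        return "\n".join(
            [
                f"Capability: {self.capability}",
                f"Role: {self.role}",
                f"Action: {self.action}",
                f"Format: {self.output_format}",
                f"Tone: {self.tone}",
            ]
        )


# Hypothetical example values.
craft = CraftPrompt(
    capability="Summarizing customer support tickets",
    role="Senior support analyst",
    action="Summarize the ticket below and flag urgent issues",
    output_format="Three bullet points",
    tone="Neutral and factual",
)
print(craft.render())
```

Because every field is required, a prompt that skips a component fails at construction time rather than producing an incomplete prompt silently.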