How Real-Time Traffic Monitoring Improves LLM Load Balancing

Explore how real-time traffic monitoring enhances load balancing for large language models, optimizing performance and reliability.

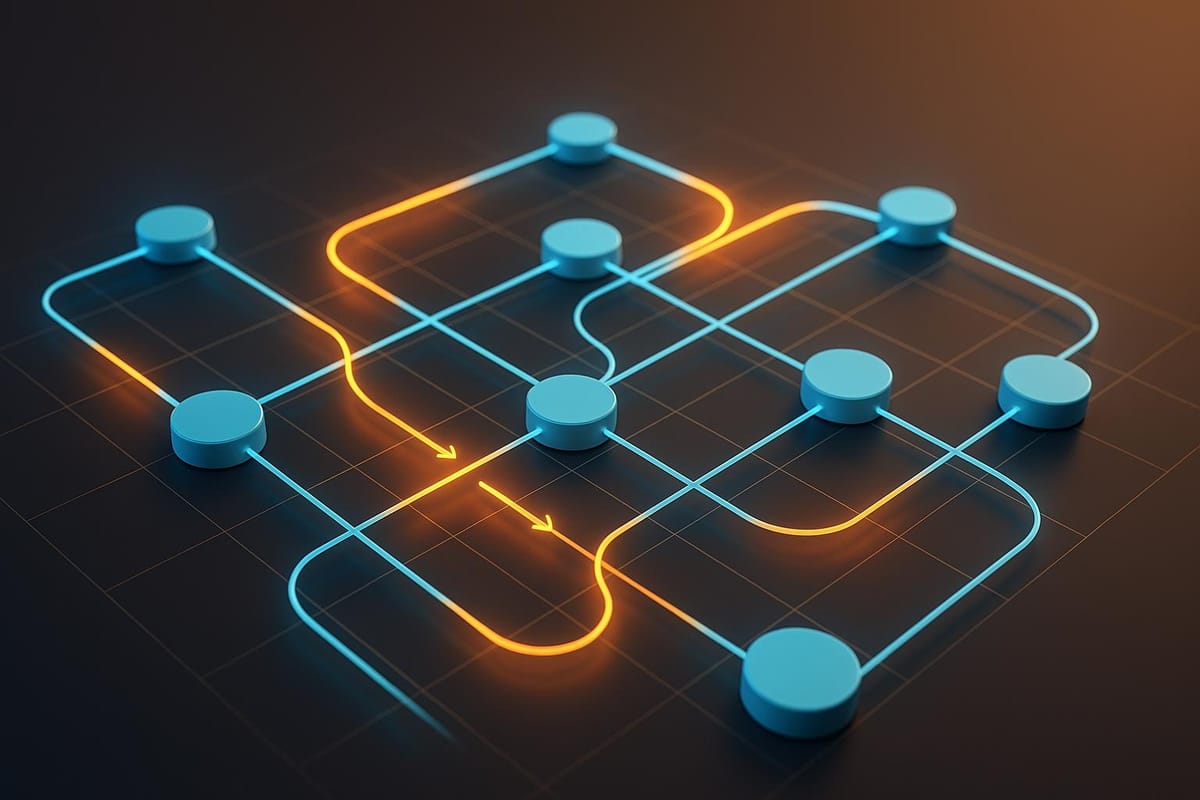

Real-time traffic monitoring transforms how large language models (LLMs) handle requests by enabling smarter, data-driven load balancing. Traditional static methods, like round-robin distribution, fail to address the unpredictable traffic patterns and resource demands of LLMs. In contrast, real-time monitoring continuously tracks metrics like latency, throughput, and error rates, allowing systems to make immediate adjustments.

Here’s how it works:

- Dynamic Routing: Routes requests to the most efficient models based on live metrics. Research on latency-aware routing reports that this can cut p95 latency by over 30% during traffic surges.

- Proactive Issue Detection: Spots performance dips or errors as they happen, preventing bottlenecks and keeping operations smooth.

- Adaptive Resource Use: Balances workloads across models, avoiding overuse of high-cost models while maintaining performance.

- Automated Failover: Redirects traffic from failing models to healthier ones, maintaining uptime and reliability.

Though effective, real-time monitoring comes with challenges like added complexity, resource overhead, and maintenance efforts. Platforms like Latitude simplify this process by offering tools for tracking metrics, configuring routing strategies, and fostering collaboration between teams.

In short, real-time monitoring helps LLM applications meet high-performance demands while optimizing costs and reliability.

Core Principles of Dynamic Load Balancing in LLM Applications

Key Challenges in Load Balancing for LLMs

Large language model (LLM) applications come with their own set of challenges that make traditional load balancing methods fall short. These challenges include varying query complexities, unpredictable resource demands, and the need to maintain context across interactions - all while keeping costs in check. For instance, a short classification or extraction prompt might return in well under a second, while a long summarization or complex reasoning task could take several seconds or even longer.

Resource demands are equally unpredictable. A lightweight prompt may barely use GPU resources, but generating intricate code or handling large datasets can push processing capacity to its limits. This variability makes it difficult to evenly distribute workloads.

Another hurdle is statefulness. Many LLM applications require maintaining context over multiple interactions, which complicates workload distribution. Traditional methods like round-robin scheduling don’t account for the ongoing computational load on each instance, leading to potential bottlenecks.

Cost considerations add another layer of difficulty. LLM endpoints often have different rate limits, reliability levels, and pricing structures, meaning that effective load balancing must juggle performance, budget constraints, and usage quotas simultaneously.

Dynamic Load Balancing Strategies

Dynamic load balancing tackles these challenges with smarter, real-time routing techniques. One example is weighted round-robin, which assigns weights to model instances based on their capacity and performance so that more capable instances handle a larger share of the workload. On its own, weighted round-robin is static; it becomes dynamic when the weights are recalculated from live metrics.
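To make the weighting concrete, here is a minimal Python sketch of smooth weighted round-robin, the interleaving variant used by nginx. The backend names and weights are hypothetical, and `set_weight` is an assumed hook through which a monitoring loop could push refreshed weights; it is not the API of any particular load balancer.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class Backend:
    name: str
    weight: int       # capacity weight; may be refreshed from live metrics
    current: int = 0  # running score used by the smooth WRR algorithm


class SmoothWeightedRoundRobin:
    """Smooth weighted round-robin (the interleaving variant used by nginx).

    On every pick each backend's score grows by its weight, the highest score
    wins, and the winner's score is reduced by the total weight. Over one full
    cycle each backend is chosen `weight` times, spread out rather than in bursts.
    """

    def __init__(self, weights: dict[str, int]) -> None:
        self.backends = [Backend(name, w) for name, w in weights.items()]

    def set_weight(self, name: str, weight: int) -> None:
        # Hypothetical hook for a monitoring loop to push refreshed weights.
        for b in self.backends:
            if b.name == name:
                b.weight = weight

    def pick(self) -> str:
        total = 0
        best: Backend | None = None
        for b in self.backends:
            b.current += b.weight
            total += b.weight
            if best is None or b.current > best.current:
                best = b
        if best is None or total <= 0:
            raise RuntimeError("no backend with a positive weight")
        best.current -= total
        return best.name
```

With weights `{"a": 5, "b": 1, "c": 1}`, seven consecutive picks yield a, a, b, a, c, a, a: the heavy backend gets five of seven requests without receiving them back to back.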

Another approach, latency-based routing, directs traffic to the models that respond the fastest. By continuously monitoring response times, this strategy helps minimize delays, especially during traffic surges.
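Latency-based routing needs a per-backend latency estimate that is stable yet still responsive. A common choice, assumed here, is an exponentially weighted moving average (EWMA). The sketch below probes backends with no measurements first and then picks the lowest average; the backend names and the `alpha` smoothing factor are illustrative.

```python
from __future__ import annotations


class LatencyRouter:
    """Route each request to the backend with the lowest EWMA latency."""

    def __init__(self, backends: list[str], alpha: float = 0.2) -> None:
        if not 0 < alpha <= 1:
            raise ValueError("alpha must be in (0, 1]")
        self.alpha = alpha
        # None means "not measured yet".
        self.ewma_ms: dict[str, float | None] = {b: None for b in backends}

    def record(self, backend: str, latency_ms: float) -> None:
        prev = self.ewma_ms[backend]
        # The first sample seeds the average; later samples are blended in.
        self.ewma_ms[backend] = latency_ms if prev is None else (
            self.alpha * latency_ms + (1 - self.alpha) * prev
        )

    def pick(self) -> str:
        # Probe unmeasured backends before trusting the averages.
        for backend, value in self.ewma_ms.items():
            if value is None:
                return backend
        return min(self.ewma_ms, key=lambda b: self.ewma_ms[b])
```

A larger `alpha` reacts faster but is noisier. Production routers often add some randomness (for example, choosing the better of two randomly sampled backends) so that every request does not pile onto the single fastest instance.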

Queue-based balancing is another effective method, where new requests are routed to instances with the shortest queues, ensuring smoother traffic flow. Similarly, usage-based routing considers real-time quotas and rate limits. For example, if a premium model is nearing its usage cap, the system can automatically redirect traffic to backup models, maintaining performance without exceeding budget limits.
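Queue-based and usage-based routing combine naturally: filter out models that are close to their quota, then send the request to the shortest queue among the rest. This is a minimal sketch; the token-based quota, the `headroom` fraction, and the model names are assumptions for illustration.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class ModelState:
    name: str
    in_flight: int    # requests currently queued or running on this model
    tokens_used: int  # tokens consumed in the current quota window
    token_quota: int  # hypothetical per-window token budget


def pick_model(models: list[ModelState], est_tokens: int, headroom: float = 0.9) -> str:
    """Shortest-queue routing restricted to models with quota headroom left.

    A model is eligible only if serving this request keeps it under
    `headroom` * quota, so traffic shifts to backups before the hard cap.
    """
    eligible = [
        m for m in models
        if m.tokens_used + est_tokens <= headroom * m.token_quota
    ]
    if not eligible:
        raise RuntimeError("all models are at or near their quota")
    return min(eligible, key=lambda m: m.in_flight).name
```

For example, with a premium model at 8,900 of 10,000 tokens and a 90% headroom, a 50-token request still goes to the premium model (if its queue is shorter), but a 200-token request is redirected to the backup.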

Often, these strategies are combined into sophisticated systems. For instance, a load balancer might primarily use latency-based routing but switch to queue-based balancing during traffic spikes, all while keeping usage limits in mind. The key is to rely on real-time metrics to adapt dynamically to changing conditions.
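A combined policy like the one just described can be sketched in a few lines. Everything here is an assumption for illustration: the `Stats` fields, the `spike_threshold` on total queue depth that signals a traffic spike, and the model names.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class Stats:
    latency_ms: float  # smoothed recent latency
    queue_depth: int   # requests waiting or in flight
    under_quota: bool  # result of a separate usage/rate-limit check


def route(stats: dict[str, Stats], spike_threshold: int = 100) -> str:
    """Latency-based routing by default, queue-based during traffic spikes."""
    eligible = {name: s for name, s in stats.items() if s.under_quota}
    if not eligible:
        raise RuntimeError("no model within its usage limits")
    total_queue = sum(s.queue_depth for s in stats.values())
    if total_queue >= spike_threshold:
        # Assumption: during a spike smoothed latency lags behind the load,
        # while queue depth reflects it immediately.
        return min(eligible, key=lambda n: eligible[n].queue_depth)
    return min(eligible, key=lambda n: eligible[n].latency_ms)
```

Usage limits are applied first in both modes, so a model over quota is never selected even if it is the fastest.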

Real-Time Metrics for Decision-Making

The success of dynamic load balancing lies in monitoring critical metrics that reflect the system's health and performance. Metrics like GPU utilization, throughput, and response latency are essential for identifying overloaded instances and redistributing traffic as needed.

Tracking error rates is equally important. A sudden increase in errors from a specific model could indicate hardware malfunctions, memory issues, or other problems that need immediate resolution. Additionally, keeping an eye on model-specific usage limits helps prevent interruptions by ensuring usage stays within allowable thresholds.
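A sudden increase in errors is easiest to spot as an error rate over a sliding time window rather than a lifetime total. The sketch below keeps one window per model; the 60-second default and the injectable `clock` (used here to make the example testable) are assumptions.

```python
from __future__ import annotations

import time
from collections import deque


class ErrorRateWindow:
    """Error rate of one model over a sliding time window."""

    def __init__(self, window_s: float = 60.0, clock=time.monotonic) -> None:
        self.window_s = window_s
        self.clock = clock
        self.events: deque[tuple[float, bool]] = deque()  # (timestamp, is_error)

    def record(self, is_error: bool) -> None:
        self.events.append((self.clock(), is_error))
        self._evict()

    def _evict(self) -> None:
        # Drop events older than the window.
        cutoff = self.clock() - self.window_s
        while self.events and self.events[0][0] < cutoff:
            self.events.popleft()

    def rate(self) -> float:
        self._evict()
        if not self.events:
            return 0.0
        return sum(1 for _, err in self.events if err) / len(self.events)
```

Comparing `rate()` against a per-model threshold gives a simple signal for flagging a model or removing it from rotation.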

A great example is the SkyLB cross-region load balancer, which demonstrates the power of real-time metrics. By analyzing traffic patterns and adjusting routing strategies dynamically, it achieved 1.12–2.06× higher throughput and 1.74–6.30× lower latency compared to traditional load balancers. These improvements highlight the importance of adaptive decision-making over static rules.

Modern dashboards further enhance these capabilities by providing operators with real-time visibility into system performance. These tools enable quick responses to changing conditions and allow centralized monitoring of all instances, making traffic distribution and resource allocation more informed and efficient.

Step-by-Step Guide to Implementing Real-Time Traffic Monitoring

Preparing Your Infrastructure

Getting started with real-time traffic monitoring for LLM load balancing requires laying a solid groundwork. At its core, your infrastructure needs three essential components: metrics collection tools, monitoring systems, and integration layers for your LLM endpoints.

Start by deploying metrics collection tools such as Prometheus, which scrapes metrics endpoints, or the OpenTelemetry SDKs and Collector across your system. These tools gather the data needed for routing decisions. Make sure your LLM endpoints expose relevant metrics - whether in a standardized exposition format like OpenMetrics or through custom REST endpoints - so they can connect seamlessly to your monitoring dashboards and alerting systems.
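Prometheus collects metrics by scraping an HTTP endpoint (conventionally `/metrics`) that returns plain text in its exposition format. The stdlib-only sketch below renders a request counter and a latency summary in that format so the shape of the data is visible. The metric names `llm_requests_total` and `llm_request_latency_seconds` are hypothetical, the HTTP server is omitted, and a real service would normally use the official `prometheus_client` library or an OpenTelemetry SDK rather than hand-rolling this.

```python
from __future__ import annotations

from collections import defaultdict


class MetricsRegistry:
    """Tiny in-process registry rendered in the Prometheus text format.

    Illustrative only: assumes label values contain no quotes or
    backslashes, so no escaping is performed.
    """

    def __init__(self) -> None:
        self.requests = defaultdict(int)        # (model, status) -> count
        self.latency_sum = defaultdict(float)   # model -> total seconds
        self.latency_count = defaultdict(int)   # model -> observations

    def observe(self, model: str, status: str, latency_s: float) -> None:
        self.requests[(model, status)] += 1
        self.latency_sum[model] += latency_s
        self.latency_count[model] += 1

    def render(self) -> str:
        lines = [
            "# HELP llm_requests_total LLM requests by model and status.",
            "# TYPE llm_requests_total counter",
        ]
        for (model, status), n in sorted(self.requests.items()):
            lines.append(f'llm_requests_total{{model="{model}",status="{status}"}} {n}')
        lines += [
            "# HELP llm_request_latency_seconds LLM request latency.",
            "# TYPE llm_request_latency_seconds summary",
        ]
        for model in sorted(self.latency_count):
            lines.append(f'llm_request_latency_seconds_sum{{model="{model}"}} {self.latency_sum[model]}')
            lines.append(f'llm_request_latency_seconds_count{{model="{model}"}} {self.latency_count[model]}')
        return "\n".join(lines) + "\n"
```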

Your monitoring system should be capable of ingesting and displaying this data in real time. Using API gateways or service meshes as integration layers between your LLM endpoints and monitoring tools can help capture and process traffic data as it flows through the system.

Finally, ensure smooth communication across all components. Metrics collection tools must capture key LLM metrics, enabling precise monitoring and automated adjustments to traffic changes. Once this foundation is in place, you're ready to move on to instrumenting your endpoints for real-time monitoring.

Setting Up Real-Time Monitoring

With the infrastructure in place, the next step is to instrument your LLM endpoints and configure dashboards for live tracking. Add agents to log critical metrics such as response times, error codes, and resource usage.
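Endpoint instrumentation can be as simple as wrapping each model call so that its latency and outcome are always logged, including when the call raises. This is a minimal sketch: the record fields are hypothetical, and the `sink` list stands in for whatever logger or metrics pipeline you actually use.

```python
from __future__ import annotations

import json
import time
from contextlib import contextmanager


@contextmanager
def track_request(model: str, sink: list[str]):
    """Time one LLM call and emit a JSON log line with latency and outcome."""
    record = {"model": model, "status": "ok", "error_type": None}
    start = time.perf_counter()
    try:
        yield record  # the caller may add fields, e.g. token counts
    except Exception as exc:
        record["status"] = "error"
        record["error_type"] = type(exc).__name__
        raise  # never swallow the original error
    finally:
        record["latency_ms"] = round((time.perf_counter() - start) * 1000, 2)
        sink.append(json.dumps(record))
```

Usage: `with track_request("model-a", logs) as rec: rec["output_tokens"] = n`. Because the log line is written in `finally`, failed calls are recorded with their latency too, which is exactly what error-rate and tail-latency tracking need.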

Define per-model constraints using YAML files - for example, set limits on tokens-per-minute or specify acceptable failure thresholds. Use these constraints to create routing rules that automatically adjust traffic based on latency or usage levels.
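Once the YAML is loaded (for example with a YAML parser such as PyYAML), each model's entry can be mapped onto a small typed structure and checked against live metrics. The field names below (`tokens_per_minute`, `max_error_rate`, `max_p95_latency_ms`) and the limits are hypothetical, not a standard schema.

```python
from __future__ import annotations

from dataclasses import dataclass

# Mirrors a hypothetical YAML entry such as:
#   model-a:
#     tokens_per_minute: 90000
#     max_error_rate: 0.05
#     max_p95_latency_ms: 2000


@dataclass(frozen=True)
class ModelConstraints:
    tokens_per_minute: int
    max_error_rate: float
    max_p95_latency_ms: float


@dataclass(frozen=True)
class LiveMetrics:
    tokens_last_minute: int
    error_rate: float
    p95_latency_ms: float


def violations(c: ModelConstraints, m: LiveMetrics) -> list[str]:
    """Return the constraints a model currently breaks (empty list = routable)."""
    broken = []
    if m.tokens_last_minute >= c.tokens_per_minute:
        broken.append("tokens_per_minute")
    if m.error_rate > c.max_error_rate:
        broken.append("max_error_rate")
    if m.p95_latency_ms > c.max_p95_latency_ms:
        broken.append("max_p95_latency_ms")
    return broken
```

A routing rule can then drop any model with a non-empty violation list, and the returned names make it clear in logs why a model was taken out of rotation.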

Set up dashboards with tools like Grafana or Kibana to visualize these metrics. These dashboards should display aggregated data, including active requests, average and tail latencies, error rates, and model utilization. They should also allow you to drill down by model, endpoint, or time window to quickly identify and resolve issues.
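Dashboards usually show both average and tail latency because the mean can hide a slow tail. The sketch below computes the mean and p50/p95/p99 from raw samples using the nearest-rank percentile definition; tools like Prometheus or Grafana typically estimate percentiles from histograms instead, so their numbers may differ slightly.

```python
from __future__ import annotations

import math


def latency_summary(samples_ms: list[float]) -> dict[str, float]:
    """Mean plus p50/p95/p99 using the nearest-rank percentile definition."""
    if not samples_ms:
        raise ValueError("no samples")
    ordered = sorted(samples_ms)

    def pct(p: float) -> float:
        # Nearest rank: smallest value with at least p% of samples at or below it.
        rank = math.ceil(p * len(ordered) / 100)
        return ordered[max(rank, 1) - 1]

    return {
        "mean": sum(ordered) / len(ordered),
        "p50": pct(50),
        "p95": pct(95),
        "p99": pct(99),
    }
```

For 98 requests at 100 ms plus two at 2,000 ms and 5,000 ms, the mean is 168 ms and p95 is 100 ms, but p99 is 2,000 ms: only the p99 line would reveal that some users are waiting seconds.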

"Built-in run tracking, error insights. Designed for fast debugging & iteration." - Latitude

Incorporate automated health checks and failover mechanisms into your endpoint instrumentation. These features detect issues early and enable the system to adapt automatically to changing conditions, minimizing disruptions for users.
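A common pattern for health checks, assumed here, is to mark a model unhealthy only after several consecutive failed checks and to restore it only after several consecutive successes, then fail over along a priority list. The thresholds and model names below are illustrative.

```python
from __future__ import annotations


class HealthTracker:
    """Consecutive-failure health tracking with ordered failover."""

    def __init__(self, models: list[str], fail_after: int = 3, recover_after: int = 2) -> None:
        self.order = list(models)  # failover priority, most preferred first
        self.fail_after = fail_after
        self.recover_after = recover_after
        self.healthy = {m: True for m in models}
        self._fails = {m: 0 for m in models}
        self._oks = {m: 0 for m in models}

    def report(self, model: str, ok: bool) -> None:
        """Feed in the result of one health check."""
        if ok:
            self._fails[model] = 0
            self._oks[model] += 1
            if not self.healthy[model] and self._oks[model] >= self.recover_after:
                self.healthy[model] = True
        else:
            self._oks[model] = 0
            self._fails[model] += 1
            if self._fails[model] >= self.fail_after:
                self.healthy[model] = False

    def route(self) -> str:
        """Return the highest-priority healthy model."""
        for m in self.order:
            if self.healthy[m]:
                return m
        raise RuntimeError("no healthy model available")
```

Requiring consecutive results in both directions keeps a single dropped check from triggering a failover, and keeps a flapping model from being restored too early.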

Integrating Traffic Data with Load Balancers

The final step is integrating real-time metrics into your load balancing system. Feed live traffic and performance data into the load balancer through APIs or plugins that dynamically update routing rules based on the latest metrics.
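One way to turn live metrics into routing rules, assumed here for illustration, is to recompute each model's traffic share in proportion to the inverse of its recent p95 latency and give unhealthy models zero. Inverse-latency weighting is just one simple choice, and how the weights are pushed to your load balancer depends on its configuration API, which is not shown.

```python
from __future__ import annotations


def weights_from_metrics(p95_ms: dict[str, float], healthy: dict[str, bool],
                         total: int = 100) -> dict[str, int]:
    """Share `total` among healthy models in proportion to 1 / p95 latency.

    Shares are rounded, so they may not sum exactly to `total`.
    """
    scores = {m: 1.0 / lat for m, lat in p95_ms.items() if healthy.get(m) and lat > 0}
    if not scores:
        raise RuntimeError("no healthy model with latency data")
    norm = sum(scores.values())
    weights = {m: 0 for m in p95_ms}  # unhealthy or unmeasured models get 0
    for m, s in scores.items():
        weights[m] = round(total * s / norm)
    return weights
```

For instance, with p95 latencies of 100 ms and 300 ms for two healthy models and a third model marked unhealthy, the shares come out to 75, 25, and 0.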

Configure the load balancer to redirect traffic away from overloaded or failing models. Use latency-based routing to send requests to the fastest-responding models, or usage-based routing to reroute traffic when a model is nearing its quota. For testing new models, set up weighted canary rollouts - such as directing 90% of traffic to the primary model and 10% to the test model - to ensure stability while validating updates.
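A 90/10 canary split can be implemented by hashing a stable key such as a user or session ID into 100 buckets, so the same user consistently lands on the same variant. This sketch uses SHA-256 from the standard library; the `"canary"`/`"primary"` labels and the choice of key are assumptions.

```python
import hashlib


def assign_variant(request_key: str, canary_percent: int = 10) -> str:
    """Deterministically send about canary_percent% of keys to the canary model."""
    if not 0 <= canary_percent <= 100:
        raise ValueError("canary_percent must be between 0 and 100")
    digest = hashlib.sha256(request_key.encode("utf-8")).digest()
    bucket = int.from_bytes(digest[:8], "big") % 100  # bucket in 0..99
    return "canary" if bucket < canary_percent else "primary"
```

Sticky assignment makes before/after comparisons cleaner than random per-request sampling, and raising `canary_percent` step by step widens the rollout without reshuffling users already on the canary.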

Leverage live metrics for predictive scaling. Pair the load balancer with an autoscaler that provisions additional model replicas or reallocates resources as traffic starts to climb, rather than after queues have already built up, so performance stays consistent even during high-demand periods.
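The arithmetic behind a simple predictive scaler is: forecast the next interval's request rate, add headroom, and divide by what one replica can sustain. The sketch uses naive one-step linear extrapolation as the forecast; that choice, the `headroom` multiplier, and `rps_per_replica` (which you would measure for your own models) are assumptions, and real autoscalers often use smoother or seasonality-aware forecasts.

```python
from __future__ import annotations

import math


def replicas_needed(recent_rps: list[float], rps_per_replica: float,
                    headroom: float = 1.2, min_replicas: int = 1) -> int:
    """Size the replica count from a naive forecast of next-interval load.

    recent_rps: requests/second over the last few intervals, oldest first.
    rps_per_replica: sustainable throughput of one replica.
    headroom: multiplier that leaves spare capacity for bursts.
    """
    if rps_per_replica <= 0:
        raise ValueError("rps_per_replica must be positive")
    if len(recent_rps) >= 2:
        trend = recent_rps[-1] - recent_rps[-2]  # one-step slope
        forecast = max(recent_rps[-1] + trend, 0.0)
    elif recent_rps:
        forecast = recent_rps[-1]
    else:
        forecast = 0.0
    return max(min_replicas, math.ceil(forecast * headroom / rps_per_replica))
```

With load rising 10, 20, 30 requests/second, the forecast is 40; with 1.5× headroom and 8 requests/second per replica, that calls for 8 replicas before the next interval arrives.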

Address common challenges like data latency, system complexity, and false-positive alerts by optimizing data pipelines for minimal delay, using standardized interfaces like OpenMetrics, and fine-tuning alert thresholds. Research highlights that latency-aware routing can cut p95 latency by over 30% during sudden traffic bursts, improving both user experience and SLA compliance.

Advantages and Limitations of Real-Time Monitoring-Driven Load Balancing

Benefits of Real-Time Monitoring

Real-time monitoring goes beyond just tracking metrics - it actively improves system performance. One of the standout benefits is reduced latency. For example, research on vLLM, a high-performance inference engine, shows that latency-aware routing based on real-time metrics can cut p95 latency by over 30% during bursty workloads. That’s a significant improvement for systems handling unpredictable traffic.

System reliability also gets a major boost through automatic failover. When real-time monitoring detects a failing or underperforming model, traffic is immediately redirected to healthier alternatives. This quick response helps the system meet the stringent SLA requirements that modern AI applications depend on.

Cost efficiency is another advantage. Real-time systems can route simpler queries to faster, less expensive models while saving more complex tasks for high-capability models. This approach helps organizations balance performance needs with budget constraints.
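Deciding which queries count as "simpler" is the hard part. Production systems often use a trained classifier for this; the sketch below uses deliberately crude, hypothetical heuristics (prompt length and a few keyword markers) only to show where such a decision plugs into routing. The tier names are placeholders.

```python
from __future__ import annotations

# Hypothetical markers of harder tasks; substring matching is crude
# (e.g. "prove" also matches "improve"), so treat this as a placeholder.
HARD_MARKERS = ("step by step", "prove", "refactor", "write code", "analyze")


def choose_tier(prompt: str, max_cheap_chars: int = 2000) -> str:
    """Send short, simple prompts to a low-cost tier; the rest to a strong model."""
    text = prompt.lower()
    if len(prompt) > max_cheap_chars or any(k in text for k in HARD_MARKERS):
        return "high-capability"
    return "low-cost"
```

Whatever the classifier, it is worth logging its decisions next to quality metrics, so misrouted queries (cheap answers to hard questions) show up in monitoring.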

Additionally, real-time monitoring enables systems to adapt to sudden traffic surges. By continuously tracking metrics like response times, error rates, and model health, these systems can maintain SLA compliance even during unexpected load spikes. Unlike static methods, which might struggle under such conditions, real-time solutions adjust continuously as conditions change.

However, achieving these benefits isn’t without its challenges.

Challenges and Trade-Offs

Real-time monitoring introduces a layer of complexity to system architecture. It requires extra components for data collection, processing, and decision-making, which can increase the risk of failures. Teams also need specialized skills to manage and fine-tune these systems effectively.

The process itself consumes additional resources. Constant metric collection and transmission can strain compute and network capacities, especially in high-throughput environments. Beyond that, there are infrastructure costs for running monitoring agents, maintaining data pipelines, and dedicating engineering time to develop and refine monitoring logic.

Another issue is the risk of false alerts. Noisy data or temporary anomalies can trigger unnecessary failovers or traffic shifts, sometimes causing more harm than the initial issue. To address this, teams need to carefully tune alert thresholds and apply techniques to smooth out transient fluctuations.
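One common way to suppress false alerts is to smooth the metric with an EWMA and use hysteresis: the alert fires above one threshold and clears only below a lower one. The thresholds and `alpha` below are illustrative, not recommended values.

```python
from __future__ import annotations


class HysteresisAlert:
    """EWMA-smoothed alert with separate fire and clear thresholds.

    Firing requires the smoothed value to exceed `fire_above`; clearing
    requires it to drop below the lower `clear_below`, so a value hovering
    near one threshold does not flap between states.
    """

    def __init__(self, fire_above: float, clear_below: float, alpha: float = 0.3) -> None:
        if clear_below >= fire_above:
            raise ValueError("clear_below must be lower than fire_above")
        self.fire_above = fire_above
        self.clear_below = clear_below
        self.alpha = alpha
        self.smoothed: float | None = None
        self.firing = False

    def update(self, value: float) -> bool:
        """Feed one raw sample; return whether the alert is currently firing."""
        self.smoothed = value if self.smoothed is None else (
            self.alpha * value + (1 - self.alpha) * self.smoothed
        )
        if not self.firing and self.smoothed > self.fire_above:
            self.firing = True
        elif self.firing and self.smoothed < self.clear_below:
            self.firing = False
        return self.firing
```

With an error-rate alert set to fire above 5% and clear below 2% (`alpha=0.1`), a single 20% spike from a 1% baseline does not fire, a sustained 20% error rate fires after a few samples, and the alert then stays active while errors hover around 3%, clearing only once they fall well below 2%.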

Finally, ongoing maintenance adds to the workload. Regular testing of failover paths, monitoring system health, and optimizing routing rules require consistent effort and expertise. Many teams underestimate this operational burden during the planning stages. That said, the performance improvements can make these challenges worthwhile.

Comparison Table: Real-Time Monitoring vs. Static Load Balancing

Here’s a side-by-side look at how real-time monitoring compares to static load balancing:

| Feature | Real-Time Monitoring | Static Load Balancing |
|---|---|---|
| Latency Performance | Lower, adapts to traffic spikes | Higher, creates bottlenecks during surges |
| System Reliability | High with automatic failover | Lower, vulnerable to single points of failure |
| Cost Efficiency | Routes to cost-effective models | Often leads to suboptimal resource use |
| Implementation Complexity | Higher, requires monitoring infrastructure | Lower, simpler to implement and maintain |
| Resource Utilization | Higher, reduces idle capacity | May leave resources underused |
| SLA Compliance | Easier to maintain under dynamic conditions | Harder to guarantee under variable load |
| Monitoring Overhead | Significant due to real-time data needs | Minimal monitoring requirements |
| False Alert Risk | Possible due to noisy metrics | Rare |

This comparison highlights the trade-off between the adaptability and efficiency of real-time monitoring and the simplicity of static load balancing. While real-time solutions deliver better performance and flexibility, they require a larger investment in infrastructure, expertise, and ongoing maintenance.

Using Latitude for Collaborative LLM Load Balancing

Introduction to Latitude

Latitude is an open-source platform designed to help domain experts and engineers work together on developing and managing production-grade LLM features. It emphasizes teamwork and transparent workflows, which makes it especially appealing to US-based teams that often need to customize their infrastructure while staying compliant with local data privacy regulations.

Its collaboration model rests on a division of responsibilities: domain experts focus on defining business logic and crafting prompt strategies, while engineers handle the implementation and fine-tuning of load balancing rules. Separating these responsibilities reduces miscommunication and speeds up iteration cycles, which matters given the complexity of LLM deployments. This collaborative framework underpins its real-time load balancing capabilities.

Using Latitude for Real-Time Monitoring and Load Balancing

Latitude takes real-time monitoring to the next level by integrating tools that simplify load balancing. It offers dashboards that provide a clear view of key metrics like request volume, latency, error rates, and model health. These tools allow for dynamic routing, automatically directing traffic to the best-performing LLM instances based on current traffic conditions and pre-set rules.

The platform supports configurable routing strategies, including weight-based and latency-based routing, with automatic failover capabilities. For instance, a US-based team might route most traffic to cost-efficient models during regular business hours (9:00 AM to 5:00 PM ET) and shift to higher-capacity models during peak periods or when latency exceeds 600 ms.

Latitude’s shared workspace fosters real-time collaboration among team members. When performance dashboards highlight issues, domain experts and engineers can work together to troubleshoot and update routing configurations. Features like version control and commenting ensure that changes are well-documented and can be easily rolled back if needed.

The real-time dashboards also provide detailed metrics such as request throughput, average and tail latency (e.g., p95/p99), error rates, and model-specific usage. Visual aids like time-series graphs and heatmaps make it easy to spot bottlenecks, while an alert system notifies the right team members when anomalies occur. This level of visibility is essential for quick responses, helping teams maintain high availability and meet strict service-level agreements.

Community Resources for US Teams

Latitude goes beyond its technical features by offering extensive support tailored to US-based teams. Its documentation adheres to US conventions for dates, currency, and measurements, making it straightforward for local users.

The platform’s GitHub repositories are packed with example configurations and templates for common load balancing scenarios. These resources include ready-made setups for industries like healthcare, finance, and customer service - sectors where dynamic load balancing must meet strict compliance and performance standards.

Latitude also boasts an active Slack community where users can connect with peers and the platform’s development team. US-based users frequently share troubleshooting tips, best practices, and real-world insights. This community-driven support proves invaluable for tackling challenges like managing cold starts during model scaling or fine-tuning routing rules for diverse workloads.

Experienced users have observed tangible benefits from Latitude’s collaborative approach, including faster deployment times, fewer operational errors, and more reliable services. This makes it a practical choice for teams looking to optimize their LLM deployments while maintaining high performance and compliance.

Conclusion

Real-time traffic monitoring has reshaped how LLM load balancing operates, turning it into a system that actively adapts to changing demands. By keeping a close eye on model health, latency, and throughput, teams can implement dynamic routing strategies that have been shown to reduce p95 latency by over 30% during bursty workloads and that support high availability targets such as 99.9% uptime.

Shifting from static to dynamic, real-time load balancing brings tangible improvements across key areas like latency, reliability, and cost management. Teams benefit from smarter routing decisions that control costs, automated failover mechanisms that enhance fault tolerance, and consistent response times that ensure a better user experience - even during heavy traffic spikes. That said, these advantages come with their own set of operational challenges.

Implementing real-time monitoring introduces added complexity. It demands careful configuration, fine-tuning thresholds based on real-world traffic patterns, and addressing hurdles like cold starts when scaling new model instances. These challenges require a thoughtful approach and significant investment in system optimization.

To navigate these complexities, platforms like Latitude offer collaborative tools designed to simplify the process. Real-time monitoring becomes more accessible when engineers and domain experts can work together seamlessly. Latitude’s open-source framework and focus on production-ready LLM features empower teams to adopt advanced monitoring and routing strategies with confidence, backed by a supportive community.

For US-based teams, real-time load balancing isn’t just a technical upgrade - it’s a necessity for running scalable and dependable LLM operations. Combining intelligent routing, automated failover, and collaborative tools lays the groundwork for meeting high performance demands while staying cost-effective and adhering to regulatory standards.

FAQs

How does real-time traffic monitoring improve load balancing for large language models?

Real-time traffic monitoring plays a key role in improving load balancing for large language models (LLMs). It allows systems to adjust dynamically to shifts in traffic patterns, moving away from older methods that often depend on static setups or slow-to-update configurations. This means resources can be distributed more efficiently as demand fluctuates.

By constantly analyzing incoming traffic, real-time monitoring helps reduce latency, avoids overloading, and keeps LLM applications running smoothly. Tools like Latitude make this process even more effective, offering teams the ability to build and manage production-level LLM features with improved accuracy and collaboration.

What challenges arise when implementing real-time traffic monitoring for LLM load balancing, and how can they be solved?

Implementing real-time traffic monitoring for load balancing in large language model (LLM) applications isn’t exactly a walk in the park. It demands precise data collection, ultra-fast processing, and a scalable setup. Why? Because the traffic patterns in LLM applications can be wildly unpredictable, and keeping everything running smoothly requires constant, quick adjustments.

To tackle these challenges, it’s smart to lean on tools designed for real-time analytics and automated decision-making. For instance, monitoring systems that track metrics like latency, throughput, and server load can quickly pinpoint problem areas. Pair that with dynamic load-balancing algorithms, and you’ll have a system that spreads traffic efficiently across servers, cutting down on downtime and speeding up response times.

Platforms such as Latitude can make this process even easier. By fostering collaboration between domain experts and engineers, they help speed up the rollout of production-ready LLM features.

How can teams keep real-time traffic monitoring systems efficient and reliable without adding unnecessary complexity or overusing resources?

To keep real-time traffic monitoring systems running smoothly and efficiently without making them overly complicated, teams can focus on a few key approaches:

- Keep designs straightforward: Opt for modular, scalable system architectures that are easy to update and maintain. Avoid adding unnecessary features that don’t directly solve critical problems.

- Automate repetitive tasks: Automate processes like data collection and anomaly detection to minimize manual work and save resources.

- Track performance regularly: Monitor system performance and resource usage continuously to spot and address potential bottlenecks or inefficiencies early on.

Striking the right balance between functionality and simplicity helps ensure these systems stay reliable and cost-effective over time.