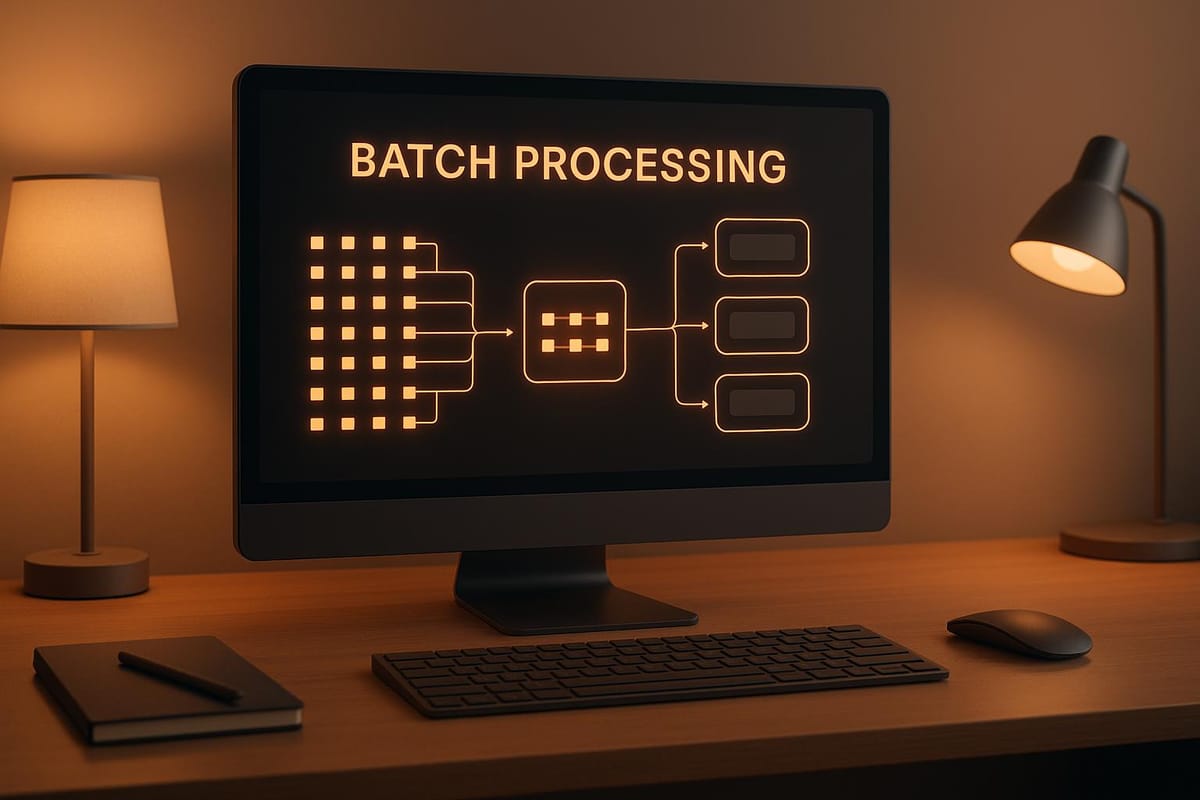

How to Optimize Batch Processing for LLMs

Explore effective strategies for optimizing batch processing in large language models to enhance throughput and resource utilization.

Batch processing is essential for running large language models (LLMs) efficiently, especially when handling multiple requests. By grouping tasks into batches, you can improve throughput, reduce costs, and make better use of GPU resources. However, challenges like memory constraints, variable sequence lengths, and latency trade-offs need careful management. Here’s what you need to know:

- Static Batching: Processes fixed-size batches, best for predictable workloads but may waste resources due to padding.

- Dynamic Batching: Adjusts batch size in real-time, balancing throughput and latency for fluctuating traffic.

- Continuous Batching: Adds/removes requests dynamically, ideal for real-time systems but requires advanced scheduling.

Optimization Techniques:

- Prompt Prefix Sharing: Reuse common prompt parts to save memory.

- Token-Level Scheduling: Dynamically manage tokens to maximize GPU usage.

- Memory-Based Batching: Batch requests based on memory usage to avoid overloading GPUs.

Each method has trade-offs. Static batching is simple but less efficient, while dynamic and continuous batching offer better performance at the cost of complexity. Choose based on your workload and system needs.

Batch Processing Methods for LLM Inference

Selecting the right batching method is a key decision that influences the performance of your LLM system. Each approach processes requests differently, impacting throughput, latency, and resource efficiency. Below, we explore three primary batching methods to help you determine the most suitable strategy for your workload and performance needs.

Static Batching

Static batching is the simplest approach to batch processing. Here, the system collects a fixed number of requests or waits for a set time window before processing all requests together. Once the batch is formed, it remains unchanged throughout the inference process.

This method is ideal for workloads with predictable and consistent request patterns. Its straightforward design makes it easy to implement and debug, with minimal complexity. You can also plan capacity more effectively since batch sizes and processing times are fixed.

However, static batching has its downsides. It may lead to wasted resources due to padding, especially when requests have varying prompt lengths. Additionally, it introduces delays for individual requests, as the first request in a batch has to wait for others to arrive before processing begins.
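To make the padding cost concrete, here is a minimal sketch that counts useful versus padded token slots when fixed-size batches are padded to their longest prompt. The function name `padding_waste` and the sample prompt lengths are illustrative assumptions, not measurements from a real system.

```python
def padding_waste(prompt_lengths, batch_size):
    """Return (useful_tokens, padded_tokens) for fixed-size batches.

    Assumes every prompt in a batch is padded to that batch's longest prompt.
    """
    useful = 0
    padded = 0
    for start in range(0, len(prompt_lengths), batch_size):
        batch = prompt_lengths[start:start + batch_size]
        longest = max(batch)
        useful += sum(batch)
        padded += longest * len(batch) - sum(batch)
    return useful, padded


# Hypothetical prompt lengths: mostly short, with two long outliers.
lengths = [12, 480, 35, 60, 510, 20, 44, 38]
useful, padded = padding_waste(lengths, batch_size=4)
waste_ratio = padded / (useful + padded)
print(f"useful={useful} padded={padded} waste={waste_ratio:.0%}")
```

With these made-up lengths, one long prompt per batch is enough to make most of the token slots padding. Sorting or bucketing requests by length before batching is a common way to reduce this.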

Static batching is best suited for offline tasks where low latency isn’t a priority. Examples include bulk document analysis, dataset preprocessing, and batch translation. It’s also a good fit for applications with steady traffic and predictable workloads.

Dynamic Batching

Dynamic batching adjusts batch formation in real time, adapting to current system load, queue length, and timing constraints. Instead of sticking to fixed batch sizes, it balances throughput and latency by dynamically optimizing batch composition.

With this method, batches are processed when they reach a size or time threshold, or when certain efficiency criteria are met. This flexibility allows dynamic batching to handle traffic spikes more effectively than static methods. During busy periods, batches fill up and process quickly, while during slower times, smaller batches ensure that response times remain reasonable.
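The size-or-time rule described above can be sketched as a small queue that releases a batch when either threshold is reached. This is a simplified illustration: the class name `DynamicBatcher` and the parameters `max_batch_size` and `max_wait_s` are assumptions made for this example, and the clock is passed in explicitly so the behavior is easy to follow.

```python
from collections import deque


class DynamicBatcher:
    """Toy dynamic batcher: flush on batch size or on oldest-request wait time."""

    def __init__(self, max_batch_size, max_wait_s):
        self.max_batch_size = max_batch_size
        self.max_wait_s = max_wait_s
        self._queue = deque()  # holds (arrival_time, request) pairs

    def submit(self, request, now):
        self._queue.append((now, request))

    def poll(self, now):
        """Return a batch if a flush condition holds, otherwise None."""
        if not self._queue:
            return None
        oldest_arrival = self._queue[0][0]
        full = len(self._queue) >= self.max_batch_size
        timed_out = now - oldest_arrival >= self.max_wait_s
        if not (full or timed_out):
            return None
        size = min(self.max_batch_size, len(self._queue))
        return [self._queue.popleft()[1] for _ in range(size)]


batcher = DynamicBatcher(max_batch_size=3, max_wait_s=0.05)

# Quiet period: two requests trickle in, so the time threshold triggers the flush.
batcher.submit("a", now=0.00)
batcher.submit("b", now=0.01)
early = batcher.poll(now=0.02)  # neither threshold met yet
late = batcher.poll(now=0.06)   # oldest request has waited at least 50 ms

# Busy period: four requests arrive at once, so the size threshold triggers it.
for name in ["c", "d", "e", "f"]:
    batcher.submit(name, now=0.07)
burst = batcher.poll(now=0.07)
```

In a real server the `poll` call would run in a loop or timer alongside the request handler. The wait threshold caps how long a request can sit in the queue, and the size threshold caps how large a batch can grow.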

While dynamic batching introduces additional complexity, such as real-time scheduling and queue management, the performance improvements often outweigh the effort. This method is well-suited for production environments with fluctuating traffic, such as customer-facing chatbots, content generation APIs, and interactive applications where user experience is critical.

Continuous Batching

Continuous batching takes batch processing a step further by dynamically adding and removing requests from active batches as they complete. Unlike traditional methods, it doesn’t wait for an entire batch to finish before starting new requests. Instead, it replaces completed requests immediately, maintaining high GPU utilization.

This approach is particularly effective for variable-length outputs, such as text generation. Some requests may produce only a few tokens, while others generate significantly more. Continuous batching adapts in real time to these differences, ensuring efficient resource use regardless of output length.

Key to this method is token-level scheduling, where decisions are made after each token generation step. The system determines which sequences should continue, which should finish, and when to add new requests. This level of control maximizes throughput without compromising response quality.
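The following toy simulation illustrates the idea under simplifying assumptions: each active request produces one token per step, prefill cost is ignored, and a fixed number of slots stands in for GPU capacity. The function names and the request list are hypothetical. It compares the number of decode steps needed with and without refilling freed slots.

```python
from collections import deque


def continuous_batching(requests, max_slots):
    """Simulate per-step scheduling.

    requests: list of (request_id, tokens_to_generate), each count >= 1.
    Returns (total_steps, {request_id: step_at_which_it_finished}).
    """
    waiting = deque(requests)
    active = {}  # request_id -> tokens still to generate
    finish_step = {}
    step = 0
    while waiting or active:
        # Refill any free slots before the next decode step.
        while waiting and len(active) < max_slots:
            request_id, tokens = waiting.popleft()
            active[request_id] = tokens
        step += 1
        # One decode step: every active request emits one token.
        for request_id in list(active):
            active[request_id] -= 1
            if active[request_id] == 0:
                del active[request_id]
                finish_step[request_id] = step
    return step, finish_step


def static_batching_steps(requests, batch_size):
    """Each fixed batch runs until its longest request finishes."""
    total = 0
    for start in range(0, len(requests), batch_size):
        total += max(tokens for _, tokens in requests[start:start + batch_size])
    return total


requests = [("a", 2), ("b", 6), ("c", 3), ("d", 1)]
steps, finished = continuous_batching(requests, max_slots=2)
static_steps = static_batching_steps(requests, batch_size=2)
print(steps, static_steps, finished)
```

In this example the slot freed by the short request "a" is handed to "c" while "b" is still generating, which is why the continuous schedule needs fewer steps than running two fixed batches back to back.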

Continuous batching is especially beneficial for conversational AI systems, code generation tools, and creative writing platforms, where output lengths can vary widely. Although it requires advanced memory management and precise scheduling, the performance gains, particularly in GPU utilization, make it a preferred choice for organizations looking to scale their LLM operations efficiently.

While its implementation is more complex than static or dynamic methods, continuous batching delivers substantial benefits in production environments where hardware efficiency directly affects operational costs.

Batch Processing Optimization Techniques

Once you've chosen a batching method, the next step is to implement strategies to minimize computational overhead and make memory usage more efficient. These techniques build on the batching methods already discussed, helping you fine-tune your system for better performance.

Prompt Prefix Sharing

When multiple requests share similar prompt beginnings, prefix sharing can help reduce redundant computations. This technique groups requests with common prefixes and reuses the key-value cache memory already computed for the shared portion.

Take the example of a customer service chatbot where every request starts with the same system prompt: "You are a helpful customer service representative for TechCorp. Always be polite and professional." Instead of processing this identical prefix for every request, the system calculates it once and shares the resulting key-value cache across all requests in the batch.

This saves memory, allowing you to handle larger batch sizes or longer conversations. For instance, if your system prompt contains 200 tokens and you’re processing 32 requests at once, storing the prefix separately for each request would take 6,400 tokens of cache. Sharing it keeps a single 200-token copy and eliminates the other 6,200.

To implement this effectively, robust cache management is essential. The system should track which tokens are shared and which are unique. When a request is completed, only the unique portions should be cleared from memory, while shared prefixes remain available for other requests.
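One way to picture this bookkeeping is a reference-counted store keyed by the prefix tokens. The sketch below is a toy illustration, not how any particular inference engine implements it: the class `PrefixCache`, its methods, and the string placeholder standing in for real key-value tensors are all assumptions made for this example.

```python
class PrefixCache:
    """Toy reference-counted store for shared prompt prefixes."""

    def __init__(self):
        self._entries = {}      # prefix tuple -> [kv_placeholder, refcount]
        self.prefill_calls = 0  # how many times a prefix was actually computed

    def acquire(self, prefix_tokens):
        key = tuple(prefix_tokens)
        if key not in self._entries:
            # Placeholder for running the model over the prefix once.
            self.prefill_calls += 1
            self._entries[key] = [f"kv[{len(key)} tokens]", 0]
        self._entries[key][1] += 1
        return self._entries[key][0]

    def release(self, prefix_tokens):
        key = tuple(prefix_tokens)
        entry = self._entries[key]
        entry[1] -= 1
        if entry[1] == 0:
            # Last user is gone. This sketch frees immediately; a real system
            # might keep the entry and evict it later (for example with LRU).
            del self._entries[key]

    def cached_tokens(self):
        return sum(len(key) for key in self._entries)


system_prompt = list(range(200))  # stand-in for 200 system-prompt token ids
cache = PrefixCache()

for _ in range(32):
    cache.acquire(system_prompt)

tokens_without_sharing = len(system_prompt) * 32  # one prefix copy per request
tokens_with_sharing = cache.cached_tokens()       # one shared copy
tokens_saved = tokens_without_sharing - tokens_with_sharing

for _ in range(32):
    cache.release(system_prompt)
tokens_after_release = cache.cached_tokens()
```

Only the shared prefix is tracked here. The unique part of each request would live in per-request storage that is freed as soon as that request completes.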

This method is especially useful for fine-tuned models where consistent system prompts are common, or for applications like code generation, where boilerplate instructions are frequently repeated. Next, let’s look at token-level strategies to further optimize performance.

Token Batching and Scheduling

Traditional batching groups entire requests together, but token-level batching takes optimization a step further by organizing individual tokens across multiple requests. This approach dynamically reorders and schedules tokens to keep GPU utilization high throughout the inference process.

Requests often vary in prompt length and generation requirements. For example, a request with a 50-token prompt will finish its input phase much sooner than one with a 500-token prompt. Token batching addresses this by interleaving tokens from requests at different stages of processing.

During the prefill phase, shorter prompts finish processing their input tokens first. Instead of leaving the GPU underused while waiting for longer prompts, the scheduler starts generating output tokens for the completed prompts right away, while the remaining input tokens of the longer prompts are processed in the same steps.

This requires sophisticated scheduling algorithms to track each request’s progress and dynamically allocate resources. These algorithms must balance fairness, memory efficiency, and throughput.
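As a rough illustration of such a scheduler, the sketch below plans each step under a fixed token budget: sequences that are already generating get one token each, and whatever budget remains is spent on prompt chunks. The names `Seq`, `schedule_step`, and `token_budget` are hypothetical, and the policy (decode first, then prefill in arrival order) is just one simple choice among many.

```python
from dataclasses import dataclass


@dataclass
class Seq:
    name: str
    prompt_left: int  # prompt tokens not yet processed
    decode_left: int  # output tokens still to generate


def schedule_step(seqs, token_budget):
    """Plan one step and return {sequence_name: tokens_processed}."""
    plan = {}
    budget = token_budget
    # Sequences in the generation phase need exactly one token each.
    for seq in seqs:
        if seq.prompt_left == 0 and seq.decode_left > 0 and budget > 0:
            plan[seq.name] = 1
            seq.decode_left -= 1
            budget -= 1
    # Spend the remaining budget on prompt chunks for sequences still in prefill.
    for seq in seqs:
        if seq.prompt_left > 0 and budget > 0:
            chunk = min(seq.prompt_left, budget)
            plan[seq.name] = chunk
            seq.prompt_left -= chunk
            budget -= chunk
    return plan


# A 50-token prompt and a 500-token prompt, each generating 3 output tokens.
seqs = [Seq("short", 50, 3), Seq("long", 500, 3)]
plans = []
while any(seq.prompt_left or seq.decode_left for seq in seqs):
    plans.append(schedule_step(seqs, token_budget=256))

for step, plan in enumerate(plans, start=1):
    print(step, plan)
```

From the second step onward, the short request is generating output while the long prompt is still being read in, so no step is spent waiting on the longer prompt alone. A production scheduler would also weigh fairness and memory pressure, which this sketch ignores.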

Token batching works particularly well when combined with attention mechanisms that handle both prefill and generation phases simultaneously. Together, they maximize hardware utilization across diverse workloads.

Memory-Based Batching

Instead of batching requests based solely on their count, memory-based batching uses actual key-value cache memory consumption as the primary criterion. This keeps memory usage close to capacity while avoiding out-of-memory errors that could crash the server.

The memory footprint of a request depends on factors like prompt length, generation limits, model size, and precision. For instance, a batch of 16 short requests might use less memory than a batch of 4 long requests. Memory-based batching calculates the total memory requirement for each batch, ensuring it fits within the available GPU capacity.

A good rule of thumb is to keep memory usage at 80-90% of GPU capacity, leaving some room for temporary allocations. To do this, the system must accurately estimate how much memory each request will consume based on its parameters. Overestimating memory needs can lead to underutilized hardware, while underestimating risks memory overflow.
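A minimal sketch of this estimate-then-admit logic follows. It uses the standard key-value cache size for a transformer (two tensors per layer, each with `num_kv_heads * head_dim` values per token) and reserves memory for the worst case of prompt length plus generation limit. The model shape, the 8 GiB cache pool, and the 85% target are hypothetical values chosen for illustration.

```python
def kv_bytes_per_token(num_layers, num_kv_heads, head_dim, bytes_per_value):
    """Key and value tensors for one token across all layers."""
    return 2 * num_layers * num_kv_heads * head_dim * bytes_per_value


def pack_batch(requests, budget_bytes, bytes_per_token):
    """Admit requests in arrival order until the next one would exceed the budget.

    requests: list of (prompt_len, max_new_tokens).
    Reserving prompt_len + max_new_tokens is a conservative upper bound.
    """
    batch, used = [], 0
    for prompt_len, max_new_tokens in requests:
        need = (prompt_len + max_new_tokens) * bytes_per_token
        if used + need > budget_bytes:
            break
        batch.append((prompt_len, max_new_tokens))
        used += need
    return batch, used


# Hypothetical model shape: 32 layers, 8 KV heads, head_dim 128, 16-bit values.
per_token = kv_bytes_per_token(32, 8, 128, 2)

# Assume 8 GiB is available for the KV cache and target 85% of it.
budget = 8 * 1024**3 * 85 // 100

short_requests = [(500, 500)] * 16
long_requests = [(12000, 2000)] * 4

short_batch, short_used = pack_batch(short_requests, budget, per_token)
long_batch, long_used = pack_batch(long_requests, budget, per_token)
print(len(short_batch), short_used, len(long_batch), long_used)
```

Here all 16 short requests fit with room to spare, while only 3 of the 4 long requests do, even though the short batch holds far more requests. Reserving for the full generation limit is safe but tends to overestimate, which is the underutilization trade-off mentioned above.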

This technique pairs well with garbage collection strategies that immediately free up memory from completed requests. This allows new requests to join active batches as soon as memory becomes available, instead of waiting for an entire batch to finish.

Memory-based batching is particularly effective in production systems dealing with a wide range of request sizes. It ensures stable memory usage and prevents crashes, even under unpredictable workloads. By combining these techniques, you can make the most of your resources while staying within memory limits.

Implementation and Performance Monitoring

After setting up your batching strategy, keeping an eye on its performance is key to ensuring it works as intended. Monitoring helps you validate your approach and make adjustments to optimize the balance between throughput, latency, and resource usage.

Choosing the right batch size is a critical step. While larger batches can improve throughput by leveraging parallel computation, they also come with potential trade-offs like higher latency and increased memory usage. To find the sweet spot, you'll need to rely on benchmarking and profiling specific to your deployment. Combine these findings with continuous performance monitoring to fine-tune your batch processing over time.
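A benchmarking pass of this kind can be as simple as timing a few candidate batch sizes and keeping the fastest one that stays inside a latency budget. In the sketch below, `run_batch` is a hypothetical callable that you would replace with a real inference call, the stand-in simply sleeps, and the numbers in `example_results` are made up to show the selection step.

```python
import statistics
import time


def sweep_batch_sizes(run_batch, batch_sizes, repeats=5):
    """Time run_batch(size) for each size and report latency and throughput."""
    results = []
    for size in batch_sizes:
        latencies = []
        for _ in range(repeats):
            start = time.perf_counter()
            run_batch(size)
            latencies.append(time.perf_counter() - start)
        median = statistics.median(latencies)
        results.append({
            "batch_size": size,
            "median_latency_s": median,
            "requests_per_s": size / median,
        })
    return results


def pick_batch_size(results, latency_budget_s):
    """Highest-throughput batch size whose median latency fits the budget."""
    within_budget = [r for r in results if r["median_latency_s"] <= latency_budget_s]
    if not within_budget:
        return None
    return max(within_budget, key=lambda r: r["requests_per_s"])["batch_size"]


def fake_run_batch(size):
    # Hypothetical stand-in for a real inference call.
    time.sleep(0.001 * size)


measured = sweep_batch_sizes(fake_run_batch, batch_sizes=[1, 2, 4], repeats=3)

# Made-up numbers to show the selection step deterministically.
example_results = [
    {"batch_size": 1, "median_latency_s": 0.10, "requests_per_s": 10.0},
    {"batch_size": 8, "median_latency_s": 0.20, "requests_per_s": 40.0},
    {"batch_size": 32, "median_latency_s": 0.60, "requests_per_s": 53.3},
]
chosen = pick_batch_size(example_results, latency_budget_s=0.25)
none_fit = pick_batch_size(example_results, latency_budget_s=0.05)
```

In the made-up table, batch size 32 has the best throughput but breaks the 250 ms budget, so 8 is chosen. For production monitoring you would likely track tail latency (for example p95 or p99) rather than only the median.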

Batch Processing Method Comparison

When deploying large language models (LLMs), choosing the right batching method is crucial. Let’s break down the differences between static, dynamic, and continuous batching to help you decide which approach fits your needs best.

Batching Methods Comparison Table

Each method comes with its own strengths and trade-offs. Understanding these can ensure your deployment runs smoothly and efficiently.

| Aspect | Static Batching | Dynamic Batching | Continuous Batching |
|---|---|---|---|
| Throughput | Moderate - Fixed batch sizes limit optimization | High - Adaptive sizing maximizes GPU usage | Highest - Processes requests without idle time |
| Latency | High - Requests wait for full batches | Medium - Reduced waiting with flexible sizing | Low - Processes requests as they arrive |
| Resource Utilization | Low to Medium - Underutilization when batches aren't full | High - Efficient use of GPU memory and compute | Highest - Fully optimizes hardware efficiency |
| Implementation Complexity | Low - Simple to set up and debug | Medium - Requires batching logic and scheduling | High - Needs advanced scheduling and memory management |
| Memory Management | Predictable - Fixed memory allocation | Variable - Adjusts based on batch size | Advanced - Demands complex memory handling |
| Best Use Cases | Offline tasks with predictable workloads | APIs with varying traffic patterns | Real-time applications needing high throughput |

This table highlights the trade-offs, helping you match the method to your workload's demands.

Choosing the Right Batching Method

The decision largely depends on your workload type, performance goals, and available resources.

- Static batching is ideal for predictable, offline tasks where simplicity and reliability matter more than speed. For example, it works well for processing large datasets during non-peak hours, where higher latency is acceptable.

- Dynamic batching strikes a balance between performance and complexity. It’s a great fit for production APIs that handle variable traffic patterns. While it introduces moderate latency, it significantly improves resource utilization, making it a go-to choice for most user-facing applications.

- Continuous batching is the most advanced option, offering the highest performance and lowest latency. However, it requires a deep investment in engineering expertise. This method is best for real-time applications with strict latency requirements, such as live chatbots or streaming services, where every millisecond counts.

Your choice should also consider hardware constraints and team capabilities. Static batching is straightforward and predictable, dynamic batching offers flexibility for scaling, and continuous batching maximizes efficiency when you have the resources and expertise to manage its complexity.

Conclusion and Next Steps

Let’s pull everything together and recap the key strategies for efficient batching with LLMs. The goal here is simple: align your batch processing methods with the specific demands of your workload to get the best results.

Batch Processing Optimization Summary

Efficient batch processing hinges on pairing the right methods with smart techniques. For most production setups, dynamic batching strikes a great balance, offering flexibility and performance. If you have the engineering resources, continuous batching can push performance to its peak.

Other techniques, like prompt prefix sharing, reduce memory usage and improve throughput, especially when handling similar requests. Token batching and scheduling help maximize GPU usage by interleaving tokens from requests at different stages of processing. Meanwhile, memory-based batching sizes batches by their key-value cache footprint, so you get the most out of your hardware without running out of memory.

But here’s the thing: optimization isn’t a one-and-done deal. It requires constant monitoring. Regular profiling can help you spot bottlenecks early, and adjusting batch sizes and scaling strategies ensures your system stays efficient, even as demands grow.

Using Latitude for Batch Processing

Latitude’s platform is designed to make these advanced techniques easy to implement. It’s an open-source solution tailored for production-grade LLM deployments, offering tools and resources to streamline batch processing.

Latitude’s prompt engineering tools help fine-tune prompt designs for better batching efficiency, while its collaboration features make it easier for teams to work together on optimizations. Plus, the platform’s community resources provide a wealth of shared knowledge to guide you as you refine your techniques. With Latitude, you’re working with a system built to handle the performance demands of batch processing.

Get Started with Latitude

Ready to dive in? Latitude’s GitHub page is packed with documentation and code examples to help you hit the ground running. Need advice or troubleshooting? Join the Slack community to connect with engineers tackling similar challenges and share real-world insights.

Whether you're just starting out or fine-tuning your existing batch processing setup, Latitude’s open-source approach gives you the flexibility to adapt the platform to your needs while benefiting from ongoing community contributions and updates.

FAQs

Why is dynamic batching better at managing fluctuating traffic in LLM workloads compared to static batching?

Dynamic batching stands out in handling fluctuating traffic because it adjusts in real time, grouping incoming requests as they come in. This approach ensures efficient use of resources and minimizes delays, even during sudden traffic surges.

Unlike static batching, which depends on predetermined batch sizes and often falters with inconsistent workloads, dynamic batching adapts to the current traffic flow. It strikes a balance between throughput and latency by processing requests either once a batch hits a specific size or after a set maximum wait time. This adaptability helps maintain steady performance, even when traffic patterns are unpredictable.

What challenges arise with continuous batching in LLMs, and how can they be solved?

Continuous batching in large language models (LLMs) comes with its own set of hurdles. These include juggling computational and memory requirements during both the prefill and generation stages, as well as dealing with requests that complete at different times. Such challenges can result in increased latency and less efficient use of resources.

To tackle these problems, strategies like KV caching are often employed to cut down on redundant computations. Moreover, techniques such as iteration-level scheduling and selective batching can dynamically adapt to requests finishing at different times. These methods help boost throughput while keeping latency in check, leading to better performance and smarter resource management.

How does prompt prefix sharing improve memory usage, and when is it most effective?

Prompt prefix sharing optimizes memory usage by reusing shared key-value (KV) caches across multiple requests. This reduces unnecessary computations and helps conserve resources. It's especially useful in batch processing scenarios where sequences have overlapping prefixes - think chat systems, few-shot learning, or instruction-based prompts.

By using a shared context, this method boosts throughput and lowers latency. It’s a practical way to handle large volumes of similar requests more efficiently during LLM inference.