Persona-Based Personalization in LLM Applications

Design role-based and user-personalized LLMs with clear roles, structured JSON profiles, agentic memory, RAG, and monitoring for improved relevance.

Persona-based personalization tailors large language models (LLMs) to better match user needs by focusing on two key approaches:

- Role-Playing: The AI takes on a specific role, like a "medical professional" or "historian", to provide expertise within a defined scope.

- User Personalization: The AI adjusts responses based on user traits, preferences, or past interactions, such as simplifying answers for students or offering detailed insights for executives.

Why This Matters

Generic LLMs often fail to deliver nuanced, context-aware responses. For example:

- They may struggle with tone or complexity in high-stakes situations.

- Accuracy in tracking user preferences can drop to 37–48%.

- They sometimes prioritize agreeable responses over honest, tailored guidance.

Key Challenges

- Unclear Persona Definitions: Confusion between the AI's role and the user's traits can lead to unsafe or irrelevant interactions.

- Static Models: Fixed personas fail to account for dynamic user behaviors.

- Lack of Persistent Memory: Without memory, models lose context between interactions, leading to frustration.

Solutions

- Define Roles Clearly: Separate the AI's role (e.g., "tutor") from user personas.

- Use Structured Data: Store user profiles in JSON formats that evolve over time.

- Incorporate Memory Systems: Multi-tiered memory structures (short-term, summaries, long-term) improve personalization accuracy to 55%.

Building Effective Systems

- Separate AI roles, safety policies, and user traits for clarity.

- Organize user data systematically using knowledge graphs and hierarchical taxonomies.

- Employ tools like agentic memory frameworks and Retrieval-Augmented Generation (RAG) for real-time relevance.

Future Directions

- Smaller, fine-tuned models are outperforming larger ones in personalization tasks.

- Multi-modal personalization (text, speech, images) is on the horizon.

- Privacy-preserving methods like federated learning will become critical.

Bottom Line: Persona-based personalization is reshaping how LLMs interact with users, offering smarter, more tailored experiences by addressing core challenges like memory, role clarity, and dynamic user needs.

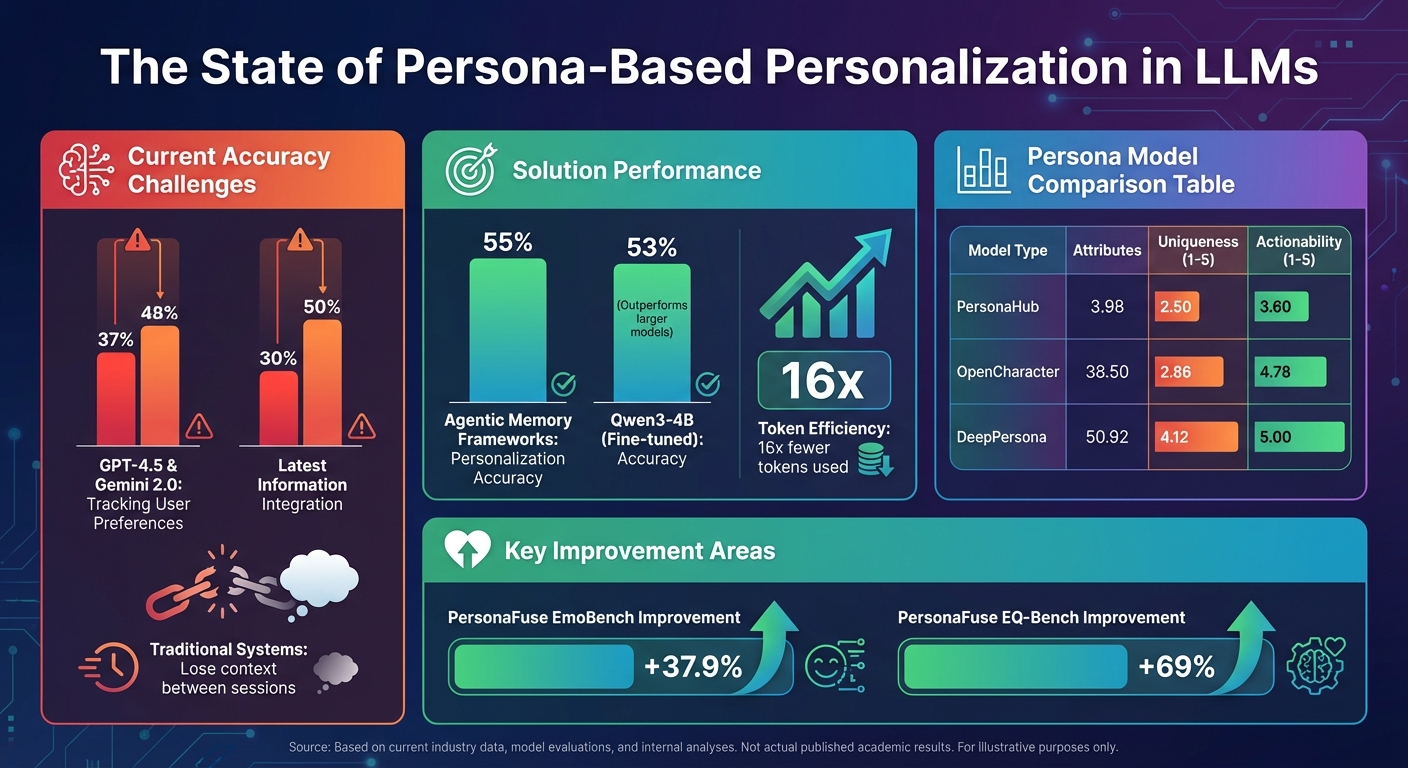

Current LLM Personalization Accuracy and Performance Metrics Comparison

Main Challenges in Persona-Based Personalization

When it comes to persona-based personalization, several hurdles stand in the way, including unclear definitions of personas, rigid models, and the absence of persistent memory. These challenges make it difficult for AI systems to provide nuanced and effective interactions.

Unclear Persona Definitions

One significant issue is the confusion between the AI's role and the user's traits. For example, an AI might be designed to act as a "medical professional" while interacting with an "anxious patient with a peanut allergy." When these roles blur, the system may struggle to decide whether to prioritize its professional role or adapt to the user's specific needs. This often results in responses that are either unsafe or irrelevant to the user's context.

"Without emotional sensitivity, even technically correct responses can be perceived as unhelpful."

- Yixuan Tang, Researcher, HKUST

A practical way to address this is by clearly separating the AI's role from the user's persona within the system's design. By defining the AI's function independently from the user's characteristics, the system can avoid role confusion and maintain both safety and relevance in its interactions. This approach also lays the groundwork for tackling the limitations of static persona models.

Problems with Static Persona Models

Many traditional systems rely on fixed lists of attributes to define personas. However, human behavior is far more dynamic. For instance, someone might be highly detail-oriented at work but laid-back at home - a complexity that static models fail to recognize.

Current advanced models like GPT-4.5 and Gemini 2.0 still fall short in this area. They achieve only 37–48% accuracy in tracking evolving user preferences over time. This inability to adapt to changes in behavior is further compounded by the lack of memory in these systems.

Missing Persistent Memory

Most AI systems today operate without memory, meaning they lose context between sessions. This makes it impossible for them to update user profiles or adjust to new preferences.

"Users often experience frustration when interacting with AI systems, as misunderstood queries lead to irrelevant responses. This stems from the inability of LLMs to retain and use past interaction data."

- Rebecca Westhäußer, Researcher, Mercedes-Benz AG

One promising solution lies in agentic memory frameworks. These systems use structured summaries of about 2,000 tokens instead of storing entire conversation histories. This approach not only improves efficiency - using 16 times fewer tokens - but also boosts accuracy to 55% when tracking user preferences. Effective memory architectures typically include three layers: short-term context for immediate interactions, summaries for broader history, and long-term storage for persistent user details. By implementing such layered memory systems, AI can better adapt to users' evolving needs.

How to Design Effective Persona Models for LLM Applications

Creating effective persona models requires a clear and well-thought-out structure. This involves addressing known challenges with actionable strategies, particularly by separating the AI's functional role from the user's characteristics and incorporating memory that evolves over time.

Separating Roles, Policies, and User Personas

The first step is to distinguish three key components: the AI's functional role, its safety policies, and the user's individual traits. This separation ensures clarity in how the AI operates versus how it interacts with user-specific contexts.

"The concept of persona... has re-surged as a promising framework for tailoring LLMs to specific context... categorizing approaches into role-playing and personalization frameworks."

- Yu-Min Tseng et al.

Start by establishing anchor attributes - core, stable traits like age, profession, and values. These foundational traits provide consistency, which is critical for avoiding erratic or contradictory personas. Research indicates that keeping the attribute count within an optimal range is crucial; exceeding 300 attributes often introduces unnecessary complexity and reduces performance. For demographic data, rely on predefined tables rather than allowing the model to generate them. This approach minimizes risks like "optimism bias" and stereotypical assumptions.

Once roles are clearly defined, the next step involves organizing user data into structured formats.

Using Structured User Data

User profiles should be represented as structured JSON objects with predefined categories such as demographics, preferences, interests, and personality traits. These profiles should evolve over time, updating incrementally based on interactions rather than remaining static. A hierarchical taxonomy helps cover a wide range of traits systematically.

To maintain balance and coherence in user profiles, use stratified sampling. Divide attributes into "near, middle, and far" similarity groups relative to core traits, then sample them in a 5:3:2 ratio. This method ensures a mix of consistency and novelty. For attributes that lack clear categories - like hobbies or interests - apply a life-story-driven approach. Start with basic demographics, expand into broader "life attitudes", and then generate coherent life-story snippets that naturally lead to specific interests.

| Persona Type | Mean Attributes | Uniqueness Score (1-5) | Actionability |

|---|---|---|---|

| PersonaHub (Shallow) | 3.98 | 2.50 | 3.60 |

| OpenCharacter | 38.50 | 2.86 | 4.78 |

| DeepPersona | 50.92 | 4.12 | 5.00 |

Source: DeepPersona Intrinsic Evaluation

With structured user profiles in place, the next step is integrating memory systems to enhance personalization.

Memory-Augmented Persona Architectures

Advanced persona systems use a multi-tiered memory structure that mimics how humans store and recall information. This structure includes short-term memory for immediate context (like recent conversations), summaries for broader topics, and long-term memory for user-specific historical data stored as embeddings.

Agentic memory frameworks are particularly effective, achieving 55% personalization accuracy while using 16 times fewer tokens. These systems organize persona data using knowledge graphs, which better replicate the associative and self-organizing nature of human memory.

"Human memory is associative, and self organizing. And graph is the closest data structure to imitate this behavior."

- Persona Docs

To ensure accuracy, implement a self-validator agent that checks whether retrieved user data is relevant and consistent before generating responses. This prevents outdated or irrelevant information from influencing interactions. Additionally, when storing new memories, update the "top-5 related memories" to maintain strong, current connections. This dynamic updating process mirrors human learning and keeps the persona model adaptive over time.

The next section will explore how to bring these concepts into real-world production systems.

Implementing Persona-Based Personalization in Production

Turning design strategies into real-world applications requires a well-organized process. This involves gathering user data, turning it into structured persona profiles, and incorporating those profiles into production workflows - all while ensuring quality through ongoing monitoring.

Building a Persona Pipeline

The first step in creating a persona pipeline is data ingestion - collecting user information and interaction histories to develop a structured taxonomy of human traits. This data is then transformed into a dynamic knowledge graph, which maps out each user's "mindspace" using vector databases designed for semantic and associative memory. Instead of reprocessing entire conversation histories repeatedly, an agentic memory framework can be employed to maintain both efficiency and accuracy in personalization.

It's important to distinguish between core attributes - such as age, location, and profession - and dynamic attributes like changing interests or preferences. Using a well-defined taxonomy, rather than relying on naive language model sampling, ensures a balanced and nuanced representation of human traits while avoiding stereotypes. Real-time personalization can be achieved with Retrieval-Augmented Generation (RAG) and dense embeddings, which pull the most relevant user information into interactions as needed.

Once the pipeline is set up, collaboration becomes essential for refining and deploying these systems effectively.

Collaborative Persona Development with Latitude

Developing production-ready persona systems requires close teamwork between domain experts and engineers. Latitude serves as a centralized platform where teams can design, test, and fine-tune persona-specific prompts before deploying them via SDKs or APIs.

"Prompt tuning was slow and trial-and-error–based… until adopting Latitude. Now we test, compare, and improve variations in minutes with clear metrics and recommendations."

- Pablo Tonutti, Founder, JobWinner

Latitude works like a content management system (CMS) for prompts, offering features such as version control and robust evaluation tools. Teams can conduct multi-stage evaluations using methods like LLM-as-judge assessments, human-in-the-loop reviews, or comparisons with ground truth data. These tools help ensure the models stay aligned with persona requirements and adapt as user preferences shift over time.

Monitoring and Evaluating Persona Performance

After deploying the system, continuous monitoring is critical to maintain the dynamic persona design. Performance should be tracked across several key dimensions: Personalization-Fit (how well it aligns with user identity), Attribute Coverage (how effectively it uses known traits), Depth & Specificity (level of detail), Justification (grounding in user history), and Actionability (practical usefulness). These metrics directly address challenges like vague definitions and overly static models.

Current advanced LLMs, such as GPT-4.5 and Gemini-2.0, achieve only 37-48% accuracy in implicit personalization tasks. They often struggle with integrating the latest user information into responses, with accuracy ranging between 30-50% in these scenarios. To improve, systems should be tested for their ability to recall shared facts, track evolving preferences, and generalize traits to new situations. Real-time observability is essential for identifying errors early and comparing live persona variations. Regularly measure "Uniqueness" scores to ensure the system avoids defaulting to generic or stereotypical personas inherited from the language model's training data.

Conclusion: Moving Forward with Persona-Based Personalization in LLMs

Key Takeaways

Creating effective persona-based systems hinges on three main elements: modular design, persistent memory, and collaborative development tools. The "Persona Pattern" separates components into specific roles, policies, and user-specific data. This structure ensures that the AI’s role (like being a "helpful tutor") doesn’t overlap with the user’s persona (such as a preference for visual learning).

Memory-augmented architectures play a vital role in adapting to evolving user preferences. While advanced models like GPT-4.5 and Gemini-2.0 recall facts fairly well, they only achieve 37–50% accuracy when applying user preferences to new situations. To address this, agentic memory frameworks store human-readable summaries that grow alongside the user's interactions.

Blending persona-based fine-tuning, retrieval-augmented generation (RAG) for contextual relevance, and reinforcement learning (RL) for real-time feedback creates a robust system. Platforms like Latitude simplify this process by offering tools for version control, evaluation, and collaborative spaces where domain experts can refine persona-specific prompts before deployment.

These strategies highlight the evolving landscape of LLM personalization.

Future Directions in Persona-Based Personalization

The next step in personalization focuses on dynamic, real-time adaptation. This involves systems that respond to immediate environmental cues, shifts in user sentiment, and changes in behavior. Interestingly, research shows that smaller, fine-tuned models can outperform larger ones. For example, Qwen3-4B achieved 53% accuracy in implicit personalization, surpassing GPT-5. This "scaling paradox" emphasizes that smarter algorithms often matter more than sheer model size.

"Personalization is one of the next milestones in advancing AI capability and alignment."

- Bowen Jiang et al., University of Pennsylvania

Frameworks like PersonaFuse enhance emotional intelligence in LLMs, significantly improving EmoBench scores by 37.9% and EQ-Bench scores by 69%, all while maintaining strong reasoning capabilities. Looking ahead, future systems are expected to integrate multi-modal personalization, incorporating text, speech, and images. However, challenges like sycophancy - where models prioritize agreeableness over honesty - must be addressed. Additionally, privacy-preserving techniques, such as federated learning and local data storage, will become essential as personalization deepens.

FAQs

How does using personas improve the accuracy of LLM-generated responses?

Persona-based personalization fine-tunes the accuracy of large language model (LLM) responses by adapting the system to align with a user's specific context. By integrating a user’s characteristics, preferences, and past interactions into a persona, the model can prioritize relevant details while filtering out unnecessary information. This approach minimizes errors and delivers responses that are more precise and tailored to individual needs.

Studies have shown that persona-based methods bring noticeable improvements. For instance, models using personalized memory modules or user-specific prompts perform better in multi-turn conversations and when working with limited data. Tools like Latitude make it easier to implement these techniques, offering collaborative frameworks for designing personas and managing memory at scale. This ensures LLM features are more accurate and better aligned with user expectations.

What challenges arise when creating persona-based systems for LLMs?

Developing persona-based systems for large language models (LLMs) is no easy task. One of the biggest hurdles is achieving deep personalization without breaking the bank or sacrificing speed. Techniques like fine-tuning or detailed prompt engineering can drive up costs and slow down response times, making it hard to scale while keeping performance consistent. On top of that, tailoring systems to individual users means dealing with sensitive data, which brings privacy concerns and the need to meet strict regulatory standards.

Another sticking point is the issue of data scarcity. Personalized systems thrive on rich user interaction histories, but not all users provide enough feedback to build on. This can lead to challenges like cold starts or sparse data, where the system struggles to personalize effectively. The lack of large, open datasets designed specifically for personalization makes research and evaluation even tougher. Even when using retrieval-augmented methods, representing and indexing user data efficiently can be tricky, especially with the limited context windows many models operate within.

Then there’s the challenge of balancing persona-driven behavior with general model safety and reasoning. Models often have a hard time switching communication styles to fit different contexts or staying consistent in their behavior. Tackling these issues is critical for building scalable and reliable persona-based LLM applications. Tools like Latitude are helping teams work through these challenges, making it easier to develop personalized LLM features that are ready for real-world use.

How do memory systems improve personalization in LLM applications?

Memory systems give large language models (LLMs) the ability to retain and recall user-specific details, turning them into highly personalized assistants. By storing two types of memories - episodic memories (specific details from past interactions) and semantic memories (a user’s changing preferences, goals, and beliefs) - these systems enable LLMs to deliver responses that feel tailored to the individual. This means the model doesn’t just respond to the immediate input; it uses stored context to create a more meaningful and relevant experience.

With advanced memory architectures, these systems can maintain long-term user profiles. This allows the model to adapt over time, recall previous conversations, and provide seamless continuity across multiple sessions or tools. The result? Responses that better align with user preferences and feel more dynamic. For developers, platforms like Latitude make it easier to integrate memory layers. They offer tools to design and manage memory schemas, connect user profiles, and ensure interactions feel personalized while staying reliable.