Proprietary LLMs: Hidden Costs to Watch For

Break down hidden token fees, vendor lock‑in, scaling spikes, and compliance costs of proprietary LLMs, plus practical ways to reduce spending.

Using proprietary LLMs might seem straightforward, but hidden costs can quickly spiral out of control. Here's what you need to know:

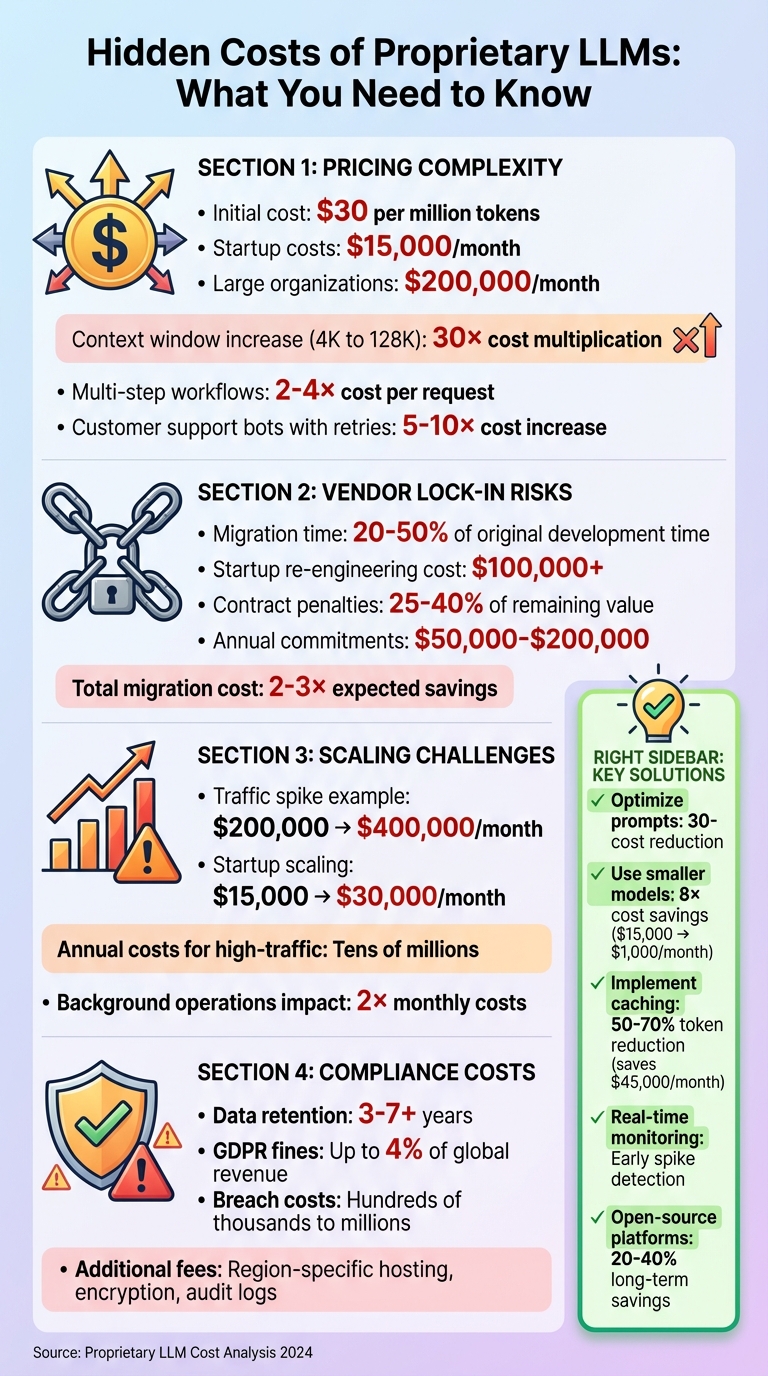

- Pricing Complexity: While initial costs appear low (e.g., $30 per million tokens), scaling production often leads to unpredictable expenses - ranging from $15,000/month for startups to $200,000/month for larger organizations.

- Vendor Lock-In Risks: Switching providers can be costly and time-consuming, with migration expenses consuming 20–50% of original development time and potential penalties for breaking contracts.

- Scaling Challenges: High traffic, larger context windows, or multi-step workflows can multiply costs significantly - sometimes doubling or tripling monthly bills.

- Compliance Costs: Meeting regulations like GDPR or HIPAA often adds unexpected expenses for region-specific hosting, encryption, and data storage.

Key Solutions:

- Optimize Prompts: Shorter prompts and fewer tokens can cut costs by 30–50%.

- Use Smaller Models: On simpler tasks, smaller task-specific models can cut costs by 8× or more.

- Monitor Usage: Real-time dashboards help track and control token consumption.

- Consider Open-Source Tools: Platforms like Latitude reduce vendor dependency and compliance costs.

Proprietary LLMs offer convenience, but understanding and managing these hidden costs is crucial for long-term success.

Hidden Costs of Proprietary LLMs: Key Statistics and Cost-Saving Solutions

Hidden Costs in Pricing Models

Common Pricing Models and Their Problems

Proprietary large language model (LLM) providers usually stick to three pricing structures: per-token usage, subscription tiers, and enterprise service-level agreements (SLAs). Here's how they work:

- Per-token pricing: Charges separately for input and output tokens. For instance, a high-end model might cost about $0.084 per query.

- Subscription tiers: These come with a fixed monthly fee and usage caps. If demand increases, you may need to upgrade to a higher tier.

- Enterprise SLAs: These add premiums for better support and uptime guarantees. They often include variable fees for high-volume access.

While these models may seem straightforward, costs can spiral as usage grows. For example, moving from a 4K to a 128K context window, and actually filling it, can increase token usage - and costs - by over 30× [8]. Subscription tiers, although predictable at first, can lead to unexpected overage fees when usage spikes or to wasted capacity when it drops.
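The per-token arithmetic above can be sketched in a few lines. This is a minimal illustration, assuming a flat $30 per million tokens for both input and output (real price lists usually charge the two differently); `TokenPrice`, `query_cost`, and `FLAT_PRICE` are hypothetical names. Under that assumption, a $0.084 query corresponds to roughly 2,800 tokens in total.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class TokenPrice:
    """US dollars per one million tokens (illustrative values, not a real price list)."""
    input_per_million: float
    output_per_million: float


def query_cost(price: TokenPrice, input_tokens: int, output_tokens: int) -> float:
    """Cost in dollars of one request under per-token pricing."""
    total = (input_tokens * price.input_per_million
             + output_tokens * price.output_per_million)
    return total / 1_000_000


# Assumption: a flat $30 per million tokens for both input and output.
FLAT_PRICE = TokenPrice(input_per_million=30.0, output_per_million=30.0)

if __name__ == "__main__":
    short = query_cost(FLAT_PRICE, input_tokens=4_000, output_tokens=500)
    long = query_cost(FLAT_PRICE, input_tokens=128_000, output_tokens=500)
    print(f"4K-token prompt:   ${short:.3f} per query")
    print(f"128K-token prompt: ${long:.3f} per query")
    print(f"input tokens grew {128_000 / 4_000:.0f}x")
```

Because output tokens stay constant in this example, the total bill grows slightly less than the input does; the multiplier only applies to the part of the request that actually gets longer.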

These pricing structures barely scratch the surface. The real expenses multiply as operational complexities come into play.

How Costs Multiply During Operations

As usage scales, additional factors can significantly increase token costs. Workflows using retrieval-augmented generation (RAG) or tool-calling often require multiple model calls for a single user action. For example, one call might rewrite the query, another might retrieve relevant data, and a final call might generate the answer. This can double, triple, or even quadruple the cost per request. Larger context windows amplify token usage further, sometimes by 10×.

Retry attempts and error handling add another layer of expense. For example, customer support bots using reasoning chains may see costs rise by 5–10× when retries are needed to correct partial or inaccurate responses. Without proper optimization, a single use case can cost tens of thousands of dollars per month. If you’re managing multiple use cases, expenses can easily climb into the hundreds of thousands. Additionally, maintaining key-value cache storage can push costs even higher.
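To see how call chains and retries compound, here is a rough model of the multiplication described above. The $0.05 per-call figure is an assumption, chosen because it reproduces the article's startup scenario (10,000 daily requests at about $15,000 per month); the function names and the retry rate are illustrative.

```python
def cost_per_action(cost_per_call: float, calls_per_action: int,
                    extra_attempts_per_call: float = 0.0) -> float:
    """Expected cost of one user action.

    extra_attempts_per_call is the average number of retries per call,
    e.g. 0.5 means that, on average, every second call is retried once.
    """
    return cost_per_call * calls_per_action * (1.0 + extra_attempts_per_call)


def monthly_cost(daily_actions: int, per_action: float, days: int = 30) -> float:
    """Monthly bill for a steady daily volume of user actions."""
    return daily_actions * per_action * days


if __name__ == "__main__":
    single = cost_per_action(0.05, calls_per_action=1)
    chained = cost_per_action(0.05, calls_per_action=3, extra_attempts_per_call=0.5)
    print(f"single call:        ${monthly_cost(10_000, single):,.0f} per month")
    print(f"3 calls + retries:  ${monthly_cost(10_000, chained):,.0f} per month")
```

The model is deliberately simple: it treats every call in the chain as equally expensive, which is rarely true in practice, but it makes the multiplier visible before the first invoice does.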

How to Control Pricing Surprises

To keep these hidden costs in check, cost management is essential. Here are some practical strategies:

- Optimize prompts: Simplify and shorten system and user prompts, remove unnecessary context, and structure outputs more efficiently. These adjustments can reduce token usage and cut costs by 30–50%.

- Right-size your models: For simpler tasks like classification or summarization, smaller models can deliver similar accuracy at a fraction of the cost. For instance, using distilled 8B models instead of 400B ones can lower a startup's costs from roughly $15,000 to under $1,000 per month - a reduction of well over 8×.

- Track usage in real time: Dashboards that monitor token consumption, request volumes, and cost breakdowns can help teams spot spikes and anomalies early.

Platforms like Latitude simplify this process by combining prompt optimization, workflow management, and usage tracking. They make it easier for domain experts and engineers to collaborate on designing efficient prompts and comparing model performance - all without relying on a specific vendor.
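Right-sizing, as described in the list above, often comes down to a routing rule plus some arithmetic. The sketch below is one possible shape, not any platform's actual API: the model identifiers, the task names, and the assumption that cost scales linearly with traffic are all hypothetical.

```python
SMALL_MODEL = "small-8b"    # hypothetical model identifier
LARGE_MODEL = "large-400b"  # hypothetical model identifier
SIMPLE_TASKS = frozenset({"classification", "summarization"})


def pick_model(task: str) -> str:
    """Send simple tasks to the small model and everything else to the large one."""
    return SMALL_MODEL if task in SIMPLE_TASKS else LARGE_MODEL


def blended_monthly_cost(all_large_cost: float, simple_share: float,
                         reduction_factor: float) -> float:
    """Monthly cost after moving `simple_share` of traffic to a model that is
    `reduction_factor` times cheaper. Assumes cost is proportional to traffic."""
    stays_large = all_large_cost * (1.0 - simple_share)
    moved_small = all_large_cost * simple_share / reduction_factor
    return stays_large + moved_small


if __name__ == "__main__":
    # Assumption: 60% of traffic is simple enough for the small model.
    print(pick_model("classification"))
    print(f"${blended_monthly_cost(15_000, simple_share=0.6, reduction_factor=8):,.0f}")
```

Note that the blended saving is smaller than the headline reduction factor, because only the share of traffic that can safely move to the small model benefits from it.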

Vendor Lock-In and Its Financial Impact

What Is Vendor Lock-In?

Proprietary large language models (LLMs) often come with hidden costs, and one of the biggest culprits is vendor lock-in.

Vendor lock-in happens when a company becomes dependent on a specific LLM provider's proprietary technology, including their SDKs, custom code, and model-specific dependencies. For example, if you've built your system using OpenAI's SDK, switching to Anthropic's Claude isn't as simple as flipping a switch. Each provider has its own completion API and tokenizer, so the prompts, token budgets, and response parsing throughout your prompt chain typically need to be reworked and retested. Similarly, companies that rely on provider-specific tools often find their data pipelines tied to proprietary vector stores and fine-tuning formats.

This reliance creates a technical bottleneck, turning what might seem like a simple migration into a costly and complicated endeavor.

The Cost of Being Locked In

Breaking free from vendor lock-in can be expensive - often much more than anticipated. Migrating to a new provider can consume 20–50% of the original development time. For startups, this could mean spending over $100,000 just on re-engineering. On top of that, breaking multi-year contracts can come with hefty penalties of 25–40% of the remaining contract value.

And it doesn’t stop there. During migration, overlapping contracts with both vendors often mean paying double. With minimum annual commitments of $50,000 to $200,000 that apply even if usage drops, the total cost of switching can balloon to two or three times the savings you expected from the move.
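The three components above (re-engineering, contract penalties, and overlapping commitments) can be added up before a migration is approved. The following is a back-of-the-envelope sketch; the function name and every input value are assumptions picked from within the ranges quoted above, not data from a real migration.

```python
def switching_cost(original_dev_cost: float, migration_fraction: float,
                   remaining_contract_value: float, penalty_fraction: float,
                   old_annual_minimum: float, overlap_months: int) -> dict:
    """Break the cost of leaving a provider into its three main components."""
    migration = original_dev_cost * migration_fraction
    penalty = remaining_contract_value * penalty_fraction
    # While both vendors run in parallel, the old minimum commitment still applies.
    overlap = old_annual_minimum / 12 * overlap_months
    return {
        "migration": migration,
        "penalty": penalty,
        "overlap": overlap,
        "total": migration + penalty + overlap,
    }


if __name__ == "__main__":
    estimate = switching_cost(
        original_dev_cost=400_000, migration_fraction=0.25,       # 20-50% range
        remaining_contract_value=80_000, penalty_fraction=0.25,   # 25-40% range
        old_annual_minimum=60_000, overlap_months=3,
    )
    for item, dollars in estimate.items():
        print(f"{item:>10}: ${dollars:,.0f}")
```

Comparing the `total` against the savings expected from the new provider gives a rough payback period, which is usually the number decision-makers want to see.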

Using Open-Source Tools to Avoid Lock-In

Open-source tools offer a way out of this costly cycle by allowing companies to build systems that aren’t tied to one specific LLM provider. Libraries and formats like Hugging Face Transformers and ONNX provide standardized interfaces for open models, making it easier to swap models without rewriting your application code. This can reduce migration time from months to just days.
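The underlying idea, keeping application code behind a provider-neutral interface, can be shown without any particular library. The sketch below uses Python's `typing.Protocol`; `ChatModel`, `VendorAAdapter`, and `VendorBAdapter` are hypothetical names, and the adapters return canned strings instead of calling a real SDK.

```python
from typing import Protocol


class ChatModel(Protocol):
    """The only interface application code is allowed to depend on."""

    def complete(self, prompt: str) -> str: ...


class VendorAAdapter:
    """Stand-in for a thin wrapper around one vendor's SDK (no real SDK is called)."""

    def complete(self, prompt: str) -> str:
        return f"[vendor-a] {prompt}"


class VendorBAdapter:
    """Stand-in for a wrapper around a second vendor's SDK."""

    def complete(self, prompt: str) -> str:
        return f"[vendor-b] {prompt}"


def summarize(model: ChatModel, text: str) -> str:
    """Application logic: unaware of which vendor sits behind `model`."""
    return model.complete(f"Summarize in one sentence: {text}")


if __name__ == "__main__":
    for model in (VendorAAdapter(), VendorBAdapter()):
        print(summarize(model, "Quarterly revenue rose while costs fell."))
```

With this structure, switching providers means writing one new adapter and re-running evaluations, while `summarize` and the rest of the application stay untouched.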

One standout example is Latitude, an open-source platform designed to separate prompts from proprietary SDKs. By using Latitude, organizations can cut long-term costs by 20–40% and drastically reduce migration challenges. Combined with practices like quarterly migration tests and a target of 80% code reusability across providers, it’s a practical way to maintain flexibility and avoid the financial strain of vendor lock-in.

Scaling Challenges and Cost Unpredictability

How Scaling Increases LLM Costs

When it comes to proprietary large language models (LLMs), costs can escalate quickly due to the per-token pricing for both inputs and outputs. During high-traffic periods like Black Friday or the holiday season, expenses can skyrocket. For example, workflows that involve multiple LLM calls - such as running a summarization followed by an analysis - can double monthly costs, pushing a $200,000 bill to $400,000 for a 400B parameter model. Unfortunately, providers rarely offer volume discounts during these peak times, meaning every extra API call comes at full price.

For businesses with high visitor numbers, this can lead to annual costs reaching tens of millions of dollars. Background operations like automated data processing, real-time analytics, or ongoing model retraining only add to the token usage. A startup handling 10,000 daily requests, for instance, might see their monthly costs jump from $15,000 to over $30,000 when these tasks are factored in.

Methods for Cost-Efficient Scaling

To tackle these scaling challenges, businesses need strategies that keep costs under control. One effective approach is caching frequently used responses, which can cut token usage by 50–70% during traffic spikes. Another option is retrieval-augmented generation (RAG): sending only the retrieved passages relevant to a query, rather than entire documents, reduces input length and can save businesses as much as $45,000 per month.
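Response caching is the simplest of these techniques to prototype. The sketch below is a minimal in-memory version, assuming exact-match caching after light normalization (lowercasing and whitespace collapsing); `CachedModel` is a hypothetical wrapper, and `call_model` stands in for whatever function actually reaches the provider.

```python
import hashlib
from typing import Callable


class CachedModel:
    """Wraps a model call and reuses answers for prompts it has already seen."""

    def __init__(self, call_model: Callable[[str], str]):
        self._call_model = call_model
        self._cache: dict[str, str] = {}
        self.hits = 0
        self.misses = 0

    @staticmethod
    def _key(prompt: str) -> str:
        # Normalize case and whitespace so trivially different prompts share a key.
        normalized = " ".join(prompt.lower().split())
        return hashlib.sha256(normalized.encode("utf-8")).hexdigest()

    def complete(self, prompt: str) -> str:
        key = self._key(prompt)
        if key in self._cache:
            self.hits += 1
            return self._cache[key]
        self.misses += 1
        answer = self._call_model(prompt)  # the only line that costs tokens
        self._cache[key] = answer
        return answer


if __name__ == "__main__":
    model = CachedModel(lambda prompt: f"answer to: {prompt}")
    model.complete("What is your refund policy?")
    model.complete("  what is your REFUND policy? ")
    print(f"hits={model.hits} misses={model.misses}")
```

A production cache would also need an expiry policy and a shared store, and exact-match keys only help when many users ask the same question in nearly the same words.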

Optimizing prompts is another way to manage expenses. Techniques like compressing prompts and using concise few-shot examples can reduce token usage by half. Additionally, downsizing from a large model to an 8B parameter model can cut costs by 8× or more. For example, monthly costs of $30,000 could drop to as little as $1,000 with this adjustment.

Using Dashboards to Track Costs

While optimization methods are essential, keeping a close eye on costs is equally important. Real-time monitoring through centralized dashboards can help businesses avoid unexpected expenses. Tools like OpenAI's usage dashboard or third-party monitoring solutions allow users to track API activity and set alerts when token usage crosses certain thresholds. This kind of visibility is especially helpful for enterprises with monthly bills that exceed $200,000, as it enables them to catch and address cost spikes early.
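The threshold alerts mentioned above do not require a full dashboard to prototype. Here is a minimal sketch of per-feature spend tracking with a budget alert; `UsageTracker` is a hypothetical class, and the $30 per million tokens price and the 80% alert level are assumptions.

```python
from collections import defaultdict


class UsageTracker:
    """Accumulates spend per feature and flags when a budget threshold is reached."""

    def __init__(self, monthly_budget_usd: float, alert_fraction: float = 0.8):
        self.alert_threshold = monthly_budget_usd * alert_fraction
        self.spend_by_feature = defaultdict(float)

    @property
    def total_spend(self) -> float:
        return sum(self.spend_by_feature.values())

    def record(self, feature: str, tokens: int, price_per_million: float) -> bool:
        """Add one request's cost; return True once spend reaches the alert threshold."""
        self.spend_by_feature[feature] += tokens * price_per_million / 1_000_000
        return self.total_spend >= self.alert_threshold


if __name__ == "__main__":
    tracker = UsageTracker(monthly_budget_usd=100.0)
    for feature, tokens in [("chat", 2_000_000), ("search", 1_000_000)]:
        if tracker.record(feature, tokens, price_per_million=30.0):
            print(f"ALERT: ${tracker.total_spend:.2f} spent, threshold reached")
    print(dict(tracker.spend_by_feature))
```

Breaking spend down by feature matters as much as the alert itself, since it shows which workflow to optimize once the threshold fires.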

Platforms like Latitude take monitoring a step further by offering detailed logs, custom checks, and prompt version control. These features allow businesses to adjust token usage in real time. Alfredo Artiles, CTO at Audiense, highlights the platform’s capabilities:

"Latitude is amazing! It's like a CMS for prompts and agents with versioning, publishing, rollback… the observability and evals are spot-on, plus you get logs, custom checks, even human-in-the-loop."

Data Compliance and Long-Term Costs

Compliance Costs for Proprietary LLMs

When U.S. companies rely on proprietary large language models (LLMs), they face the challenge of meeting regulations like HIPAA (healthcare data), GLBA (financial data), FERPA (education records), and CCPA/CPRA (California consumer data). For businesses dealing with EU residents, GDPR adds another layer of complexity, requiring strict controls over data usage, audit trails, security measures, and formal agreements with LLM providers. These regulatory requirements not only make operations more intricate but also lead to substantial ongoing costs.

For example, HIPAA compliance often means using higher-cost "HIPAA-eligible" cloud regions or services, while GDPR compliance may demand data localization within EU-based data centers and adherence to Standard Contractual Clauses for cross-border data transfers. Proprietary LLM vendors typically charge extra for features like region-specific hosting, dedicated tenancy, customer-managed encryption keys, and detailed audit logs - services essential for regulatory compliance.

Hidden Risks in Data Handling

Legal and regulatory requirements often compel companies to store raw prompts, outputs, and embeddings for extended periods - sometimes as long as 3 to 7 years or more. For high-volume applications, this can result in terabytes of data, leading to significant storage expenses. These costs include backups, disaster recovery replicas, and encryption-key rotations, which can sometimes rival or even surpass the charges for API usage.
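A quick estimate shows how retention periods turn into a recurring storage bill. Everything numeric in this sketch is an assumption for illustration: 5 million interactions per day, 20 KB stored per interaction, $0.02 per GB per month, and three copies (primary, backup, disaster-recovery replica). Substitute your own volumes and your provider's actual prices.

```python
def retained_gb(daily_requests: int, bytes_per_interaction: int,
                retention_years: float) -> float:
    """Data held at steady state, once the oldest records start expiring.

    Uses decimal gigabytes (1 GB = 1e9 bytes).
    """
    return daily_requests * bytes_per_interaction * 365 * retention_years / 1e9


def monthly_storage_cost(gb: float, price_per_gb_month: float, copies: int) -> float:
    """Recurring storage bill across the primary copy, backups, and replicas."""
    return gb * price_per_gb_month * copies


if __name__ == "__main__":
    gb = retained_gb(daily_requests=5_000_000, bytes_per_interaction=20_000,
                     retention_years=7)
    cost = monthly_storage_cost(gb, price_per_gb_month=0.02, copies=3)
    print(f"retained: {gb / 1000:,.1f} TB, storage: ${cost:,.0f} per month")
```

The estimate ignores retrieval fees, key rotation, and audit tooling, so it is better read as a lower bound than as a forecast.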

The risks of non-compliance are equally daunting. Sending sensitive or regulated data to LLM endpoints in regions that fail to meet data residency requirements can violate GDPR and result in fines of up to €20 million or 4% of global annual turnover, whichever is higher. A single data breach could trigger a cascade of expenses: breach notifications, forensic investigations, third-party security assessments, and hefty legal fees. These costs can easily climb into the hundreds of thousands - or even millions - of dollars. Beyond financial penalties, the exposure of trade secrets or proprietary data through vendor model training could lead to competitive disadvantages, contract disputes, and a loss of customer trust.

Lowering Compliance Costs with Open-Source Platforms

Adopting open-source platforms can help organizations tackle these rising costs and risks head-on. Tools like Latitude provide a practical solution by enabling companies to enforce consistent compliance measures. For instance, Latitude supports features like automatic PII redaction, data classification tagging, and routing requests to region-specific endpoints - all before data ever reaches a proprietary LLM. Because the platform is self-hosted and open, compliance and security teams can implement custom checks without waiting for vendor updates.

Latitude’s policy-aware pipelines take a proactive approach by inspecting prompts and outputs for sensitive information, automatically masking high-risk fields, and directing traffic only to endpoints that meet specific regulatory standards (e.g., HIPAA-ready, EU-only, or finance-approved models). The platform also maintains detailed metadata logs for every interaction, capturing information such as user details, purpose, data categories, selected model, region, and decision outcomes. This centralized audit trail simplifies regulatory audits and Data Protection Impact Assessments, giving organizations a single, streamlined system for oversight and control.
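The general pattern described above, redacting sensitive fields and routing by policy before anything reaches a provider, can be illustrated with a short sketch. This is not Latitude's API: the function names, the two regular expressions, and the `example.com` endpoint URLs are hypothetical, and real PII detection needs far broader coverage than two patterns.

```python
import re

EMAIL = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")
US_SSN = re.compile(r"\b\d{3}-\d{2}-\d{4}\b")

# Hypothetical allow-list: (user region, data category) -> approved endpoint.
APPROVED_ENDPOINTS = {
    ("eu", "general"): "https://eu.llm.example.com",
    ("us", "general"): "https://us.llm.example.com",
    ("us", "health"): "https://us-hipaa.llm.example.com",
}


def redact(text: str) -> str:
    """Mask e-mail addresses and US Social Security numbers before sending."""
    text = EMAIL.sub("[EMAIL]", text)
    return US_SSN.sub("[SSN]", text)


def pick_endpoint(region: str, category: str) -> str:
    """Return an approved endpoint, or refuse if no compliant route exists."""
    try:
        return APPROVED_ENDPOINTS[(region, category)]
    except KeyError:
        raise ValueError(f"no approved endpoint for {region}/{category}") from None


if __name__ == "__main__":
    prompt = redact("Contact jane.doe@example.com, SSN 123-45-6789")
    print(pick_endpoint("us", "general"), "<-", prompt)
```

Failing closed, by raising an error when no approved route exists, is the important design choice here: a request that cannot be sent compliantly should not be sent at all.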

Conclusion: Building a Cost-Effective LLM Strategy

Let’s break down the key challenges and practical steps to deploying a cost-effective LLM strategy while keeping hidden expenses in check.

Key Hidden Costs to Watch For

There are three major hidden cost areas to consider: vendor lock-in, scaling unpredictability, and compliance and data-handling risks [8]. Vendor lock-in can lead to pricey migration efforts, re-integration headaches, duplicated infrastructure, and penalties for breaking contracts when shifting providers. Scaling unpredictability often results in ballooning per-token API charges, extra fees for capacity upgrades, or higher enterprise-tier pricing as usage grows [8]. Compliance and data handling add premiums for region-specific hosting, years of log and embedding storage, and exposure to regulatory fines. Without proper monitoring, pilot projects can quickly spiral from a few hundred dollars to tens of thousands per month.

Practical Steps to Cut Costs

- Shorten prompts and limit outputs to reduce per-request costs.

- Choose smaller, task-specific models for simpler operations to avoid overpaying for unnecessary capabilities.

- Use caching to eliminate redundant API calls.

- Monitor expenses with real-time dashboards to stay on top of usage and spending [8].

These steps help create a more efficient, scalable, and cost-conscious LLM deployment.

Open-Source Platforms: A Long-Term Solution

Open-source platforms, such as Latitude, can significantly cut hidden costs while offering greater flexibility. Latitude provides centralized tools for designing, versioning, and testing prompts at scale, which reduces the need for repetitive trial-and-error processes. It also prevents vendor lock-in by allowing teams to switch between models without overhauling their architecture. With full observability, developers gain access to detailed logs of every reasoning step, making it easier to identify issues, optimize token usage, and minimize operational waste. These features make open-source platforms a smart choice for controlling costs and maintaining adaptability over time.

FAQs

What hidden costs should you consider with proprietary LLMs?

Proprietary LLMs can come with some hidden expenses that might strain your budget and limit your flexibility. A major issue is vendor lock-in, which makes it tough to switch providers without significant effort or cost. Then there are usage fees, which can rise sharply as your usage increases over time. Lastly, scaling limitations may hinder your ability to grow without facing hefty additional costs.

Being aware of these potential pitfalls ahead of time allows you to plan smarter and make decisions that help you sidestep unforeseen financial and operational hurdles.

What steps can businesses take to avoid vendor lock-in with proprietary LLMs?

To steer clear of vendor lock-in with proprietary LLMs, businesses should prioritize strategies that encourage flexibility and independence. Begin by adopting open standards for integration - this makes switching providers more straightforward if the need arises. It's also crucial to maintain complete control over your data and prompts, ensuring they're portable and adaptable. On top of that, utilizing tools that facilitate version control, experimentation, and smooth deployment can help you scale AI solutions without sacrificing autonomy.

How can I manage compliance costs when using LLMs?

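To keep compliance costs in check when working with LLMs, start by establishing clear usage policies that define which data may be sent to a model and where it may be processed. This helps prevent unnecessary spending and keeps operations streamlined. Redact sensitive fields before they reach a provider and route regulated traffic only to endpoints that meet the relevant requirements. Fine-tune your prompts to minimize token usage, which lowers both API charges and the volume of data you must retain. Keep an eye on system performance to ensure you're not scaling beyond what’s actually required.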

Consider incorporating open-source tools into your development process. They can offer cost-effective solutions while still allowing for adaptability. Finally, implement version control and conduct thorough testing. This reduces the risk of costly errors that could lead to compliance headaches down the road.