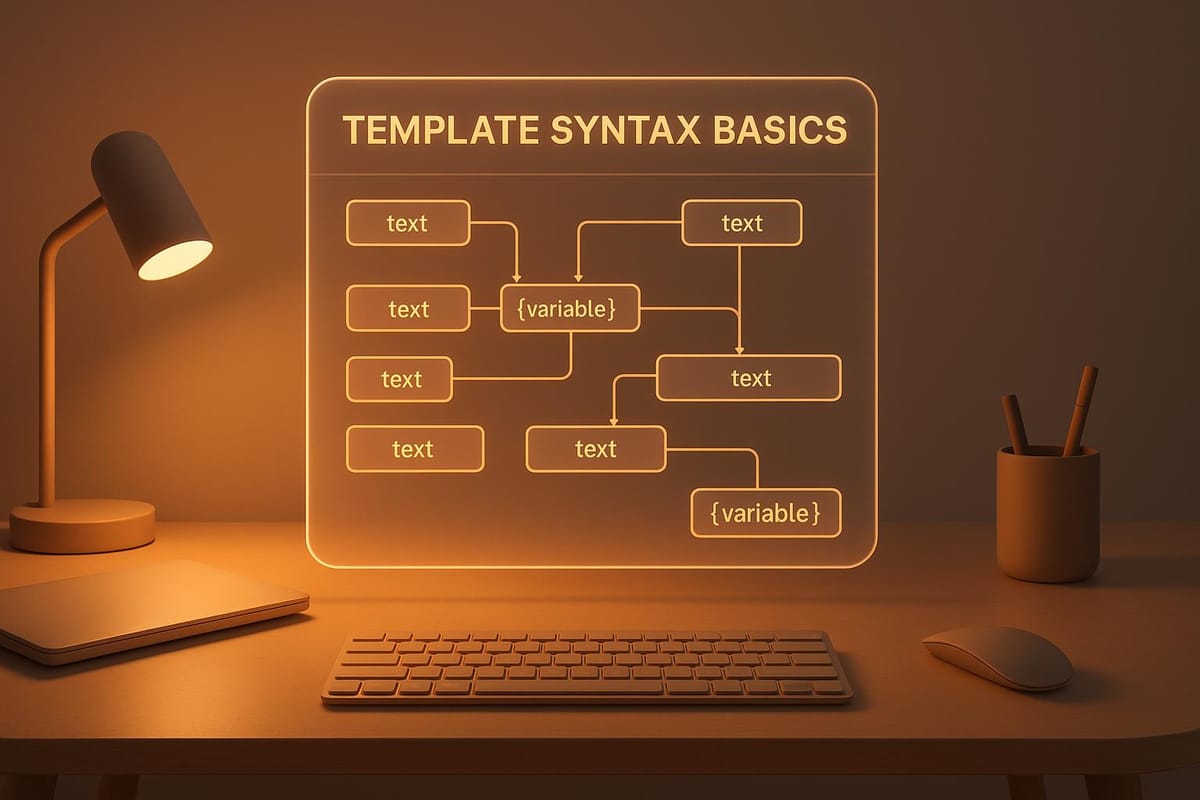

Template Syntax Basics for LLM Prompts

Learn how template syntax enhances AI prompt creation, making it efficient and scalable with dynamic content and advanced features.

Template syntax makes creating prompts for AI models simpler and more efficient. Instead of writing new prompts for every situation, you can use templates with placeholders (like {customer_query}) to insert dynamic content. This saves time, ensures consistency, and works well for scaling AI applications.

Here’s what you’ll learn:

- What it is: Templates use variables to create flexible prompts that adjust to different inputs.

- Why it matters: It helps manage prompts at scale, keeps interactions consistent, and reduces manual work.

- Who benefits: Developers, domain experts, and anyone working with AI can use it to build smarter systems.

- Key features: Variables, control flow (like if/else), and formatting rules.

- Advanced tools: Modular templates, dynamic data injection, and error handling for reliability.

Want to improve your AI prompts? Start by mastering template basics and experimenting with tools like LangChain or Semantic Kernel for better results.

Basic Principles of Template Syntax

Getting a good grasp of the basics of template syntax is key to crafting effective prompts for large language models (LLMs). These principles lay the groundwork for building flexible, reusable templates that can handle different scenarios and inputs with ease.

Variables and Interpolation

At the heart of any template system are variables. These act as placeholders that are swapped out with actual values during processing - a technique known as string interpolation. This method allows you to create prompts that are both dynamic and context-aware by embedding specific details into a consistent structure.

Take, for instance, the open-source framework LangChain, which simplifies LLM application development. Here’s an example of how variables work in an ecommerce customer service template:

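```python
# Older LangChain releases: from langchain.prompts import PromptTemplate
from langchain_core.prompts import PromptTemplate

# {customer_query} is replaced with the real customer message at format time
ecommerce_prompt_template = PromptTemplate(
    input_variables=["customer_query"],
    template="""
You are an AI chatbot specializing in ecommerce customer service.
Your goal is to assist customers with their inquiries related to products, orders, shipping, and returns.
Please respond to the following customer query in a helpful and friendly manner:

Customer Query: {customer_query}
""",
)

prompt = ecommerce_prompt_template.format(customer_query="Where is my order?")
```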

In this template, {customer_query} serves as the placeholder, marked by curly braces to indicate where specific input will replace static text.

For scenarios requiring multiple inputs, templates can include several placeholders. Here’s an example focused on shipping inquiries:

```python
from langchain_core.prompts import PromptTemplate

# Two placeholders, both declared in input_variables
shipping_prompt_template = PromptTemplate(
    input_variables=["customer_location", "shipping_method"],
    template="""
You are an AI assistant helping customers track and estimate shipping times for their ecommerce orders.

Customer's Location: {customer_location}
Selected Shipping Method: {shipping_method}

Based on this information, provide an estimated delivery time, mention any potential delays, and offer reassurance about the store's commitment to timely delivery.
""",
)

prompt = shipping_prompt_template.format(customer_location="Austin, TX", shipping_method="Standard")
```

As Microsoft Learn explains, "String interpolation provides a more readable, convenient syntax to format strings. It's easier to read than string composite formatting."
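PromptTemplate handles this substitution for you. To show what interpolation amounts to underneath, here is a dependency-free sketch using Python's built-in `str.format` and `string.Formatter`. The `placeholders` and `render` helpers are hypothetical names, not LangChain APIs; the sketch also refuses to render when a variable is missing rather than sending a half-filled prompt.

```python
import string

# A plain-Python stand-in for a prompt template: {customer_query} is a named placeholder
TEMPLATE = (
    "You are an AI chatbot specializing in ecommerce customer service.\n"
    "Customer Query: {customer_query}"
)


def placeholders(template: str) -> set:
    """Return the names of all {placeholders} in a str.format-style template."""
    return {
        field
        for _, field, _, _ in string.Formatter().parse(template)
        if field  # parse() yields None for trailing literal text and "" for bare {}
    }


def render(template: str, **values: str) -> str:
    """Interpolate values into the template, refusing to render if any are missing."""
    missing = placeholders(template) - set(values)
    if missing:
        raise KeyError(f"missing template variables: {sorted(missing)}")
    return template.format(**values)


prompt = render(TEMPLATE, customer_query="Where is my order #1234?")
print(prompt)
```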

Control Flow Basics

Control flow elements bring logical decision-making into templates. They allow you to customize outputs by showing or hiding content based on specific conditions or by looping through collections of data. This flexibility reduces the need for multiple templates, simplifying maintenance.

- Conditionals: These let you tailor messages by displaying content only if certain conditions are met. For example, you might show different responses depending on a customer’s location or membership status.

- Loops: These are useful for handling lists, such as product recommendations or order details, by iterating through each item efficiently.

By integrating these elements, you can design templates that adapt to various situations without requiring separate versions for every possible case.
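Template engines such as Jinja2 express these with `{% if %}` and `{% for %}` tags. To keep the example dependency-free, here is the same logic sketched in plain Python; `build_prompt`, the membership flag, and the loyalty wording are hypothetical.

```python
def build_prompt(customer_name: str, is_member: bool, recommendations: list) -> str:
    lines = [f"You are a helpful shopping assistant talking to {customer_name}."]

    # Conditional: choose an instruction based on membership status
    if is_member:
        lines.append("The customer is a loyalty member; mention free express shipping.")
    else:
        lines.append("Invite the customer to join the loyalty program.")

    # Loop: render each recommended product as its own bullet (skipped if the list is empty)
    if recommendations:
        lines.append("Recommend these products:")
        for product in recommendations:
            lines.append(f"- {product}")

    return "\n".join(lines)


print(build_prompt("Ana", True, ["Trail shoes", "Rain jacket"]))
```

One function covers members, non-members, and any number of recommendations, which is the "one template instead of many" benefit described above.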

Whitespace and Formatting Rules

Good whitespace management is more than just aesthetics - it ensures your outputs are clean, professional, and easy to read. Consistency in formatting, like where you place braces or how you indent, makes your templates simpler to debug and maintain.

- Vertical spacing: Use blank lines to separate logical sections, making the template easier to navigate.

- Line length: Keep lines within 100–120 characters to improve readability across different screens and tools.

- Indentation: Consistent indentation clarifies the structure of loops, conditionals, and other blocks, reducing errors and confusion.
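Whitespace problems often come from indenting a triple-quoted template inside Python code. A minimal sketch, assuming `str.format`-style templates, that strips that indentation with `textwrap.dedent` and collapses extra blank lines; `clean_prompt` and `support_prompt` are hypothetical helpers.

```python
import re
import textwrap


def clean_prompt(raw: str) -> str:
    """Normalize whitespace in a template or rendered prompt."""
    text = textwrap.dedent(raw).strip()     # drop shared source indentation and outer blank lines
    text = re.sub(r"[ \t]+\n", "\n", text)  # remove trailing spaces at line ends
    text = re.sub(r"\n{3,}", "\n\n", text)  # collapse runs of blank lines to a single blank line
    return text


def support_prompt(customer_query: str) -> str:
    template = """
        You are a support assistant.


        Customer Query: {customer_query}
        """
    # Clean first, then format, so multi-line user input cannot disturb dedent
    return clean_prompt(template).format(customer_query=customer_query)


print(support_prompt("Where is my order?"))
```

Cleaning the template before calling `format()` matters: multi-line input inserted first would change the common indentation that `dedent` relies on.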

Advanced Template Syntax Features

Once you've got the basics down, advanced template syntax features can take your prompts to the next level. These tools let you create more sophisticated, production-ready templates that can handle complex tasks, scale efficiently, and adapt to various scenarios.

Template Inheritance and Modularity

Template inheritance allows you to build a hierarchy where child templates extend a shared base, reducing repetitive instructions and promoting a modular design approach. It works much like object-oriented programming principles.

Here’s how it works: you create a base template that contains common elements, then extend it with specific variations for different use cases. This ensures consistency across your templates while still allowing for customization.

For example, imagine you need consistent response styling across an application. Instead of duplicating the same instructions in every prompt, you can create a base_template.txt file with shared guidelines:

```jinja
- You are an AI assistant that helps to solve problems in various fields.
- Please answer using formal language.
- Use short and direct sentences.
{% block user_type %}
{% endblock %}
```

From there, you can create specialized templates that inherit from this base. For instance, a specific_template.txt might look like this:

```jinja
{% extends "base_template.txt" %}
{% block user_type %}
{# is_user_technical is expected as the string "True" or "False" #}
{% if is_user_technical == "True" %}
- The user is technical, so you can answer using technical terms.
{% else %}
- The user is non-technical, so please answer using non-technical terms.
{% endif %}
{% endblock %}
```

When rendered, the specific template automatically includes the core instructions from the base template. Tools like Jinja2 make this process seamless, enabling you to build complex template hierarchies that meet diverse application needs.
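Because the text compares inheritance to object-oriented programming, here is the same base/child relationship sketched with plain Python classes instead of Jinja2. `BasePrompt` and `AudienceAwarePrompt` are hypothetical names; each overridable method plays the role of a `{% block %}`.

```python
class BasePrompt:
    """Shared instructions every prompt inherits (mirrors base_template.txt)."""

    def core_rules(self) -> list:
        return [
            "- You are an AI assistant that helps to solve problems in various fields.",
            "- Please answer using formal language.",
            "- Use short and direct sentences.",
        ]

    def user_type(self) -> list:
        # Empty by default, like the empty {% block user_type %} in the base template
        return []

    def render(self) -> str:
        return "\n".join(self.core_rules() + self.user_type())


class AudienceAwarePrompt(BasePrompt):
    """Child prompt that overrides only the user_type 'block' (mirrors specific_template.txt)."""

    def __init__(self, is_user_technical: bool):
        self.is_user_technical = is_user_technical

    def user_type(self) -> list:
        if self.is_user_technical:
            return ["- The user is technical, so you can answer using technical terms."]
        return ["- The user is non-technical, so please answer using non-technical terms."]


print(AudienceAwarePrompt(is_user_technical=True).render())
```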

Next, let’s look at how dynamic context injection can make these templates even more adaptable.

Dynamic Context Injection

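Dynamic context injection allows templates to adjust in real time by incorporating data based on current conditions. In Python, built-ins such as locals() and dir(), along with an object's __dict__ attribute, simplify this process by letting code discover the available variables and attributes at runtime.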

For example, if you're working with datasets that have varying structures, Python's dir() function can help. A generate_data_analysis_prompt function could use dir() to identify the attributes of a dataset object and automatically include relevant ones in the template. This flexibility lets your templates handle different dataset structures without needing to know their composition beforehand.
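The body of `generate_data_analysis_prompt` is not given, so the version below is a hypothetical sketch of the idea: it uses `dir()` to discover an object's public, non-method attributes and lists them in the prompt. `SalesData` is an invented dataset type for the demo.

```python
from dataclasses import dataclass


def generate_data_analysis_prompt(dataset) -> str:
    """Build a prompt from whatever public, non-callable attributes the dataset exposes."""
    fields = []
    for name in dir(dataset):  # dir() returns attribute names in alphabetical order
        if name.startswith("_"):
            continue  # skip private and dunder attributes
        value = getattr(dataset, name)
        if callable(value):
            continue  # skip methods; only data attributes go into the prompt
        fields.append(f"- {name}: {value}")
    return "Analyze the dataset described below.\n" + "\n".join(fields)


@dataclass
class SalesData:  # hypothetical dataset type
    region: str
    rows: int
    columns: tuple

    def summary(self) -> str:
        return f"{self.rows} rows"


print(generate_data_analysis_prompt(SalesData("EMEA", 1200, ("date", "revenue"))))
```

Any other object with different attributes would produce a different field list without changing the function.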

A more advanced technique is mid-generation context switching. Yair Stern describes this method as follows:

"Dynamic context switching offers a more intelligent and efficient approach by pre-calculating and storing embeddings of static contexts like code files. Instead of sending everything at once, we dynamically inject the model's context mid-generation based on the generated tokens."

This approach uses callback functions to analyze the model's output during each generation step. Pre-calculated embeddings are injected as needed, ensuring the model gets the right context at the right time without overwhelming the initial prompt.
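The following toy sketch illustrates the callback pattern only; it is not Yair Stern's implementation. It swaps real embeddings for bag-of-words count vectors, replaces the model with a hard-coded token stream, and uses hypothetical names (`make_injection_callback`, `CONTEXTS`) and an arbitrary similarity threshold.

```python
import math
import re
from collections import Counter


def embed(text: str) -> Counter:
    """Toy 'embedding': a bag-of-words count vector, standing in for a real embedding model."""
    return Counter(re.findall(r"[a-z]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[w] * b[w] for w in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


# Pre-calculated once, before generation starts (the static contexts, e.g. code files)
CONTEXTS = {
    "auth.py": "def login(user, password): check password hash for user",
    "billing.py": "def charge(card, amount): create invoice and charge card",
}
CONTEXT_EMBEDDINGS = {name: embed(text) for name, text in CONTEXTS.items()}


def make_injection_callback(threshold: float = 0.25, window: int = 5):
    injected = set()
    recent = []

    def on_token(token: str) -> list:
        """Called after each generated token; returns the context names to inject now."""
        recent.append(token)
        query = embed(" ".join(recent[-window:]))  # compare only the most recent tokens
        to_inject = []
        for name, vec in CONTEXT_EMBEDDINGS.items():
            if name not in injected and cosine(query, vec) >= threshold:
                injected.add(name)  # inject each context at most once
                to_inject.append(name)
        return to_inject

    return on_token


# Simulated token stream standing in for a model's output
callback = make_injection_callback()
events = []
for token in "first we charge the card then".split():
    for name in callback(token):
        events.append((token, name))
print(events)
```

With a real model, the callback would run inside the generation loop and the matched context would be added to the model input before the next step.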

While dynamic templates are incredibly flexible, they also introduce the need for robust error handling, which we’ll discuss next.

Error Handling and Validation

To ensure your templates are reliable in production, strong error handling is a must. This includes validating inputs, enforcing type checks, and setting fallback defaults to maintain stable output.

Fallback mechanisms are key. They allow your application to keep functioning even when some context elements are missing. Templates should provide default values or alternative content paths when primary data sources fail.

Additionally, implementing logging can help you track errors, identify missing variables, and monitor performance for ongoing improvements.
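A minimal sketch of fallbacks plus logging, assuming `str.format`-style templates: missing variables that have a known default are filled in and logged as warnings, while missing variables without one are logged and raise an error. `DEFAULTS` and `render_with_fallbacks` are hypothetical.

```python
import logging
import string

logging.basicConfig(level=logging.WARNING)
logger = logging.getLogger("prompt_templates")

# Hypothetical fallback values used when a context element is missing
DEFAULTS = {
    "customer_name": "there",
    "order_status": "unavailable right now",
}


def render_with_fallbacks(template: str, context: dict) -> str:
    values = dict(context)
    for _, field, _, _ in string.Formatter().parse(template):
        if not field or values.get(field) not in (None, ""):
            continue  # literal text, or the variable is already present and non-empty
        if field in DEFAULTS:
            logger.warning("variable %r missing; using fallback %r", field, DEFAULTS[field])
            values[field] = DEFAULTS[field]
        else:
            # No safe default exists, so fail loudly instead of sending a broken prompt
            logger.error("variable %r missing and has no fallback", field)
            raise KeyError(field)
    return template.format(**values)


TEMPLATE = "Hi {customer_name}, your order status is: {order_status}."
print(render_with_fallbacks(TEMPLATE, {"order_status": "shipped"}))
```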

Schema validation is another critical step. It ensures that complex data structures, like API responses or user-generated content, meet expected formats before the template processes them. This is especially important when working with unpredictable or variable inputs.
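Dedicated libraries such as jsonschema or pydantic are the usual choice for this. The dependency-free sketch below only checks required keys and their Python types, which is enough to show the pattern: validate the data first, and render the template only when no problems are found. `ORDER_SCHEMA` and its fields are hypothetical.

```python
# Minimal schema: field name -> required Python type (hypothetical fields)
ORDER_SCHEMA = {"order_id": str, "items": list, "total": float}


def validate(data: dict, schema: dict) -> list:
    """Return a list of problems; an empty list means the data matches the schema."""
    errors = []
    for key, expected in schema.items():
        if key not in data:
            errors.append(f"missing field {key!r}")
        elif not isinstance(data[key], expected):
            errors.append(f"{key!r} should be {expected.__name__}, got {type(data[key]).__name__}")
    return errors


api_response = {"order_id": "A-17", "items": ["mug"], "total": "19.99"}
problems = validate(api_response, ORDER_SCHEMA)
if problems:
    print("Not rendering template:", problems)
```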

Best Practices for Production Templates

When moving from development to production, templates need to meet higher standards of reliability, scalability, and maintainability. What works in testing environments may falter under the weight of real-world demands, making it essential to adjust your approach. Production templates must be designed to handle diverse environments, heavy traffic, and collaboration across teams.

Ensuring Compatibility Across Platforms

Maintaining template integrity is non-negotiable when it comes to avoiding compatibility issues. Each fine-tuned LLM version relies on a specific chat template that dictates the expected input and output formats during interactions. Even minor deviations from this structure can significantly impact performance. When fine-tuning or implementing a model, preserving the original chat template's structure is critical.

For new implementations, the ChatML format is a solid starting point because it works well across various platforms. Additionally, avoiding vendor lock-in is a smart strategy - it allows you to switch LLM providers without needing to rewrite extensive portions of your codebase. Consistency in output formats is equally important to ensure they align with the model's expected parameters. It's also wise to establish clear guidelines for managing libraries and frameworks to sidestep common pitfalls like version conflicts, which can derail LLM-driven projects.
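ChatML wraps each message between `<|im_start|>` and `<|im_end|>` markers, with the role on the first line. A minimal rendering sketch follows; `to_chatml` is a hypothetical helper. When a model ships its own chat template, prefer that (for example via Hugging Face's `tokenizer.apply_chat_template`) over hand-built strings.

```python
def to_chatml(messages: list, add_generation_prompt: bool = True) -> str:
    """Render {"role": ..., "content": ...} messages in the ChatML layout."""
    parts = [f"<|im_start|>{m['role']}\n{m['content']}<|im_end|>\n" for m in messages]
    if add_generation_prompt:
        parts.append("<|im_start|>assistant\n")  # open an assistant turn for the model to complete
    return "".join(parts)


messages = [
    {"role": "system", "content": "You are an AI chatbot specializing in ecommerce customer service."},
    {"role": "user", "content": "Where is my order?"},
]
print(to_chatml(messages))
```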

Once compatibility is ensured, the focus should shift to optimizing performance for efficiency and speed.

Optimizing Performance and Efficiency

Performance optimization is all about reducing processing overhead while retaining functionality. Start by simplifying your template logic. Complex conditional statements and deeply nested loops can bog down rendering times, especially when dealing with large prompt batches. Keeping variable lookups and expressions simple can also cut down parsing time and make templates easier to maintain.

Caching is another powerful tool. Just as web developers use rel="preload" to load essential assets early, you can cache frequently used template components or variable sets to accelerate prompt generation. Pay attention to your templates' compilation times as well: a template that is recompiled on every request pays that cost each time, which caching the compiled form avoids.
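A minimal caching sketch using `functools.lru_cache`, assuming templates are identified by their source text. `string.Template` is cheap to construct, so the saving here is illustrative; the same pattern pays off more with engines that compile templates into code. `compile_template` and `render` are hypothetical helpers.

```python
import string
from functools import lru_cache


@lru_cache(maxsize=256)
def compile_template(source: str) -> string.Template:
    """Build the template object once; later calls with the same source hit the cache."""
    return string.Template(source)


def render(source: str, **values: str) -> str:
    return compile_template(source).substitute(**values)


SOURCE = "Customer's Location: $customer_location\nSelected Shipping Method: $shipping_method"
for location in ["Lisbon", "Osaka", "Denver"]:
    render(SOURCE, customer_location=location, shipping_method="Express")

print(compile_template.cache_info())  # one miss for the first call, hits for the other two
```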

"Technology is changing business, the workplace, and the workforce. With increasing demand to get things done fast to adapt to customer needs, businesses need to do whatever they can to reduce waste."

- David Singletary, Founder and CEO of DJS Digital consultancy [9]

Regular monitoring is essential. Track metrics like template compilation time, prompt generation speed, and memory usage to catch performance issues before they affect your production systems.
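A rough sketch of collecting two of these metrics with the standard library: `time.perf_counter` for render latency and `tracemalloc` for peak memory. The `measure` helper and its `runs` default are assumptions; in production you would export these numbers to your monitoring system rather than print them.

```python
import statistics
import time
import tracemalloc


def measure(render_fn, runs: int = 1000) -> dict:
    """Time render_fn over several runs, then record peak memory for one traced run."""
    timings = []
    for _ in range(runs):
        start = time.perf_counter()
        render_fn()
        timings.append(time.perf_counter() - start)

    # Trace memory in a separate pass, since tracemalloc slows execution down
    tracemalloc.start()
    render_fn()
    _, peak = tracemalloc.get_traced_memory()
    tracemalloc.stop()

    timings.sort()
    return {
        "median_ms": statistics.median(timings) * 1000,
        "p95_ms": timings[int(0.95 * (len(timings) - 1))] * 1000,
        "peak_kib": peak / 1024,
    }


template = "Customer Query: {customer_query}"
stats = measure(lambda: template.format(customer_query="Where is my order?"))
print({k: round(v, 4) for k, v in stats.items()})
```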

Maintaining Collaboration and Version Control

Once your templates are running efficiently, collaboration becomes the cornerstone of sustainable development. Structured commit messages can significantly reduce misunderstandings within teams - by as much as 30%. Make it a habit to document all template changes clearly, including details about modified variables, added functionality, and any breaking changes.

Pull requests are another critical tool for collaboration. Organizations using standardized pull request templates see approvals happen 40% faster. These templates should prompt contributors to explain their changes, test their templates, and document any new dependencies, fostering a smoother review process.

Branching strategies are a lifesaver for managing multiple template versions, feature updates, and client-specific customizations. They allow parallel development without disrupting the main template library.

Comprehensive documentation is equally vital. A well-documented codebase can boost developer productivity by 55%. Include details like a template's purpose, required variables, expected outputs, and practical examples. Store this documentation alongside your templates in version control for easy access.

Regular team communication is another key to success. Teams that hold weekly check-ins see a 25% productivity increase. These meetings can address template performance, upcoming changes, and compatibility issues before they escalate.

Role-based permissions add an extra layer of control. Assign modification rights only to team members who need them, while others can be given read-only access. This prevents unauthorized changes to critical production templates.

"Good commit messages are a gift to your future self."

- Software Engineering Guy!!

Finally, keep an eye on version control activity to quickly identify and address security concerns. This is especially important when templates include sensitive prompt engineering techniques or proprietary business logic that could provide a competitive edge.

Latitude's Approach to Template Syntax

Latitude tackles the challenges of building production-ready LLM features by offering tools that enable dependable and collaborative workflows for template creation.

Features for Template Validation

Latitude provides a framework to evaluate prompt performance using three methods: LLM-as-Judge, Programmatic Rules, and Human-in-the-Loop. These evaluations can be set up for individual prompts and run either manually during development or automatically on incoming logs. This process helps measure quality, uncover weaknesses, and guide improvements.

Collaborative Development Workflows

Latitude's workspace is designed to make prompt design and versioning a collaborative effort among developers, product managers, and domain experts. At the heart of this setup is PromptL, a templating language that simplifies the process for non-technical team members while still offering advanced features for engineers.

These tools are tightly integrated with a version control system, ensuring templates evolve in a reliable and traceable manner.

Version Control and Community Support

As templates become more intricate, version control becomes a necessity. Latitude addresses this with PromptHub, a Git-like versioning system that uses SHA hashes. This system allows teams to track changes, follow template evolution, and quickly roll back to stable versions when needed.
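To make hash-identified versions concrete, here is a hypothetical in-memory sketch in which each template version is keyed by the SHA-256 of its text and a rollback makes an earlier hash current again. It is an illustration of the idea, not how Latitude's versioning is actually implemented.

```python
import hashlib


class TemplateHistory:
    """Hypothetical append-only history that identifies each version by a SHA-256 hash."""

    def __init__(self):
        self.versions = {}  # hash -> template text
        self.log = []       # hashes in commit order; the last one is current

    def commit(self, text: str) -> str:
        digest = hashlib.sha256(text.encode("utf-8")).hexdigest()
        self.versions.setdefault(digest, text)  # identical text always maps to the same hash
        self.log.append(digest)
        return digest

    def rollback(self, digest: str) -> str:
        """Make an earlier version current again by appending its hash to the log."""
        text = self.versions[digest]
        self.log.append(digest)
        return text

    @property
    def current(self) -> str:
        return self.versions[self.log[-1]]


history = TemplateHistory()
v1 = history.commit("Customer Query: {customer_query}")
v2 = history.commit("Please respond politely.\nCustomer Query: {customer_query}")
history.rollback(v1)
print(v1[:12], history.current)
```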

"Version control protects source code from both catastrophe and the casual degradation of human error and unintended consequences." – Atlassian

Latitude also fosters a strong community through its open-source platform. Users can access detailed documentation, contribute to a GitHub repository, and participate in an active Slack community. These resources promote knowledge sharing and continuous growth in the field of prompt engineering.

Conclusion and Key Takeaways

Recap of Template Syntax Basics

Template syntax transforms static prompts into flexible, reusable tools by swapping placeholders with values at runtime. The key principles include designing clear variable structures, using proper control flow, and ensuring consistent formatting. Advanced features enhance flexibility and reliability, making it easier to handle a range of scenarios. Understanding these basics is crucial for building scalable and efficient AI-driven interactions.

How Latitude Simplifies Prompt Engineering

A platform like Latitude takes these principles and makes them easier to implement in real-world projects. Its PromptL templating language offers advanced features tailored to meet the needs of engineers working on complex systems.

Latitude combines essential tools like evaluation frameworks, version control, and collaboration features into one cohesive platform. This ensures production-level reliability with robust version tracking and traceability.

What sets Latitude apart is its collaborative workspace, which allows technical and non-technical team members to work together seamlessly. Domain experts can actively contribute to prompt development without needing extensive technical expertise.

Next Steps for Mastery

Now that you’ve got a strong foundation in template syntax, it’s time to put these concepts into action. Start by applying the basics: write clear instructions, separate static details from variables, and refine your prompts based on outcomes.

Experiment with different prompt formats to see what works best. For example, research indicates a 42% improvement in accuracy when using JSON instead of Markdown with certain models. Testing and comparing formats can lead to significant performance gains.
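One way to start such a comparison is to render the same data in both formats and run each variant through the same evaluation. The order record and helpers below are hypothetical, and the sketch only builds the prompts; it makes no claim about which format will perform better for your model.

```python
import json

# Hypothetical order record to embed in the prompt
order = {"order_id": "A-17", "status": "shipped", "items": ["mug", "tea"]}


def as_json(data: dict) -> str:
    return json.dumps(data, indent=2)


def as_markdown(data: dict) -> str:
    lines = []
    for key, value in data.items():
        if isinstance(value, list):
            value = ", ".join(value)  # flatten lists into a comma-separated string
        lines.append(f"- **{key}**: {value}")
    return "\n".join(lines)


INSTRUCTION = "Summarize the order below for the customer.\n\n"
variants = {
    "json": INSTRUCTION + as_json(order),
    "markdown": INSTRUCTION + as_markdown(order),
}
for name, prompt in variants.items():
    print(f"--- {name} ---\n{prompt}\n")
```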

If you’re working with a team or creating production-level applications, consider using a platform like Latitude. Its collaborative tools, version control, and evaluation features can save time and minimize errors during development.

Finally, keep evolving your approach. Stay connected with the prompt engineering community, try out new techniques, and measure how your changes impact performance. Consistent iteration and learning will help you stay ahead in this rapidly changing field.

FAQs

How does using template syntax make creating AI prompts more efficient?

Template syntax makes creating AI prompts faster and more efficient by providing a clear, reusable structure. Instead of starting from scratch every time, templates let you set up consistent formats that can be customized with specific variables. This approach not only saves time but also ensures that prompts remain clear and consistent across different scenarios.

Using templates, you can easily adjust prompts to fit various contexts while preserving their original purpose. This method simplifies the process of managing and updating prompts, allowing you to focus more on improving AI outputs. Templates also help streamline workflows, reduce mistakes, and improve teamwork by keeping everyone on the same page.

What advanced template syntax features can make AI prompts more flexible and reliable?

Advanced template syntax features can make AI prompts much more versatile and dependable, and they pair well with structured prompting techniques. For instance, Chain of Thought (CoT) prompting breaks complex tasks into smaller, logical steps. This structured approach helps the AI generate responses that are clear and well organized, improving its ability to solve problems and reason through challenges.

Another handy tool is conditional logic within templates. Using elements like if statements or loops allows prompts to adapt dynamically based on different inputs. This flexibility ensures that the AI can tailor its responses to specific user needs, delivering more accurate and relevant results.

By weaving these techniques into your prompts, you can design smarter and more efficient interactions, ultimately getting the most out of your AI models.

How does control flow in template syntax make AI prompts more adaptable to different situations?

Control flow in template syntax enhances AI prompts by allowing dynamic adjustments tailored to user input or specific contexts. This makes it possible to craft prompts that align with various scenarios, ensuring precise and context-aware AI responses across different applications.

With structured templates and features like conditional logic, developers can steer AI to produce outputs customized to the provided data. This approach proves especially useful in scenarios requiring context-driven interactions, such as customer service or personalized suggestions. Template syntaxes such as Mustache or Jinja further simplify the process by enabling reusable and flexible prompt structures, making it easier to manage a wide range of inputs effectively.