Top Features to Look for in Real-Time Prompt Validation Tools

Explore essential features for real-time prompt validation tools that enhance interactions with large language models and streamline workflows.

Real-time prompt validation tools are essential for improving interactions with Large Language Models (LLMs). They provide instant feedback, ensure prompt accuracy, and streamline workflows. Here’s what to look for:

- Real-Time Feedback: Tools like PromptPerfect offer immediate suggestions to refine prompts.

- Multi-Model Support: Compatibility with various LLMs, as seen in PromptLayer, is crucial.

- Testing Tools: A/B testing, accuracy metrics, and debugging features ensure reliability.

- Collaboration: Platforms like Latitude and LangSmith enable teamwork with shared workspaces and version control.

- Cost Management: Tools like PromptLayer help monitor and optimize API usage.

- Data Integration: Features for structured data extraction and workflow automation, as offered by Mirascope.

Quick Comparison

| Feature | Latitude | PromptLayer | LangSmith | PromptFlow | PromptPerfect |
|---|---|---|---|---|---|
| Real-Time Feedback | ✓ | ✓ | ✓ | ✓ | ✓ |
| Multi-Model Support | ✓ | ✓ | ✓ | ✓ | ✓ |
| Collaboration Features | ✓ | ✓ | ✓ | ✓ | ✗ |
| Testing Tools | ✓ | ✓ | ✓ | ✓ | ✓ |
| Cost Management | ✗ | ✓ | ✓ | ✓ | ✗ |
| Data Integration | ✓ | ✓ | ✓ | ✓ | ✗ |

These tools help teams refine prompts, improve AI outputs, and manage workflows efficiently. Choose based on your specific needs.

Must-Have Features in Prompt Validation Tools

When choosing prompt validation tools for real-time use, certain features set the best options apart from the rest. Knowing these key capabilities can help teams find tools that fit their development goals and workflows.

Real-Time Feedback Systems

Validation tools should deliver instant feedback to refine prompts. For example, PromptPerfect offers immediate suggestions to improve accuracy and output quality. Along with feedback, tools need to support a variety of models to handle different project requirements.
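
As a rough illustration of what instant feedback can mean in practice, the sketch below runs a few cheap local checks before any model call is made. The `Finding` type, the `validate_prompt` function, the `{{name}}` placeholder syntax, and the thresholds are all assumptions made for this example; this is not PromptPerfect's API.

```python
import re
from dataclasses import dataclass


@dataclass
class Finding:
    severity: str  # "error" or "warning"
    message: str


def validate_prompt(prompt: str, max_chars: int = 4000) -> list[Finding]:
    """Run cheap local checks that are fast enough to execute on every edit."""
    findings: list[Finding] = []
    if not prompt.strip():
        return [Finding("error", "Prompt is empty.")]
    if len(prompt) > max_chars:
        findings.append(Finding("error", f"Prompt exceeds {max_chars} characters."))
    # Flag template placeholders such as {{name}} that were never filled in.
    for name in re.findall(r"\{\{\s*(\w+)\s*\}\}", prompt):
        findings.append(Finding("error", f"Unfilled placeholder: {name}"))
    # Hypothetical heuristic: very short prompts often lack context.
    if len(prompt.split()) < 5:
        findings.append(Finding("warning", "Prompt is very short; consider adding context."))
    return findings


for finding in validate_prompt("Summarize {{document}} in three bullet points for executives"):
    print(finding.severity, finding.message)
```

Because checks like these need no network round trip, an editor could run them on every keystroke and reserve slower model-based suggestions for when the user pauses.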

Support for Multiple Models

A good tool should work with various Large Language Models (LLMs). PromptLayer, for instance, integrates with multiple LLM providers, giving teams the flexibility needed for diverse AI tasks. Once compatibility is in place, robust testing features become equally important.

Beyond model support, the following capabilities are worth checking in any validation tool:

| Feature | Purpose | Impact on Development |
|---|---|---|
| Prompt Sanitization | Ensures inputs are clean and error-free | Reduces mistakes and increases consistency |
| Error Handling | Identifies and manages prompt issues | Improves reliability and prevents failures |
| Version Control | Tracks changes to prompts over time | Simplifies updates and rollbacks |
| Analytics Dashboard | Tracks performance metrics | Helps optimize through actionable insights |
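
The first two rows of the table can be sketched in a few lines. The example below is a minimal illustration, assuming a hypothetical `sanitize_prompt` helper and `PromptError` exception; the specific cleanup rules and the character limit are choices made for the example, not a standard.

```python
import re
import unicodedata


class PromptError(ValueError):
    """Raised when a prompt cannot be cleaned into something safe to send."""


def sanitize_prompt(raw: str, max_chars: int = 4000) -> str:
    # Normalize Unicode so visually identical prompts compare equal.
    text = unicodedata.normalize("NFKC", raw)
    # Drop control characters, keeping only newlines and tabs.
    text = "".join(
        ch for ch in text if ch in "\n\t" or unicodedata.category(ch) != "Cc"
    )
    # Collapse runs of spaces and tabs into one space, then trim each line.
    text = re.sub(r"[ \t]+", " ", text)
    text = "\n".join(line.strip() for line in text.splitlines()).strip()
    if not text:
        raise PromptError("prompt is empty after sanitization")
    if len(text) > max_chars:
        raise PromptError(f"prompt is {len(text)} characters; limit is {max_chars}")
    return text
```

Raising a dedicated exception type lets calling code separate prompt problems from network or provider failures and report each differently.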

Testing and Evaluation Tools

Testing tools should include features like A/B testing to compare prompts, pipelines to assess different scenarios, and metrics to measure accuracy, speed, and costs. These tools ensure prompts are fine-tuned for real-world use. Strong testing capabilities also support better teamwork.
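
To make the A/B comparison concrete, here is a small sketch that summarizes recorded runs for two prompt variants and picks one. The `Run` record, the `min_gain` threshold, and the tie-breaking rule (accuracy first, then cost) are assumptions for illustration. A production comparison would also need enough samples and a significance test, which this sketch leaves out.

```python
from dataclasses import dataclass
from statistics import mean


@dataclass
class Run:
    correct: bool      # did the output pass the check for this test case?
    latency_s: float   # wall-clock time for the model call
    cost_usd: float    # cost of the call


def summarize(runs: list[Run]) -> dict[str, float]:
    return {
        "accuracy": mean(1.0 if r.correct else 0.0 for r in runs),
        "avg_latency_s": mean(r.latency_s for r in runs),
        "total_cost_usd": sum(r.cost_usd for r in runs),
    }


def pick_winner(a: list[Run], b: list[Run], min_gain: float = 0.05) -> str:
    """Return "A", "B", or "tie": accuracy decides first, then total cost."""
    sa, sb = summarize(a), summarize(b)
    diff = sb["accuracy"] - sa["accuracy"]
    if abs(diff) >= min_gain:
        return "B" if diff > 0 else "A"
    if sa["total_cost_usd"] == sb["total_cost_usd"]:
        return "tie"
    return "A" if sa["total_cost_usd"] < sb["total_cost_usd"] else "B"
```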

Collaboration Features

Latitude, for instance, allows domain experts and engineers to work together on prompt management. Collaboration tools are crucial for scaling AI workflows and keeping prompts consistent across teams.

"Getting user feedback is very useful for understanding practical challenges, but can be time-consuming and subjective." - Author Unknown

Data Extraction and Integration

Validation tools should support structured data extraction for easy integration with existing systems. Mirascope, for example, uses automatic data validation to simplify integration and automate workflows.
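
The idea behind validated extraction can be shown with the standard library alone. The sketch below assumes a model has been asked to return a JSON object describing an invoice; the `Invoice` fields and the `parse_invoice` function are hypothetical, and Mirascope's own API is not shown here.

```python
import json
from dataclasses import dataclass


@dataclass
class Invoice:
    vendor: str
    total: float
    currency: str


def parse_invoice(model_output: str) -> Invoice:
    """Parse and validate a JSON object returned by a model."""
    try:
        data = json.loads(model_output)
    except json.JSONDecodeError as exc:
        raise ValueError(f"output is not valid JSON: {exc.msg}") from exc
    if not isinstance(data, dict):
        raise ValueError("expected a JSON object")
    missing = {"vendor", "total", "currency"} - data.keys()
    if missing:
        raise ValueError(f"missing fields: {sorted(missing)}")
    if not isinstance(data["vendor"], str) or not isinstance(data["currency"], str):
        raise ValueError("vendor and currency must be strings")
    # bool is a subclass of int in Python, so reject it explicitly.
    if isinstance(data["total"], bool) or not isinstance(data["total"], (int, float)):
        raise ValueError("total must be a number")
    return Invoice(data["vendor"], float(data["total"]), data["currency"].upper())
```

Failing loudly at this boundary keeps malformed model output from reaching downstream systems, and the error message can be fed back into a retry prompt.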

Cost Management

Good tools also help teams monitor and control API usage. PromptLayer’s analytics make it easier to track spending and find ways to improve efficiency.
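
A simple version of this kind of tracking is sketched below. The model names and per-token prices are placeholders, not real provider rates, and `CostTracker` is a hypothetical class rather than anything from PromptLayer.

```python
from collections import defaultdict
from dataclasses import dataclass, field

# Hypothetical prices in USD per 1,000 tokens; replace with your provider's rates.
PRICES_PER_1K = {
    "model-small": {"input": 0.0005, "output": 0.0015},
    "model-large": {"input": 0.01, "output": 0.03},
}


@dataclass
class CostTracker:
    budget_usd: float
    spend_by_prompt: dict = field(default_factory=lambda: defaultdict(float))

    def record(self, prompt_id: str, model: str, input_tokens: int, output_tokens: int) -> float:
        """Add one call's cost to the running total for its prompt."""
        price = PRICES_PER_1K[model]
        cost = (input_tokens * price["input"] + output_tokens * price["output"]) / 1000
        self.spend_by_prompt[prompt_id] += cost
        return cost

    @property
    def total_usd(self) -> float:
        return sum(self.spend_by_prompt.values())

    def over_budget(self) -> bool:
        return self.total_usd > self.budget_usd
```

Grouping spend by prompt rather than by request makes it easier to see which prompt versions are driving costs.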

These features have already shown success in real-world use. For example, Kern AI Refinery has demonstrated how combining feedback loops with collaboration tools can improve team workflows, leading to more accurate and efficient AI interactions.

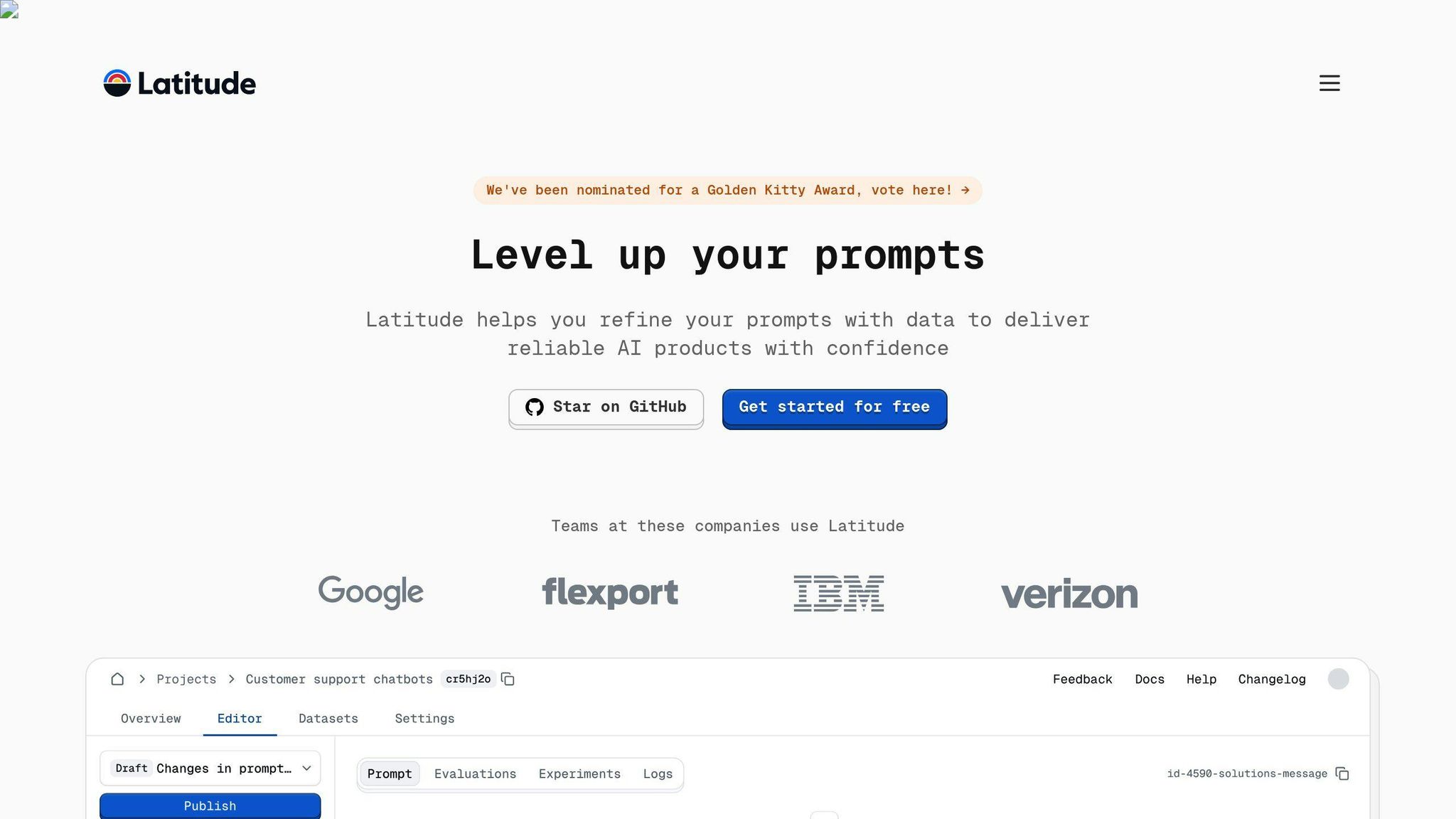

1. Latitude

Latitude is an open-source platform designed to simplify real-time prompt validation. Its framework bridges the technical aspects of implementation with the practical insights of domain experts.

Real-Time Collaboration

Latitude creates a shared workspace where teams can identify and fix issues quickly, ensuring accuracy from the start.

Flexible Integration

Thanks to its open-source nature, Latitude integrates smoothly with a variety of large language model (LLM) platforms.

Here’s a breakdown of its key validation features:

| Capability Type | Features | Development Impact |
|---|---|---|
| Prompt Development | Real-time testing tools | Speeds up iteration cycles |
| Quality Assurance | Continuous validation tools | Maintains prompt accuracy |
| Model Compatibility | Multi-LLM support | Broadens usability |
| Version Management | Change tracking system | Reduces risk of regressions |

Preventing Performance Drift

Latitude continuously monitors prompts, helping detect and address potential issues before they affect performance. This ensures consistent, high-quality results even in dynamic AI environments.
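
One common way to detect drift, shown here only as a sketch, is to compare a rolling average of recent quality scores against a baseline. The `DriftMonitor` class, the window size, and the tolerance are assumptions for illustration and do not describe how Latitude implements its monitoring.

```python
from collections import deque
from statistics import mean


class DriftMonitor:
    def __init__(self, baseline: float, window: int = 20, tolerance: float = 0.1):
        self.baseline = baseline
        self.tolerance = tolerance
        self.window = window
        # A bounded deque drops the oldest score once the window is full.
        self.scores = deque(maxlen=window)

    def add(self, score: float) -> bool:
        """Record a score; return True if the full window has drifted below baseline."""
        self.scores.append(score)
        if len(self.scores) < self.window:
            return False  # not enough data yet
        return mean(self.scores) < self.baseline - self.tolerance
```

Waiting for a full window before alerting trades detection speed for fewer false alarms from a single bad response.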

Resources and Community Support

The platform includes detailed documentation and active community resources. Teams can leverage guides, examples, and shared strategies available through GitHub and Slack to improve their prompt validation processes.

"Getting user feedback is crucial for understanding practical challenges in prompt engineering. Latitude's collaborative approach helps bridge the gap between technical implementation and domain expertise, making the validation process more efficient and effective." - From Latitude's technical documentation

Built for Large-Scale Use

Latitude is equipped with advanced tools for large-scale applications, offering features like load testing and performance monitoring to ensure prompt reliability under demanding conditions.

This platform showcases how open-source solutions can combine collaboration, adaptability, and robust validation to address the challenges of real-time prompt engineering.

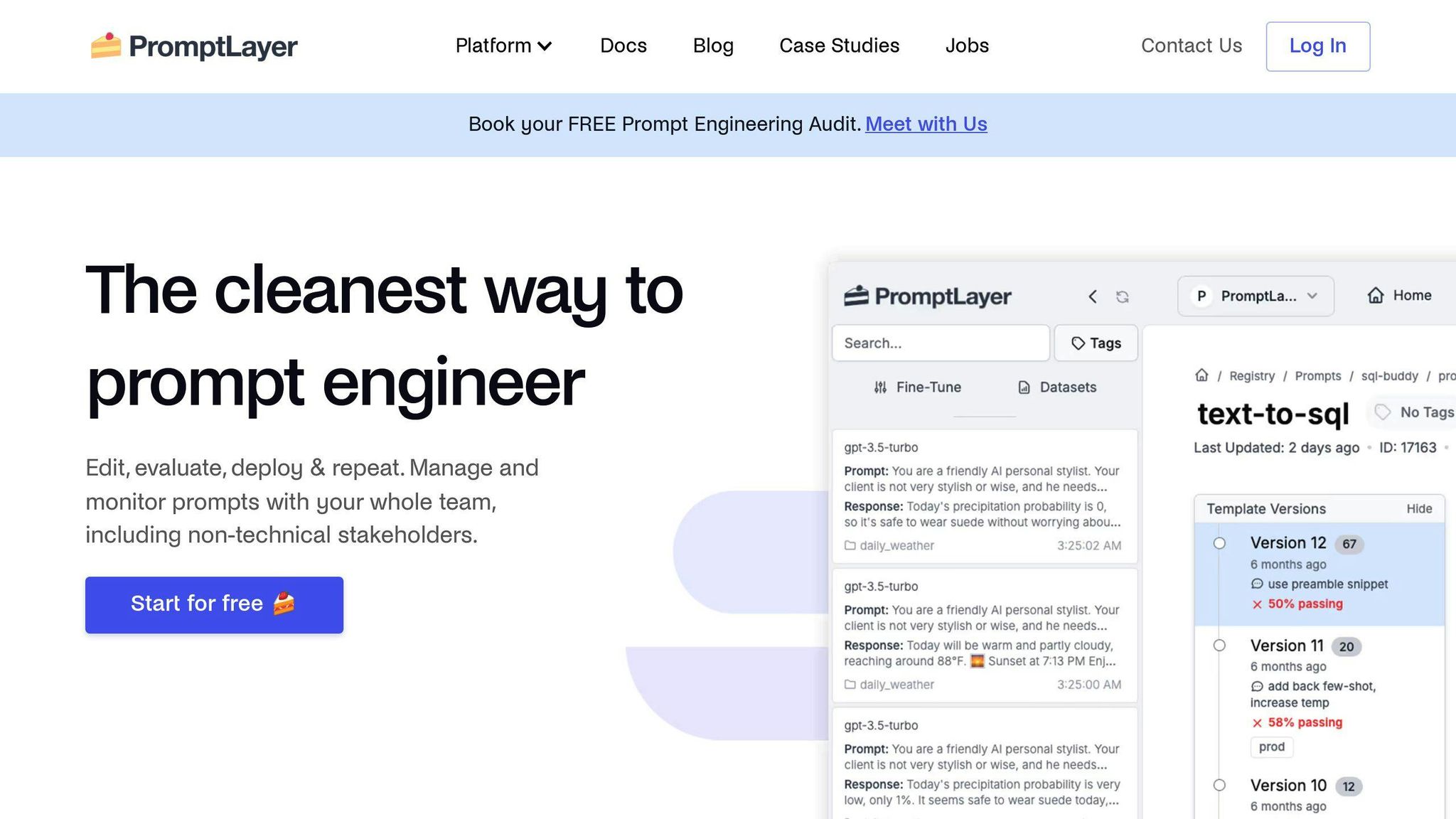

2. PromptLayer

PromptLayer is a middleware platform that validates prompts in real time, with tools to track, manage, and refine interactions with large language models (LLMs). It is known for easy integration and strong validation capabilities.

Visual Prompt Management

With its user-friendly interface, PromptLayer lets teams create, test, and deploy prompts without needing to write code. It also includes version control, making it easier to iterate and roll out new prompt variations quickly, cutting down development time significantly.

Performance Tracking

PromptLayer monitors usage, latency, and execution logs, delivering practical insights to improve performance and reliability. For example, Ellipsis reduced debugging time by 75% while scaling operations, highlighting how effective PromptLayer can be in managing LLM workflows.

Team Collaboration

The platform allows non-technical team members to manage prompts independently, freeing up engineering resources. This feature is especially helpful for organizations expanding their AI capabilities.

"It would be impossible to do this in a SAFE way without PromptLayer." - Victor Duprez, Director of Engineering at Gorgias

Testing and Integration

PromptLayer supports A/B testing, regression testing, and evaluations across different models to ensure prompts perform well in various scenarios. It integrates easily with existing LLM setups, requiring minimal effort to get started while maintaining smooth operations.

With these features, PromptLayer is a valuable tool for teams aiming to streamline their prompt workflows, paving the way for other tools like LangSmith.

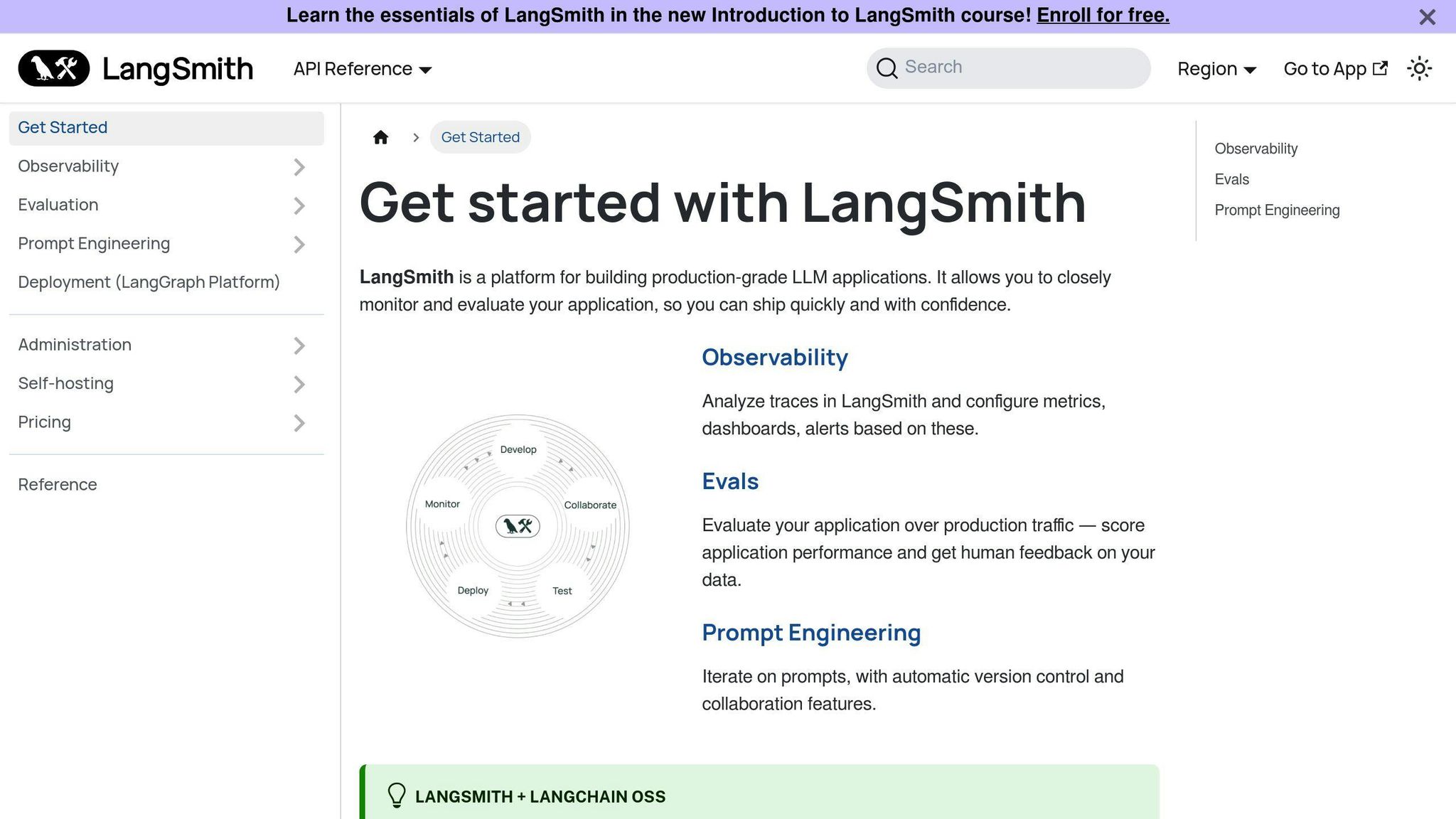

3. LangSmith

LangSmith is a platform built to handle real-time prompt validation with a focus on production-level quality. It’s packed with features that tackle the complexities of validating prompts in dynamic AI settings.

The platform’s evaluation framework allows teams to create custom evaluators for their specific needs, making it easy to test prompts systematically using golden datasets. It also tracks key metrics such as token usage, latency, error rates, and output quality, helping users thoroughly validate their AI models.
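
The pattern of running custom evaluators over a golden dataset can be sketched without any SDK. In the example below, `run_evaluation`, the two evaluator functions, and the dataset shape are hypothetical; this is not the LangSmith client API, only an illustration of the idea.

```python
from typing import Callable

# An evaluator maps (model output, expected answer) to a score in [0, 1].
Evaluator = Callable[[str, str], float]


def exact_match(output: str, expected: str) -> float:
    return 1.0 if output.strip().lower() == expected.strip().lower() else 0.0


def contains_expected(output: str, expected: str) -> float:
    return 1.0 if expected.lower() in output.lower() else 0.0


def run_evaluation(
    generate: Callable[[str], str],
    golden: list[dict[str, str]],
    evaluators: dict[str, Evaluator],
) -> dict[str, float]:
    """Return the average score per evaluator across the golden dataset."""
    if not golden:
        raise ValueError("golden dataset is empty")
    totals = {name: 0.0 for name in evaluators}
    for example in golden:
        output = generate(example["input"])
        for name, evaluator in evaluators.items():
            totals[name] += evaluator(output, example["expected"])
    return {name: total / len(golden) for name, total in totals.items()}
```

Here `generate` stands in for whatever function calls the model, so the same dataset and evaluators can be reused across prompt versions or providers.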

Collaboration Tools

LangSmith Hub offers tools like version control, annotation queues, and sharing options to streamline teamwork:

| Feature | Purpose |
|---|---|
| Version Control | Keep track of prompt changes over time |
| Annotation Queues | Facilitate human feedback and labeling |
| Sharing Options | Simplify collaboration with stakeholders |
| Dataset Management | Aid in evaluation and fine-tuning |

Debugging and Transparency

A standout feature of LangSmith is its detailed tracing functionality. This provides full visibility into the sequence of calls, making it easier to identify errors or bottlenecks.
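
To show what a trace of nested calls captures, here is a minimal sketch using a decorator. The `traced` decorator and the module-level `TRACE` list are hypothetical names for this example and are not part of LangSmith's SDK. The sketch is single-threaded only.

```python
import functools
import time

TRACE: list[dict] = []  # one record per call, in the order calls started
_depth = 0              # current nesting level (not thread-safe)


def traced(fn):
    """Record name, nesting depth, duration, and any error for each call."""
    @functools.wraps(fn)
    def wrapper(*args, **kwargs):
        global _depth
        record = {"name": fn.__name__, "depth": _depth, "error": None}
        TRACE.append(record)
        _depth += 1
        start = time.perf_counter()
        try:
            return fn(*args, **kwargs)
        except Exception as exc:
            record["error"] = repr(exc)
            raise
        finally:
            record["seconds"] = time.perf_counter() - start
            _depth -= 1
    return wrapper
```

Decorating each step of a pipeline (retrieval, prompt construction, model call) produces an ordered list of records in which slow or failing steps stand out by their `seconds` and `error` fields.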

"LangSmith helps take language models from prototype to production by offering a comprehensive suite of tools and features designed to enhance their capabilities." - Neil, Research Professional

Custom Evaluation Metrics

LangSmith allows teams to assess prompts based on factors like coherence, relevance, hallucinations, and other custom metrics. Its tools are built for scalability, ensuring high-quality validation at every stage.

With its powerful features, LangSmith sets a solid standard for prompt validation, laying the groundwork for tools like PromptFlow that focus on efficiency and automation.

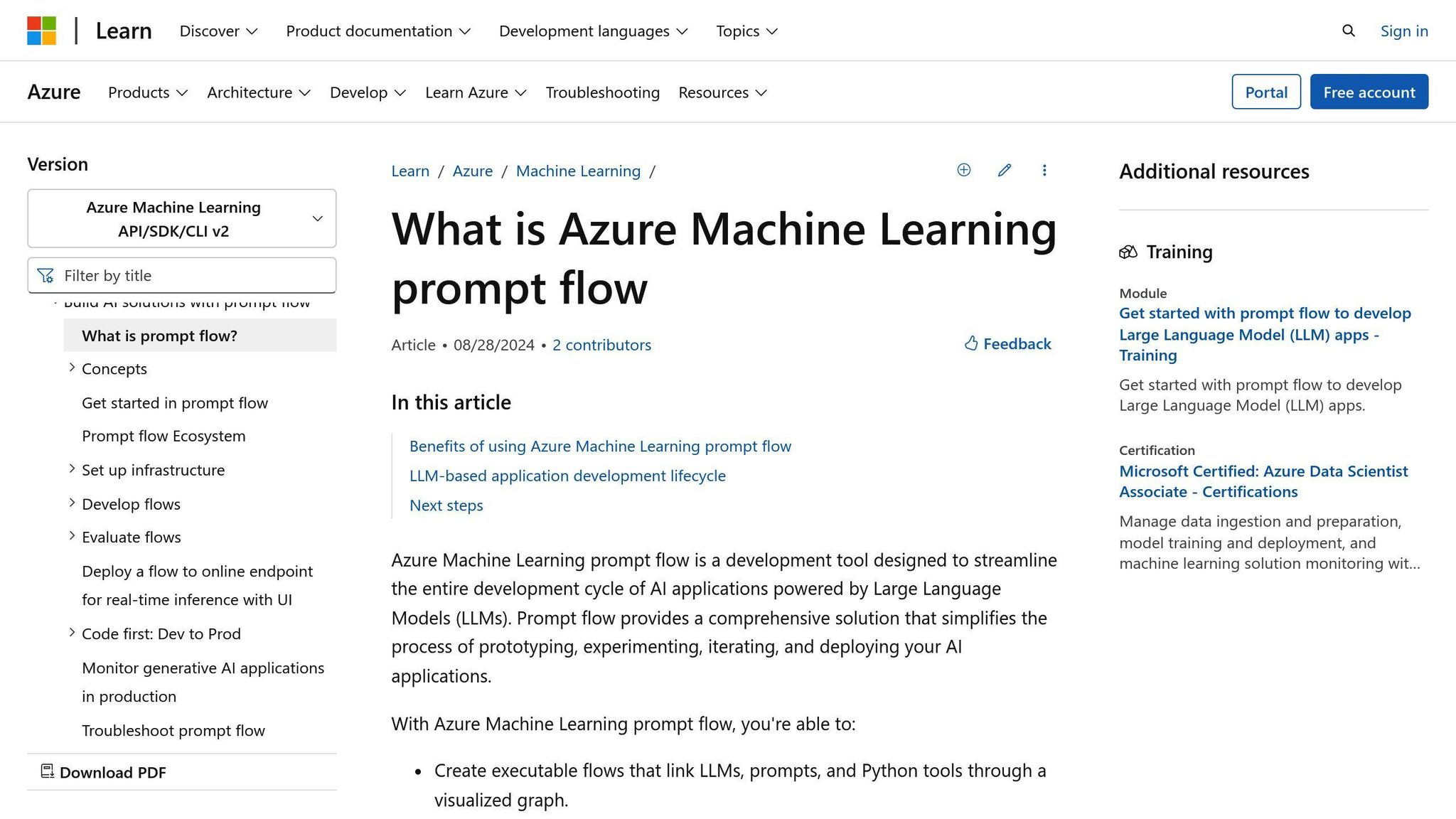

4. PromptFlow

Microsoft's PromptFlow, part of the Azure ML services, provides tools for real-time prompt testing and management. Its visual interface simplifies even complex prompt engineering workflows.

Key Features and Interface

PromptFlow offers both a node-based Flow view and a Graph view, giving users a clear, visual way to manage their workflows. It supports three distinct flow types:

| Flow Type | Purpose |
|---|---|
| Standard Flow | For basic prompt testing and Python integration |
| Chat Flow | Designed for managing conversational AI workflows |
| Evaluation Flow | Focused on testing quality and accuracy metrics |

These flow types help users refine prompts efficiently, leading to better outputs in less time.

Testing, Deployment, and Collaboration

PromptFlow supports thorough dataset testing with automated quality metrics, making it adaptable to various business requirements. It integrates seamlessly with Azure ML endpoints, enabling real-time inference, performance tracking, and efficient resource usage.

For team collaboration, the cloud-based version of Azure AI offers shared workspaces and version control. This ensures smooth teamwork while maintaining high security and governance standards.

"Prompt Flow has streamlined prompt engineering workflows by providing a unified, data-driven, and collaborative platform that streamlines the development, testing, and deployment of LLM-based AI applications." - Amit Puri, Author

Practical Applications

One example of PromptFlow's capabilities is its Web Classification sample flow, which can classify a URL like "https://www.imdb.com" as "Movie." This makes it useful for tasks like content categorization in digital marketing.

PromptFlow's features make it a solid choice for teams wanting an easy-to-use, enterprise-level solution. It also sets the stage for tools like PromptPerfect, which specialize in further optimization.

5. PromptPerfect

PromptPerfect is a tool designed to simplify prompt engineering for various AI models. It helps teams fine-tune their prompts quickly and effectively, thanks to its advanced validation features.

Key Features and Testing

PromptPerfect uses a machine learning-powered engine to analyze and improve prompts in less than 10 seconds. This allows for quick testing and adjustments. It supports text, image, and multimodal prompts for top models like ChatGPT, GPT-4, and DALL-E 2. With its ability to optimize prompts for specific goals, it ensures high-quality outputs tailored to different needs.

The platform also provides tools for comparing model outputs side by side, reverse-engineering successful prompts, and creating reusable templates with adjustable parameters. These features are especially useful for teams working on refining and validating their prompts.
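
Reusable templates with adjustable parameters are easy to prototype with Python's built-in `string.Template`. The `PromptTemplate` wrapper below is a hypothetical helper written for this example, not PromptPerfect's template format.

```python
from string import Template
from typing import Optional


class PromptTemplate:
    def __init__(self, text: str, defaults: Optional[dict] = None):
        self.template = Template(text)  # uses $name placeholders
        self.defaults = defaults or {}

    def render(self, **params: str) -> str:
        """Fill placeholders; explicit params override the defaults."""
        values = {**self.defaults, **params}
        try:
            return self.template.substitute(values)
        except KeyError as exc:
            raise ValueError(f"missing template parameter: {exc.args[0]}") from exc


summary = PromptTemplate(
    "Summarize the $doc_type below in a $tone tone:\n$text",
    defaults={"tone": "neutral"},
)
print(summary.render(doc_type="report", text="Q3 revenue grew 12%."))
```

Using `substitute` rather than `safe_substitute` means a missing parameter fails immediately instead of sending a prompt with a literal `$text` in it.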

Feedback and Integration

PromptPerfect includes a feedback system that offers instant insights into how well prompts perform, making it suitable for both beginners and experienced users. It’s available as a web interface and via API, which allows for bulk processing or integration into larger enterprise systems.

"If you think of prompts as the API for LLMs and image generators, then PromptPerfect is the IDE for prompts."

While PromptPerfect specializes in prompt optimization, it doesn’t offer broader AI lifecycle management tools like some competitors. Instead, it complements these tools by focusing on precise prompt refinement, helping teams streamline their workflows.

Conclusion

Real-time prompt validation tools are becoming an essential part of AI workflows, with their capabilities advancing to meet growing demands. Selecting the right tool requires a clear understanding of your workflow and the features that matter most to your team.

Here are some key features to keep in mind when evaluating prompt validation tools:

| Feature Category | Key Considerations | Example Tools |
|---|---|---|
| Prompt Management & Testing | Version control, real-time feedback, A/B testing | PromptLayer, PromptPerfect |
| Collaboration | Team workflows, sharing capabilities | Latitude, PromptFlow |

These examples highlight the range of options available, so it's important to focus on tools that best support your specific objectives. Features like logging, dataset export, and performance tracking are crucial for effective prompt engineering. For larger operations, advanced monitoring and analysis tools can provide the insights needed to scale AI systems effectively.

Additionally, open-source platforms like Latitude offer customization and scalability, making them ideal for teams focused on building production-grade LLM features across different functions.

"The right prompt validation tool bridges creativity and technology, enabling teams to scale AI workflows efficiently and effectively."