Top Tools for Automated Model Benchmarking

Explore essential tools for automated model benchmarking, enhancing AI model evaluation, collaboration, and adaptability in diverse environments.

Automated model benchmarking is essential for evaluating and comparing AI models like large language models (LLMs). It ensures consistent performance testing, identifies weaknesses, and guides improvements. This article reviews seven tools designed for benchmarking AI systems, each with unique features tailored to different needs:

- Latitude: Open-source, focuses on collaborative workflows for LLM evaluation.

- AgentBench: Evaluates LLMs in dynamic, agent-based environments.

- Mosaic AI Evaluation Suite: A commercial tool for enterprise-level benchmarking with customizable scoring and integration.

- Weights & Biases (W&B): Tracks experiments and metrics for LLMs, offering detailed visualization and automated workflows.

- DagsHub: Combines version control with ML-specific tools for tracking experiments and managing datasets.

- ToolFuzz: Open-source framework targeting robustness through edge-case testing.

- BenchmarkQED: Developed by Microsoft, benchmarks Retrieval-Augmented Generation (RAG) systems with automation.

Each tool caters to specific use cases, from enterprise-scale solutions to open-source options for research and development. Below, we explore their features, strengths, and ideal applications.

Key Criteria for Automated Model Benchmarking Tools

When choosing automated benchmarking tools for AI models, it's important to focus on evaluation criteria that go beyond simple accuracy metrics. Modern AI systems produce outputs that are far more nuanced, requiring assessment methods that capture this complexity.

Accuracy is still a critical metric, but traditional measures like BLEU and ROUGE often fall short. They fail to account for aspects like semantic accuracy, creative flexibility, and domain-specific requirements - factors that are crucial when evaluating large language models (LLMs). Effective benchmarking tools should aim to measure the actual quality of AI outputs, not just their surface-level resemblance to expected results.
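To make the limitation concrete, here is a minimal sketch of a token-overlap score. The `unigram_f1` function is a simplified stand-in for ROUGE-1, not the official metric, and the example sentences are invented for illustration. It shows how a correct paraphrase can score low while a response that reverses the meaning scores high.

```python
from collections import Counter


def unigram_f1(candidate: str, reference: str) -> float:
    """Token-overlap F1: a simplified stand-in for ROUGE-1, not the official metric."""
    cand = Counter(candidate.lower().split())
    ref = Counter(reference.lower().split())
    # Multiset intersection counts each shared token at most as often as it appears in both.
    overlap = sum((cand & ref).values())
    if overlap == 0:
        return 0.0
    precision = overlap / sum(cand.values())
    recall = overlap / sum(ref.values())
    return 2 * precision * recall / (precision + recall)


reference = "the patient should take the medication twice daily"
paraphrase = "take this medicine two times a day"  # same meaning, different words
wrong_but_similar = "the patient should not take the medication twice daily"  # opposite meaning

print(f"paraphrase:        {unigram_f1(paraphrase, reference):.2f}")
print(f"wrong but similar: {unigram_f1(wrong_but_similar, reference):.2f}")
```

The surface score ranks the harmful answer far above the faithful one, which is the gap that semantic or judge-based evaluation is meant to close.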

A major hurdle with benchmarking is that static metrics lose relevance over time. As AI models evolve from initial prototypes to fully developed applications, pre-designed benchmarks often become outdated. This happens for three reasons: the benchmark data may have leaked into the model's training data, the benchmark may be too general for specialized use cases, or it may not cover rare, application-specific scenarios.

To address these limitations, adaptive evaluation methods have gained traction. These methods are essential for assessing the open-ended and diverse outputs generated by LLMs. Static metrics simply can't capture the full range of possibilities. By using custom methodologies tailored to specific applications, adaptive benchmarking allows for a more comprehensive evaluation. It also tracks progress across multiple dimensions, helping to minimize the risk of performance regressions in real-world production environments. This multi-faceted approach ensures that quality assessment keeps pace with the growing complexity of AI systems.
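Tracking several dimensions at once can be as simple as comparing a candidate model's scores against a stored baseline. The sketch below is illustrative only: the metric names, scores, and the 0.02 tolerance are assumptions, not values from any particular tool.

```python
def find_regressions(
    baseline: dict[str, float],
    candidate: dict[str, float],
    tolerance: float = 0.02,
) -> dict[str, float]:
    """Return metrics where the candidate dropped more than `tolerance` below the baseline."""
    regressions = {}
    for name, base_score in baseline.items():
        new_score = candidate.get(name)
        if new_score is None:
            continue  # metric not measured for the candidate; skipped in this sketch
        drop = base_score - new_score
        if drop > tolerance:
            regressions[name] = round(drop, 4)
    return regressions


# Hypothetical scores on three evaluation dimensions.
baseline = {"faithfulness": 0.91, "helpfulness": 0.84, "format_compliance": 0.99}
candidate = {"faithfulness": 0.93, "helpfulness": 0.79, "format_compliance": 0.98}

print(find_regressions(baseline, candidate))
```

A check like this can run in CI so that an improvement on one dimension does not hide a drop on another.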

1. Latitude

Latitude is an open-source platform designed for AI and prompt engineering. It bridges the gap between domain experts and engineers, enabling them to collaborate on developing and maintaining production-ready LLM features. This makes it a solid choice for automating model benchmarking workflows. Let’s take a closer look at what Latitude brings to the table.

Customizable Evaluation Processes

Latitude’s open-source framework allows users to create evaluation processes tailored to their specific needs. This means you can design assessments that focus on the criteria most relevant to your application, ensuring that model performance is measured in a way that aligns with real-world requirements.

Collaboration and Community Support

One of Latitude’s standout features is its focus on collaboration. Domain experts can work hand-in-hand with engineers to set evaluation criteria without needing extensive technical knowledge. This practical, results-driven teamwork makes customization more accessible. Latitude also provides detailed documentation and benefits from a vibrant community. Through its GitHub repository and Slack channels, users can exchange ideas, share experiences, and refine their workflows, creating a dynamic environment for continuous improvement.

Seamless Integration and Open-Source Benefits

Latitude integrates easily into existing AI workflows, offering teams the flexibility to adapt the platform as their benchmarking needs evolve. Its open-source nature ensures transparency, giving teams the ability to modify and control the platform to meet their unique requirements. Additionally, the active community contributions help expand and improve its features, making it a reliable and adaptable solution over time.

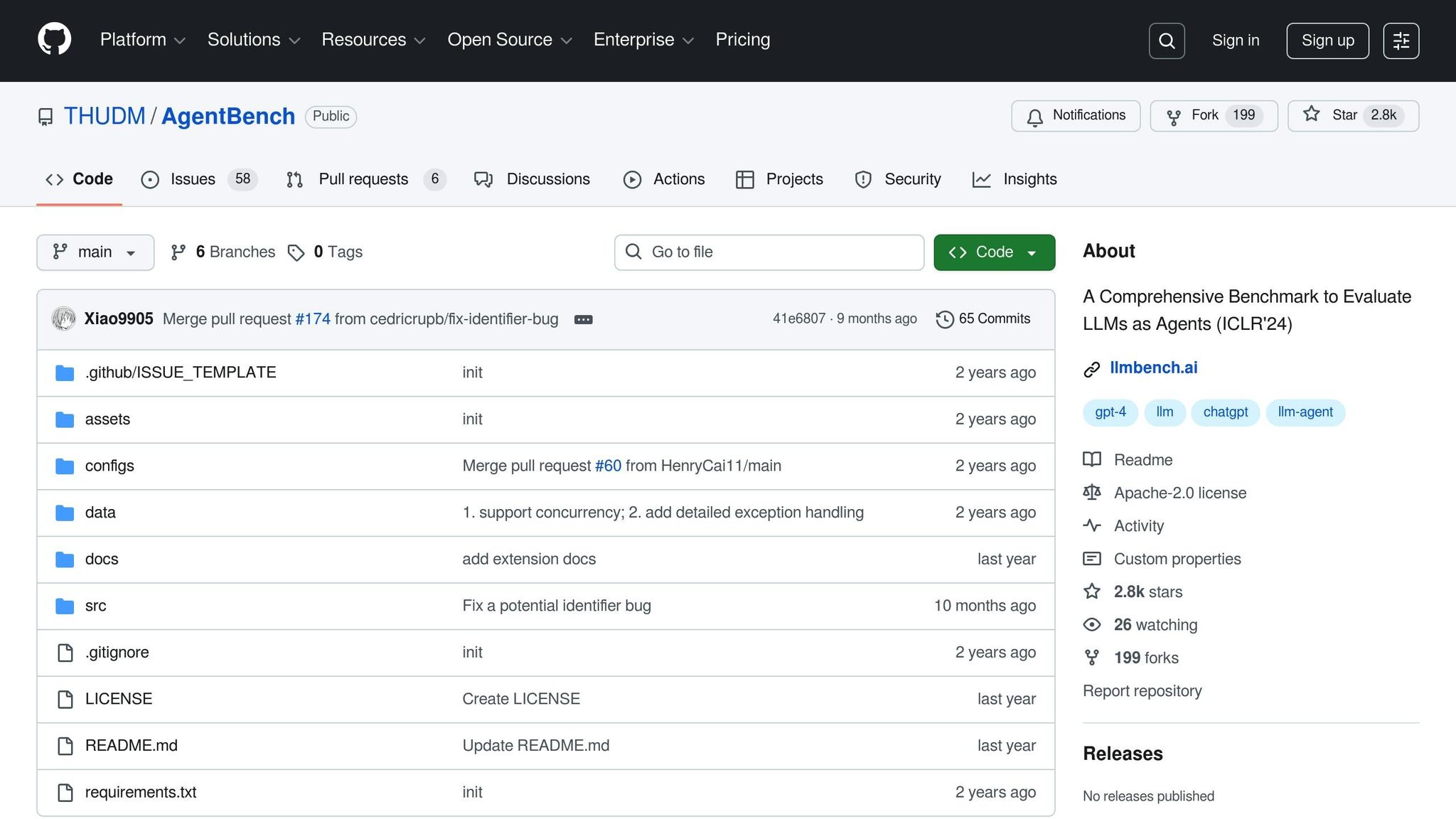

2. AgentBench

AgentBench is a benchmarking tool designed to evaluate large language models (LLMs) as agents in interactive environments. Unlike traditional benchmarks that focus on static evaluations, AgentBench tests how well models handle dynamic, decision-making scenarios.

A Flexible Approach to Benchmarking

AgentBench stands out for its flexibility, offering eight distinct environments to assess various aspects of AI agent performance. Five of these were custom-built - Operating System, Database, Knowledge Graph, Digital Card Game, and Lateral Thinking Puzzles - while three were adapted from existing datasets: House-Holding, Web Shopping, and Web Browsing. This diverse setup allows teams to gauge a model's performance on tasks that closely resemble real-world challenges. Beyond just measuring accuracy, AgentBench evaluates reasoning and decision-making skills, highlighting common weaknesses like poor long-term reasoning or difficulty following instructions. This comprehensive approach enables teams to create more tailored and automated workflows.
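The difference between static and interactive evaluation is easiest to see in a multi-turn loop. The following sketch is not AgentBench code: `CounterEnv`, `run_episode`, and `always_inc` are hypothetical names for a toy environment, and the fixed policy stands in for an LLM call.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class CounterEnv:
    """Toy environment (hypothetical): reach `target` using 'inc' / 'dec' actions."""
    target: int
    state: int = 0
    max_turns: int = 10
    history: list[str] = field(default_factory=list)

    def step(self, action: str) -> tuple[str, bool]:
        """Apply an action and return (observation, done)."""
        self.history.append(action)
        if action == "inc":
            self.state += 1
        elif action == "dec":
            self.state -= 1
        # Any other action is treated as an invalid instruction and changes nothing.
        done = self.state == self.target or len(self.history) >= self.max_turns
        return f"state={self.state}", done


def run_episode(env: CounterEnv, policy: Callable[[str], str]) -> dict:
    """Run one multi-turn episode and record success and the number of turns used."""
    observation, done = f"state={env.state}", env.state == env.target
    while not done:
        observation, done = env.step(policy(observation))
    return {"success": env.state == env.target, "turns": len(env.history)}


def always_inc(observation: str) -> str:
    """Stand-in for an LLM call: a fixed policy that ignores the observation."""
    return "inc"


print(run_episode(CounterEnv(target=3), always_inc))   # reachable by incrementing
print(run_episode(CounterEnv(target=-2), always_inc))  # policy never adapts, so it times out
```

The second episode fails because the policy ignores feedback from the environment, a small-scale version of the long-horizon reasoning failures that interactive benchmarks are designed to expose.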

Streamlined Automation and Customization

To simplify the evaluation process, AgentBench provides an API and Docker toolkit that automates benchmarking workflows, eliminating the need for complex manual setups.

"To facilitate the assessment of LLM-as-Agent, we release an integrated toolkit to handily customize the AgentBench evaluation to any LLM based on the philosophy of 'API & Docker' interaction. This toolkit, along with the associated datasets and environments, are made publicly available for the broader research community." – Xiao Liu et al.

The toolkit supports a wide range of models, from commercial API-based systems to open-source alternatives, offering flexibility for different use cases. Teams can customize evaluations to suit their specific needs while maintaining consistency across various model types.

Fully Open-Source and Community-Driven

AgentBench is open-source and available under the Apache-2.0 license through the THUDM GitHub organization, where it had about 2.8k stars and 199 forks at the time of writing. The package includes datasets, environments, and evaluation tools, all accessible to the research community.

In August 2023, the AgentBench paper, "AgentBench: Evaluating LLMs as Agents," by Xiao Liu et al., was submitted to arXiv and later published in ICLR 2024. The authors made the entire framework publicly available on GitHub, including development and test datasets, enabling researchers to benchmark LLMs across all eight environments.

"AgentBench is the first benchmark designed to evaluate LLM-as-Agent across a diverse spectrum of different environments." – THUDM/AgentBench GitHub Repository

The open-source nature of AgentBench promotes transparency and allows users to adapt the framework to meet their specific goals. This makes it a valuable tool for both academic researchers and commercial teams, setting a new standard for automated benchmarking in AI development.

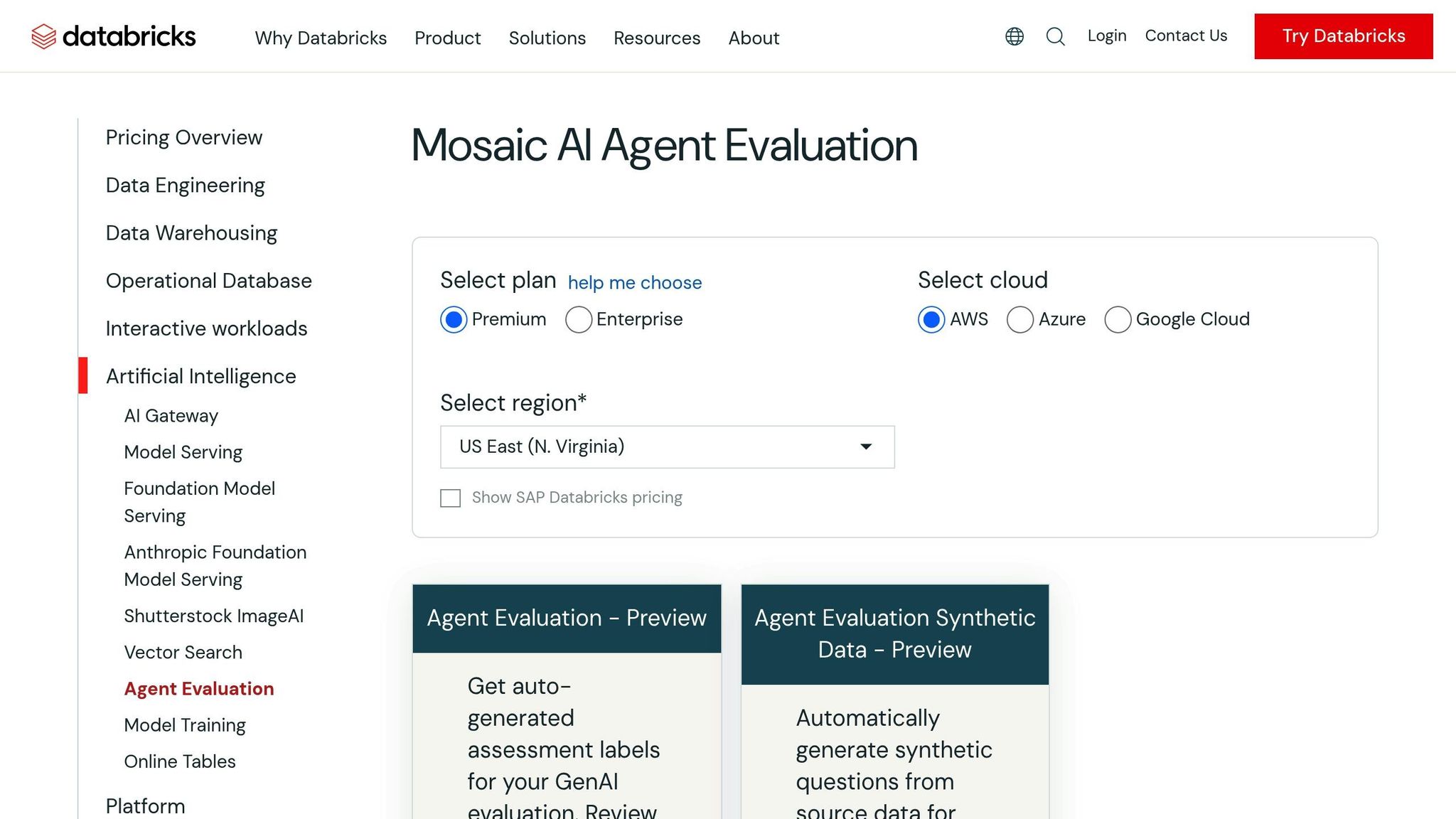

3. Mosaic AI Evaluation Suite

The Mosaic AI Evaluation Suite, part of Databricks' commercial platform, is designed to benchmark Retrieval-Augmented Generation (RAG) applications and large language model (LLM) outputs using its Agent Evaluation framework.

Flexible Benchmarking Options

The suite offers customizable scoring mechanisms, allowing teams to define evaluation criteria through rule-based checks, LLM judges, or even human feedback.

What makes this tool stand out is its accessibility. Domain experts can easily assess and label data without needing technical skills in managing spreadsheets or creating custom tools. Additionally, the AI-assisted evaluation features, combined with an intuitive interface, make it easier to refine benchmarking processes over time.

This adaptability ensures that organizations can tailor workflows to align with their unique benchmarking needs.
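The three scoring sources mentioned above (rule-based checks, LLM judges, and human feedback) can be combined in a single verdict. This is a generic sketch, not the Databricks API: every function name is hypothetical, `stub_judge` is a crude keyword-overlap placeholder for a real LLM judge, and the 0.5 threshold is an assumption.

```python
import re
from typing import Callable, Optional

EMAIL_PATTERN = re.compile(r"[\w.+-]+@[\w-]+\.[\w.]+")


def rule_no_email(response: str) -> bool:
    """Rule-based check: pass only if the response contains no email address."""
    return EMAIL_PATTERN.search(response) is None


def stub_judge(question: str, response: str) -> float:
    """Placeholder for an LLM judge: fraction of question words found in the response."""
    q_words = set(question.lower().split())
    r_words = set(response.lower().split())
    return len(q_words & r_words) / max(len(q_words), 1)


def evaluate(
    question: str,
    response: str,
    human_label: Optional[bool] = None,
    judge: Callable[[str, str], float] = stub_judge,
    threshold: float = 0.5,
) -> dict:
    """Combine rule, judge, and optional human feedback into one result."""
    rule_pass = rule_no_email(response)
    judge_score = judge(question, response)
    automated = rule_pass and judge_score >= threshold
    # In this sketch, a human label overrides the automated verdict when present.
    passed = automated if human_label is None else human_label
    return {"rule_pass": rule_pass, "judge_score": round(judge_score, 2), "passed": passed}


question = "password reset steps"
print(evaluate(question, "open settings then follow the password reset steps"))
print(evaluate(question, "email admin@example.com for password reset steps"))
print(evaluate(question, "see the help page", human_label=True))
```

Letting the human label take precedence is one possible design choice; a team could equally log disagreements between the judge and reviewers to calibrate the judge over time.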

Seamless Collaboration and Integration

The Agent Evaluation Review App simplifies teamwork by replacing manual spreadsheets with an integrated workspace. It connects directly to the Databricks Data Intelligence Platform and Managed MLflow, enabling teams to track performance and provide feedback in real time.

This integration eliminates the need to juggle multiple tools, allowing teams to compare agents, monitor performance trends, and troubleshoot issues efficiently.

Automated Workflows and Customization

The suite supports the creation of custom evaluation criteria, streamlining feedback collection to help teams speed up their development cycles. Its automated workflows allow for quick redeployment of improvements without requiring changes to the codebase.

Pricing and Availability

The Mosaic AI Evaluation Suite operates on a pay-as-you-go model. Pricing includes $0.15 per million input tokens, $0.60 per million output tokens for Agent Evaluation, and $0.35 per question for synthetic data generation. These charges are billed as DBUs under the Serverless Real-time Inference SKU.

Because billing is usage-based, evaluation costs scale with the volume of tokens processed and the number of synthetic questions generated rather than with a fixed license fee.
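As a worked example of the rates quoted above, the sketch below estimates the cost of one evaluation run. The rates come from this article; the workload figures (20 million input tokens, 5 million output tokens, 200 synthetic questions) are made up for illustration, and actual billing in DBUs may differ.

```python
# Rates as quoted in this article (USD); check current pricing before budgeting.
INPUT_USD_PER_MILLION_TOKENS = 0.15
OUTPUT_USD_PER_MILLION_TOKENS = 0.60
SYNTHETIC_USD_PER_QUESTION = 0.35


def estimate_cost(input_tokens: int, output_tokens: int, synthetic_questions: int = 0) -> float:
    """Estimate the cost in USD of one evaluation run, rounded to cents."""
    input_cost = input_tokens / 1_000_000 * INPUT_USD_PER_MILLION_TOKENS
    output_cost = output_tokens / 1_000_000 * OUTPUT_USD_PER_MILLION_TOKENS
    synthetic_cost = synthetic_questions * SYNTHETIC_USD_PER_QUESTION
    return round(input_cost + output_cost + synthetic_cost, 2)


# Hypothetical workload: 20M input tokens, 5M output tokens, 200 synthetic questions.
# 20 * 0.15 + 5 * 0.60 + 200 * 0.35 = 3.00 + 3.00 + 70.00
print(estimate_cost(20_000_000, 5_000_000, synthetic_questions=200))
```

In this example, synthetic data generation dominates the total, so the number of generated questions is the main lever on cost.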

4. Weights & Biases (W&B)

Weights & Biases (W&B) is an MLOps platform designed to streamline the entire model lifecycle - from training and fine-tuning to deployment. It stands out with tools tailored specifically for benchmarking large language models (LLMs), making it a go-to solution for teams focused on detailed performance tracking.

Flexible Benchmarking Made Simple

W&B excels in tracking experiments and ensuring reproducibility. It logs hyperparameters, metrics, and model configurations in real time, enabling teams to make consistent comparisons across different model iterations. This capability is especially useful for evaluating multiple configurations or fine-tuning processes.

For LLM-specific needs, the W&B Weave toolkit offers advanced tracking and evaluation features. It’s designed to handle the unique challenges of working with large-scale models. Whether teams are training, fine-tuning, or deploying foundation models, W&B provides the tools to meet diverse benchmarking requirements.

Another standout feature is Artifacts, which allows for versioning datasets and models while maintaining a clear lineage. This ensures consistency and reproducibility throughout the benchmarking process. W&B also includes its Tables feature, enabling teams to analyze tabular data and uncover performance trends easily. Meanwhile, interactive dashboards display metrics like loss and accuracy, helping teams quickly identify and address issues during training and validation.

Seamless Collaboration and Integration

W&B integrates effortlessly with popular frameworks like TensorFlow, PyTorch, and Keras through its flexible API, making it adaptable to a variety of workflows. This integration simplifies experimentation and supports ongoing benchmarking efforts.

The platform’s Reports feature enhances collaboration by allowing users to document findings, embed visualizations, and share updates with team members. Experiment tracking further strengthens teamwork by maintaining a shared, detailed record of all experiments.

Automating Benchmarking Workflows

W&B Sweeps automates tasks like hyperparameter tuning and model optimization, reducing manual effort during evaluation. The platform logs metrics and visualizes training runs, enabling teams to focus on improving their models without worrying about complex setups. From training to deployment, W&B supports the entire model lifecycle, making automated benchmarking more efficient.
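To show what a sweep automates, the sketch below defines a configuration in the general shape W&B uses for sweeps (`method`, `metric`, `parameters`) and then expands it locally. The expansion and the `fake_train` objective are stand-ins written for this article; no W&B code is called, and the parameter names and values are assumptions.

```python
from itertools import product

# Shaped like a W&B sweep configuration; the specific parameters are illustrative.
sweep_config = {
    "method": "grid",
    "metric": {"name": "val_loss", "goal": "minimize"},
    "parameters": {
        "learning_rate": {"values": [1e-4, 3e-4]},
        "batch_size": {"values": [16, 32]},
    },
}


def expand_grid(config: dict) -> list[dict]:
    """Enumerate every parameter combination a grid search would try."""
    names = list(config["parameters"])
    value_lists = [config["parameters"][name]["values"] for name in names]
    return [dict(zip(names, combo)) for combo in product(*value_lists)]


def fake_train(params: dict) -> float:
    """Placeholder objective standing in for a real training run."""
    return abs(params["learning_rate"] - 3e-4) * 1000 + params["batch_size"] / 100


runs = [{"params": p, "val_loss": fake_train(p)} for p in expand_grid(sweep_config)]
best = min(runs, key=lambda run: run["val_loss"])

print(len(runs), "runs; best:", best["params"])
```

In a real setup, the sweep controller would schedule these runs and log each result, which removes the manual bookkeeping shown here.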

For custom workflows, W&B Core provides versatile tools for tracking and visualizing data, as well as sharing results. These features can be tailored to meet specific benchmarking needs, allowing for faster iterations and more precise evaluations. With these capabilities, W&B ensures that teams can efficiently manage and optimize their models at every stage.

5. DagsHub

DagsHub is a platform that combines the collaborative features of GitHub with tools specifically designed for machine learning projects. It serves as a central hub where ML teams can track experiments, manage datasets, and benchmark models - all while using familiar version control workflows. This setup makes DagsHub a strong choice for teams looking to streamline their benchmarking efforts.

Flexible Benchmarking Tools

One of DagsHub's standout features is its ability to simplify ML benchmarking by integrating datasets, models, and experiments directly into version control. The platform tracks performance metrics across multiple runs, making it easy to compare results from different algorithms or hyperparameter settings. By logging everything from training metrics to model artifacts, and offering seamless integration with MLflow, DagsHub allows teams to visualize trends and ensure consistency in their workflows.

Managing large datasets is made efficient through Git LFS and DVC integration, enabling users to version control massive training data alongside their code. This ensures that benchmarking results remain reproducible, even as data evolves. Additionally, DagsHub’s data lineage graphs provide a clear view of how datasets and preprocessing steps contribute to specific model outcomes, helping teams better understand their results.
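The reproducibility benefit comes from tying each result to an exact data version. The sketch below illustrates the general idea of content-based versioning with a hash; it does not call DVC or DagsHub, and `dataset_fingerprint` and `record_run` are hypothetical helpers.

```python
import hashlib
import json


def dataset_fingerprint(rows: list[dict]) -> str:
    """Derive a short, stable identifier from the dataset's content."""
    # Canonical JSON (sorted keys, fixed separators) so key order does not change the hash.
    canonical = json.dumps(rows, sort_keys=True, separators=(",", ":")).encode("utf-8")
    return hashlib.sha256(canonical).hexdigest()[:12]


def record_run(rows: list[dict], metrics: dict) -> dict:
    """Store metrics together with the fingerprint of the data that produced them."""
    return {"data_version": dataset_fingerprint(rows), "metrics": metrics}


rows_v1 = [{"q": "2+2", "a": "4"}]
rows_v2 = rows_v1 + [{"q": "3+3", "a": "6"}]

run_a = record_run(rows_v1, {"accuracy": 1.0})
run_b = record_run(rows_v2, {"accuracy": 0.5})

# Different fingerprints signal that the two scores are not directly comparable.
print(run_a["data_version"] != run_b["data_version"])
```

When two runs share a fingerprint, a change in score can be attributed to the model or configuration rather than to the data.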

Collaboration and Workflow Integration

DagsHub enhances teamwork by allowing users to manage model updates using familiar development workflows. Team members can create pull requests for model changes, review updates to training pipelines, and discuss results - all within the platform. This centralized approach ensures that benchmarking insights are shared across the team and not siloed in individual workspaces.

The platform integrates smoothly with popular ML frameworks like PyTorch, TensorFlow, and scikit-learn, making it easy to incorporate into existing workflows. It also connects with major cloud providers such as AWS and Google Cloud, enabling teams to scale their benchmarking processes across various environments. Dashboards and notifications provide team leads with quick access to experiment updates, helping them allocate resources more effectively.

Open-Source and Commercial Options

DagsHub offers a flexible range of deployment options to suit different needs. Its freemium model is ideal for individual researchers and small teams, providing unlimited public repositories, essential experiment tracking, and limited support for private repositories - all at no cost. This makes it easy for teams to start automating their benchmarking workflows without any upfront investment.

For larger organizations, enterprise plans include advanced security features, expanded support for private repositories, and dedicated customer assistance. On-premises deployment is also available for teams with strict data governance requirements. Furthermore, DagsHub’s open-source integrations with tools like DVC and MLflow ensure that experiment data and model artifacts remain portable, allowing organizations to adapt their workflows or integrate with other tools as their needs grow.

6. ToolFuzz

ToolFuzz steps in as a key player in ensuring robustness by targeting edge-case vulnerabilities. It’s an open-source fuzzing framework designed to systematically create diverse test cases. These test cases help uncover vulnerabilities and performance issues in machine learning models when they encounter unexpected or malformed inputs.
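The core fuzzing idea can be sketched in a few lines: feed a function unexpected inputs and report any failure it does not handle deliberately. This is a generic illustration, not ToolFuzz's API; `parse_age`, `EDGE_CASES`, and `fuzz` are hypothetical names.

```python
def parse_age(value) -> int:
    """Function under test (hypothetical): parse a non-negative age."""
    age = int(value)
    if age < 0:
        raise ValueError("age must be non-negative")
    return age


# Hand-picked edge cases; a real fuzzer would generate many more automatically.
EDGE_CASES = ["", "0", "-1", "1e3", "9" * 50, None, "12abc", [1, 2]]


def fuzz(target, cases, expected_errors=(ValueError,)):
    """Run `target` on each case and collect exceptions outside `expected_errors`."""
    unexpected = []
    for case in cases:
        try:
            target(case)
        except expected_errors:
            pass  # a documented, handled failure mode
        except Exception as exc:
            unexpected.append((case, type(exc).__name__))
    return unexpected


for case, error in fuzz(parse_age, EDGE_CASES):
    print(f"unhandled {error} for input {case!r}")
```

Here the function handles malformed strings but leaks a `TypeError` for non-string inputs, the kind of gap that edge-case testing is meant to surface before production.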

Automation and Customization

ToolFuzz is built with flexibility in mind, allowing developers to customize the testing process to meet specific benchmarking needs. Its open-source nature means it’s easy to modify and extend for different evaluation scenarios. Plus, its permissive licensing model ensures that adapting the tool to unique requirements is a straightforward process.

Open-Source Availability

Released under the MIT license, ToolFuzz offers broad usability for both academic and commercial purposes.

"The MIT License is a permissive open-source license that grants users significant freedom to use, modify, distribute, and sublicense the software without the requirement to disclose source code."

This licensing approach ensures developers can freely use, modify, and share the software with minimal restrictions. Teams can tweak ToolFuzz to fit their specific needs, while contributions from the community continue to improve its functionality and expand its potential.

7. BenchmarkQED

BenchmarkQED is a toolkit developed by Microsoft Research to evaluate Retrieval-Augmented Generation (RAG) systems on a large scale. This open-source suite combines automation and flexibility to tackle the challenges of assessing RAG systems effectively.

Automation and Custom Benchmarking Workflows

BenchmarkQED includes three automated engines designed to streamline the evaluation process:

- AutoQ: This engine generates synthetic queries across four categories, removing the need for manual dataset creation.

- AutoE: By leveraging large language models as judges, AutoE scores outputs based on factors like relevance, diversity, comprehensiveness, and empowerment. It can also integrate ground truth data for creating custom evaluation metrics.

- AutoD: This engine automates data sampling by defining topic clusters and sample counts, ensuring consistent datasets that meet specific requirements.

Together, these engines create a seamless workflow, making it easier to benchmark RAG systems with varying configurations.
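The sampling step described for AutoD (a fixed number of topic clusters and a fixed number of samples per cluster) can be sketched as follows. This is not BenchmarkQED code: `sample_by_cluster` is a hypothetical helper, the topics are assumed to be assigned already, and the rule of preferring the largest topics is an assumption made for this example.

```python
import random
from collections import defaultdict


def sample_by_cluster(
    docs: list[tuple[str, str]],
    num_clusters: int,
    per_cluster: int,
    seed: int = 0,
) -> dict[str, list[str]]:
    """Pick `per_cluster` document ids from each of `num_clusters` topics.

    `docs` is a list of (doc_id, topic) pairs.
    """
    by_topic = defaultdict(list)
    for doc_id, topic in docs:
        by_topic[topic].append(doc_id)

    # Keep only topics large enough to supply the requested sample, largest first.
    eligible = sorted(
        (t for t, ids in by_topic.items() if len(ids) >= per_cluster),
        key=lambda t: (-len(by_topic[t]), t),
    )
    if len(eligible) < num_clusters:
        raise ValueError("not enough topics with the requested number of documents")

    rng = random.Random(seed)  # fixed seed keeps the sample reproducible
    return {t: sorted(rng.sample(by_topic[t], per_cluster)) for t in eligible[:num_clusters]}


docs = [
    ("d1", "health"), ("d2", "health"), ("d3", "health"), ("d4", "health"),
    ("d5", "finance"), ("d6", "finance"), ("d7", "finance"),
    ("d8", "sports"),
]

sample = sample_by_cluster(docs, num_clusters=2, per_cluster=2)
print(sample)
```

Fixing the cluster count, the sample size, and the seed means two teams evaluating different RAG systems can work from datasets with the same topical shape.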

A Modular and Flexible Toolkit

BenchmarkQED’s modular structure allows teams to automate the entire benchmarking pipeline - from generating queries to evaluating results - or use individual components as needed. This makes it particularly helpful when working with diverse datasets or applying specific evaluation criteria across different models. The flexibility of the toolkit ensures rigorous and reproducible testing, regardless of the complexity of the RAG system being assessed.

Open-Source and Community-Driven

BenchmarkQED is freely available under the MIT license on GitHub, allowing teams to adapt and expand the toolkit to meet their unique benchmarking needs. Microsoft Research describes the toolkit as:

"BenchmarkQED, a new suite of tools that automates RAG benchmarking at scale, available on GitHub."

Comparison Table

When deciding on the right tool for automated model benchmarking, having a clear understanding of how different platforms compare can make all the difference. The table below highlights each tool’s current status, key strengths, limitations, and ideal use cases:

| Tool | Status | Primary Strengths | Notable Constraints | Best For |
|---|---|---|---|---|
| Latitude | Open-source | Encourages collaborative prompt engineering, offers production-grade LLM features, and bridges domain experts with engineers | Primarily focused on LLM benchmarking | Teams developing LLM applications that require cross-functional collaboration |
| AgentBench | Open-source | Provides multi-environment agent evaluation and standardized benchmarks | Geared toward agent-based systems | Researchers and developers working with AI agents |
| Mosaic AI Evaluation Suite | Commercial | Scales for enterprise needs, integrates MLOps workflows, and delivers advanced analytics | Higher costs and potential vendor lock-in | Large enterprises managing complex ML pipelines |
| Weights & Biases (W&B) | Commercial (free tier available) | Tracks real-time metrics, offers experiment comparison dashboards, and integrates seamlessly with popular frameworks | Relies on cloud infrastructure and may introduce performance overhead | Teams needing detailed experiment tracking and visualization |
| DagsHub | Commercial (integrates open-source tools) | Supports Git-like collaboration, automatic experiment logging, and robust data versioning with DVC | Has a steeper learning curve for DVC and command-line tools | Teams focused on reproducibility and effective version control |
| ToolFuzz | Open-source | Specializes in automated fuzzing for robustness and security evaluations | Limited to security and robustness testing | Security researchers and scenarios requiring robustness testing |
| BenchmarkQED | Open-source (MIT license) | Tailored for evaluating retrieval-augmented generation (RAG) systems | Exclusively focused on RAG systems | Teams building and assessing retrieval-augmented generation systems |

This table provides an overview of each tool, but let’s dig into how they compare in terms of automation, collaboration, integration, and cost.

Automation, Collaboration, Integration, and Cost

Automation is a standout feature for many of these tools. Weights & Biases automates hyperparameter tuning through Sweeps and logs metrics in real time. AgentBench automates agent evaluation through its API and Docker toolkit, and BenchmarkQED automates query generation, data sampling, and scoring for RAG systems.

When it comes to collaboration, DagsHub relies on Git-style workflows such as pull requests, while Weights & Biases shares experiment results through Reports and dashboards. Latitude, on the other hand, is designed specifically for collaboration in LLM development, bridging the gap between engineers and domain specialists.

Integration options are another critical factor. Weights & Biases supports popular frameworks like TensorFlow, PyTorch, and Keras, making it a natural fit for many existing workflows. DagsHub, by contrast, integrates open-source tools like Git, DVC, and MLflow to create a unified environment. The right choice here depends on your existing tech stack and workflow preferences.

Cost structures vary widely across these platforms. Open-source tools like Latitude, AgentBench, ToolFuzz, and BenchmarkQED provide full functionality without licensing fees. Among the commercial platforms, Weights & Biases offers a free tier alongside paid features, while the Mosaic AI Evaluation Suite bills on a pay-as-you-go basis. DagsHub takes a hybrid approach, combining commercial hosting with open-source tool integration.

Carefully evaluate these factors to choose the tool that best fits your project’s needs and budget. Each platform offers distinct advantages, so aligning their features with your specific requirements will ensure you make the most informed decision.

Conclusion

From the comparisons above, it’s clear that the world of AI development has outgrown the limitations of manual benchmarking. Today’s AI workflows demand speed and precision, which is why automated benchmarking tools have become a must-have for teams aiming to build reliable, high-performing AI systems.

Selecting the right tool boils down to aligning it with your team’s specific workflows. For instance, Latitude is ideal for fostering collaboration in LLM development. On the other hand, BenchmarkQED is tailored for teams focused on retrieval-augmented generation systems, while enterprise solutions like the Mosaic AI Evaluation Suite offer the scalability required by larger organizations.

The key to effective benchmarking lies in three pillars: scalability, flexibility, and collaboration. Scalability supports growth, flexibility adapts to evolving models, and collaboration bridges the gap between technical and domain expertise.

Investing in automated benchmarking infrastructure often leads to major improvements in AI development cycles. These tools remove the inefficiencies of manual evaluations, deliver consistent and reproducible results, and empower teams to make data-driven decisions. By automating repetitive testing, they also free up valuable engineering time for innovation.

Whether you’re a startup experimenting with your first LLM application or a large enterprise managing intricate ML pipelines, the right benchmarking tool can become the backbone of your AI strategy. It enables faster iterations, better models, and more confident deployments, making it a critical component for success in AI development.

FAQs

How do adaptive evaluation methods enhance benchmarking for large language models (LLMs) compared to traditional static metrics?

Adaptive evaluation methods bring a fresh approach to benchmarking large language models (LLMs) by creating dynamic, real-time test scenarios tailored to specific contexts. Unlike traditional static metrics that depend on fixed datasets, these adaptive techniques assess models across a wider variety of tasks, keeping evaluations relevant as new use cases emerge.

This approach offers a clearer understanding of a model's flexibility and reliability, providing a closer look at its performance in practical, ever-changing situations. By moving beyond the limitations of static benchmarks, adaptive methods reveal strengths and weaknesses that might otherwise go unnoticed, painting a more accurate picture of how LLMs handle diverse challenges.

What are the key advantages of using open-source tools like AgentBench and ToolFuzz for automating AI model benchmarking?

Open-source tools such as AgentBench and ToolFuzz play an important role in automating the benchmarking of AI models.

AgentBench is designed to test large language models (LLMs) by evaluating their performance as agents across eight interactive environments. It focuses on key aspects like reasoning, decision-making, and instruction following, showing where models struggle across a wide range of tasks.

Meanwhile, ToolFuzz helps identify problems in tool documentation and runtime behavior. This enables developers to spot and resolve errors more efficiently. By reducing manual work and enhancing reliability, these tools make the benchmarking process smoother and inspire greater trust in model performance.

What factors should organizations consider when choosing an automated tool for model benchmarking?

When selecting an automated benchmarking tool for your AI projects, it’s crucial to start by defining your specific objectives and needs. Think about factors such as how well the tool works with your current systems, whether it can scale to meet future demands, and how user-friendly it is for your team. Additionally, check if it can track critical metrics like performance, accuracy, and cost efficiency.

You’ll also want to evaluate how well the tool aligns with your particular use case. This includes considering input/output requirements and overall reliability. Matching the tool’s capabilities to your goals ensures it will streamline your AI development and benchmarking efforts effectively.