Top Tools for Contextual Prompt Optimization

Explore top tools for optimizing prompts in AI models to enhance accuracy, consistency, and efficiency in your workflows.

Want better results from AI models? Contextual prompt optimization is the key. With tools like LangChain, PromptPerfect, and LLMStudio, you can refine prompts to improve accuracy, consistency, and efficiency when working with large language models (LLMs). Here’s what you need to know:

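- Why it matters: Optimized prompts can improve accuracy, speed up workflows, and produce more coherent outputs. The CiC prompting technique covered below, for instance, has shown a 21% improvement in retrieval accuracy.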

- Top tools:

  - LangChain: Pre-made templates for flexible prompt engineering.

  - PromptPerfect: Automated testing and performance metrics.

  - LLMStudio: Visual interface for managing prompts and models.

  - Latitude: Collaboration-focused platform for teams.

  - Prompt Engine: Dual-engine system for complex workflows.

- Choosing the right tool: Consider your team’s needs - collaboration, budget, or technical flexibility.

Quick Comparison:

| Tool | Features | Best For | Pricing | Supported Models |
|---|---|---|---|---|
| Latitude | Collaboration, production-ready | Team collaboration | Free (Open-source) | Multiple LLMs |
| LangChain | Templates, few-shot learning | Flexible prompt engineering | Free/$39+/Custom | Custom connectors |
| LLMStudio | Centralized interface, storage | User-friendly prompt design | Free (Open-source) | OpenAI, Azure, AWS Bedrock |
| PromptPerfect | Automated testing, metrics | Accuracy-focused teams | Contact for pricing | Multiple LLMs |
| Prompt Engine | Dual engines, overflow management | Complex workflows | Free (Open-source) | Multiple LLMs |

Next steps: Explore these tools to simplify prompt optimization and get better results from AI models.

5 Leading Prompt Optimization Tools

Prompt optimization tools are evolving to keep up with the demands of LLM development. Here’s a look at five tools making an impact in this space. Most are open-source and free; LangChain adds paid tiers, and PromptPerfect's pricing is available on request.

Latitude

Latitude provides a platform designed for building production-ready LLM features. It offers a collaborative space where domain experts and engineers can work together to create, test, and refine prompts effectively.

LangChain

LangChain is a Python framework that simplifies prompt engineering for context-aware applications. It includes pre-built prompt templates that combine structured instructions with few-shot examples, so a template can be adapted to a specific task. It supports multiple LLMs and includes a free Developer tier, making it accessible to teams of all sizes.

PromptPerfect

PromptPerfect focuses on efficient prompt testing with a credit-based evaluation system. It allows teams to generate and refine prompts automatically, speeding up the optimization process. The platform also includes performance metrics to help teams measure and improve their prompts systematically.
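To make the idea of automated prompt testing concrete, here is a minimal Python sketch. It does not use PromptPerfect's API: `fake_model`, `score_prompt`, the test cases, and the exact-match metric are all hypothetical stand-ins chosen for illustration. The point is only the loop itself: run each prompt variant against the same cases and compare a score.

```python
from typing import Callable

# Hypothetical stand-in for an LLM call. It returns a clean answer only when
# the prompt explicitly asks for a one-word reply.
def fake_model(prompt: str) -> str:
    question = prompt.rsplit("Q:", 1)[-1].strip()
    answers = {"Capital of France?": "Paris", "Capital of Japan?": "Tokyo"}
    answer = answers.get(question, "unknown")
    if "one word" in prompt:
        return answer
    return f"The answer is probably {answer}, but it depends."

def score_prompt(template: str, cases: list[tuple[str, str]],
                 model: Callable[[str], str]) -> float:
    """Return the fraction of cases whose output exactly matches the target."""
    hits = 0
    for question, expected in cases:
        output = model(template.format(question=question))
        hits += output.strip() == expected
    return hits / len(cases)

CASES = [("Capital of France?", "Paris"), ("Capital of Japan?", "Tokyo")]
VARIANTS = {
    "baseline": "Q: {question}",
    "constrained": "Answer in one word.\nQ: {question}",
}

# Score every variant on the same cases and pick the highest-scoring one.
scores = {name: score_prompt(t, CASES, fake_model) for name, t in VARIANTS.items()}
best = max(scores, key=scores.get)
```

In a real setup, `fake_model` would be replaced by an actual model call, and exact match would likely give way to a metric suited to the task.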

LLMStudio by TensorOps

LLMStudio offers a user-friendly graphical interface for designing prompts, with features like:

- A centralized gateway for managing multiple LLMs

- Built-in tools for storing and versioning prompts

- A Python SDK and REST API for backend integration

- Compatibility with major LLM providers like OpenAI, Azure OpenAI, and AWS Bedrock
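The gateway idea in the list above can be sketched in a few lines of Python. This is not LLMStudio's SDK: the `Gateway` class and the provider names are assumptions made for illustration, and the registered callables are placeholders where real code would wrap each vendor's client.

```python
from typing import Callable

class Gateway:
    """Route completion requests to a registered provider by name."""

    def __init__(self) -> None:
        self._providers: dict[str, Callable[[str], str]] = {}

    def register(self, name: str, complete: Callable[[str], str]) -> None:
        self._providers[name] = complete

    def complete(self, provider: str, prompt: str) -> str:
        if provider not in self._providers:
            raise KeyError(f"unknown provider: {provider}")
        return self._providers[provider](prompt)

gateway = Gateway()
# Placeholder callables standing in for real provider clients.
gateway.register("provider_a", lambda p: f"[A] {p.upper()}")
gateway.register("provider_b", lambda p: f"[B] {p[::-1]}")

result_a = gateway.complete("provider_a", "hello")
result_b = gateway.complete("provider_b", "hello")
```

Because callers only ever see `gateway.complete`, switching providers becomes a one-argument change rather than a code rewrite.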

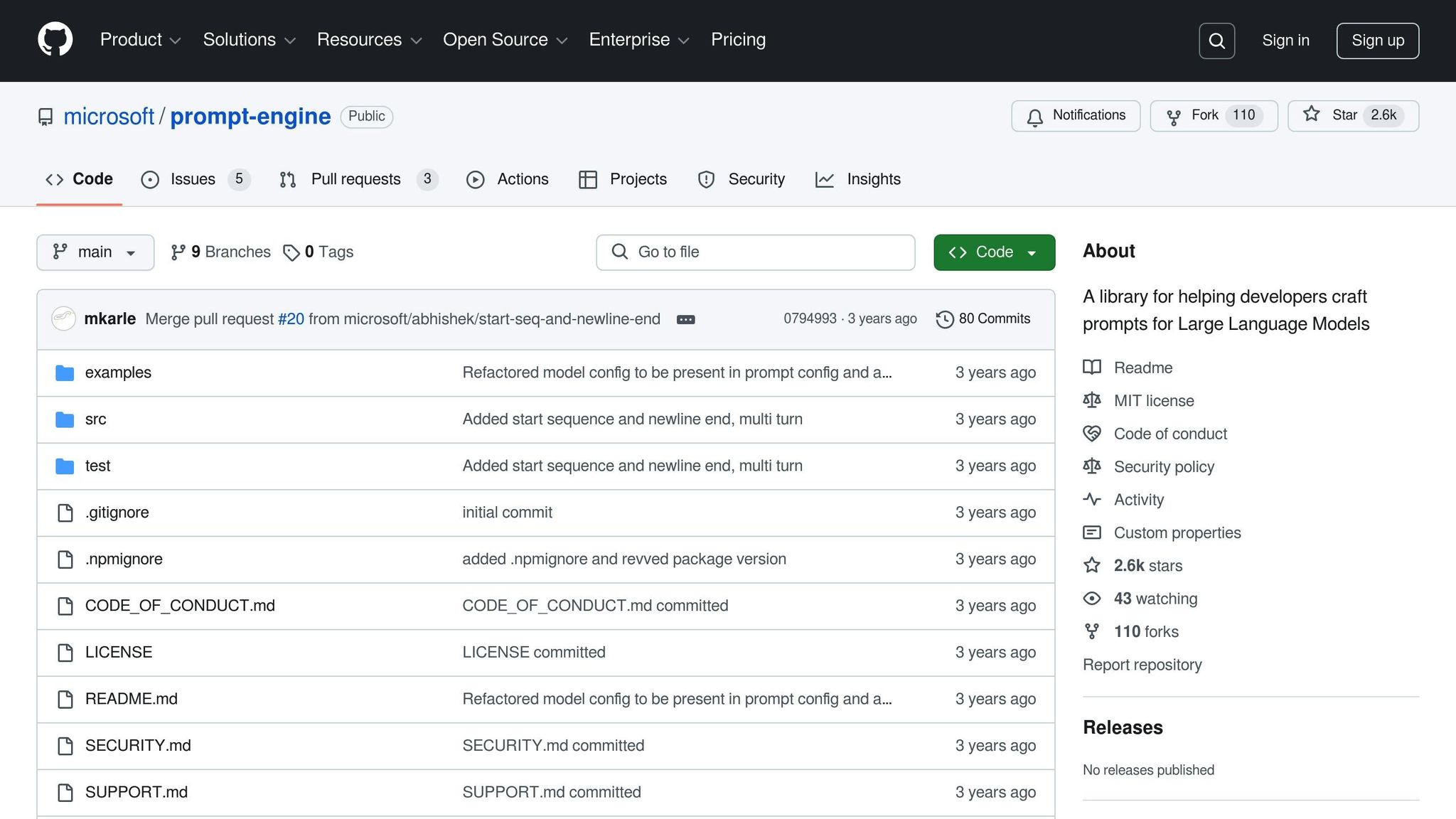

Prompt Engine

Prompt Engine is a developer-focused TypeScript framework built around two engines:

- A code engine for converting natural language into executable code

- A chat engine tailored for natural language interactions

It also provides:

- Tools to manage prompt overflow efficiently

- Support for multiple programming languages

Each of these tools brings unique features to the table, allowing teams to choose the one that best fits their workflow and prompt optimization needs.

Tool Features and Applications

Team Collaboration Tools

When it comes to prompt engineering, teamwork between engineers and domain experts is key. Latitude provides a shared platform designed specifically for refining LLM prompts, while LLMStudio offers a centralized interface that allows multiple team members to access and manage LLMs. LLMStudio's user-friendly design makes collaboration more seamless, helping teams stay organized and focused.

Context Optimization Methods

Once collaboration is in place, the next step is improving the content and structure of prompts. This is where tools like LangChain come into play. LangChain provides a structured approach to crafting prompts, including:

- Task-specific, well-defined instructions

- Few-shot examples to guide the model's responses

- Context-aware mechanisms to improve accuracy

These tools help ensure consistency while allowing for the flexibility needed to tackle different tasks. Plus, with a free Developer tier, LangChain makes it easy for teams to experiment and refine their prompts before committing to larger-scale projects.
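The three ingredients above (an instruction, few-shot examples, and context) can be assembled with a small template class. The sketch below is plain Python rather than LangChain's own API; `FewShotPrompt` and its `Input:`/`Output:` layout are hypothetical choices made to show the structure.

```python
from dataclasses import dataclass, field

@dataclass
class FewShotPrompt:
    instruction: str
    examples: list[tuple[str, str]] = field(default_factory=list)

    def render(self, context: str, query: str) -> str:
        """Join instruction, examples, context, and the new query into one prompt."""
        parts = [self.instruction.strip()]
        for example_input, example_output in self.examples:
            parts.append(f"Input: {example_input}\nOutput: {example_output}")
        parts.append(f"Context: {context.strip()}")
        # Leave the final output slot empty for the model to fill in.
        parts.append(f"Input: {query.strip()}\nOutput:")
        return "\n\n".join(parts)

prompt = FewShotPrompt(
    instruction="Classify the sentiment of the input as positive or negative.",
    examples=[("I love this product.", "positive"),
              ("The update broke everything.", "negative")],
)
text = prompt.render(context="Reviews of a mobile banking app.",
                     query="Login is fast and reliable.")
```

Keeping the instruction and examples on the template and passing context and query at render time is what lets one template serve many requests consistently.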

Prompt Organization Systems

Managing prompts effectively is just as important as creating them. Organized prompt libraries save time and effort by making successful prompts easy to access and reuse. LLMStudio supports this with features like:

| Feature | Purpose | Benefit |
|---|---|---|
| Prompt Storage | Centralizes prompt library | Simplifies access and reuse |
| Integration Options | Connects with other tools | Fits seamlessly into existing workflows |
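As a rough illustration of what a centralized, versioned prompt library involves, here is a minimal in-memory sketch. It is not LLMStudio's storage layer; `PromptStore` and its methods are hypothetical names, and a real system would persist versions rather than hold them in a dictionary.

```python
from typing import Optional

class PromptStore:
    """In-memory prompt library that keeps every saved version."""

    def __init__(self) -> None:
        # Maps a prompt name to its versions, oldest first.
        self._versions: dict[str, list[str]] = {}

    def save(self, name: str, text: str) -> int:
        """Append a new version and return its 1-based version number."""
        history = self._versions.setdefault(name, [])
        history.append(text)
        return len(history)

    def get(self, name: str, version: Optional[int] = None) -> str:
        """Return a specific version, or the latest when version is None."""
        history = self._versions[name]
        if version is None:
            return history[-1]
        if not 1 <= version <= len(history):
            raise IndexError(f"{name} has no version {version}")
        return history[version - 1]

store = PromptStore()
v1 = store.save("summarize", "Summarize the text.")
v2 = store.save("summarize", "Summarize the text in three bullet points.")
latest = store.get("summarize")
first = store.get("summarize", version=1)
```

Never overwriting a saved prompt means a team can always roll back to a version that was known to work.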

Prompt Engine takes organization a step further with its dual-engine setup. The code engine translates natural language into executable code, while the chat engine handles conversational tasks. It also includes a prompt overflow management system that automatically clears older interactions, keeping performance smooth and efficient.
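The overflow behavior described above can be sketched as a sliding window over the conversation. The example is in Python rather than Prompt Engine's TypeScript, and it is not the library's API: `ChatHistory`, the `USER:`/`BOT:` layout, and the use of a character count as a stand-in for a token limit are all assumptions for illustration.

```python
from collections import deque

class ChatHistory:
    """Keep a description plus recent turns within a character budget."""

    def __init__(self, description: str, max_chars: int) -> None:
        self.description = description
        self.max_chars = max_chars
        self.turns: deque[tuple[str, str]] = deque()

    def _render(self) -> str:
        lines = [self.description]
        for user, bot in self.turns:
            lines.append(f"USER: {user}\nBOT: {bot}")
        return "\n".join(lines)

    def add(self, user: str, bot: str) -> None:
        self.turns.append((user, bot))
        # Drop the oldest turns until the history fits; always keep the newest.
        while len(self._render()) > self.max_chars and len(self.turns) > 1:
            self.turns.popleft()

    def build_prompt(self, user_input: str) -> str:
        return f"{self._render()}\nUSER: {user_input}\nBOT:"

history = ChatHistory("You are a helpful bot.", max_chars=80)
history.add("Hi", "Hello!")
history.add("What is 2 + 2?", "4")
history.add("And 3 + 3?", "6")
prompt = history.build_prompt("Thanks")
```

With this 80-character budget, adding the third turn pushes the history over the limit, so the first exchange is dropped while the description and the two most recent turns remain.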

These features simplify the process of developing, testing, and maintaining prompts, making them more effective for a wide range of LLM applications.

Tool Comparison Guide

Feature and Price Comparison

Here’s a breakdown of features, pricing, and best use cases for each tool:

| Tool | Key Features | Best For | Pricing | Model Support |
|---|---|---|---|---|
| Latitude | • Collaborative platform • Production-grade LLM features • Domain expert integration | Teams needing collaboration between experts and engineers | Open-source, free | Multiple LLMs |
| LangChain | • Pre-made prompt templates • Few-shot learning support • Python-based structure | Developers requiring flexible prompt templates | • Free Developer tier • Plus: $39/user/month • Custom Enterprise pricing | Custom connectors for any LLM |
| LLMStudio | • Interactive prompt design • Centralized LLM gateway • Visual interface | Teams wanting user-friendly prompt design | Open-source, free | OpenAI, Azure OpenAI, AWS Bedrock |
| PromptPerfect | • Context optimization • Step-by-step breakdowns • Few-shot learning | Technical teams focused on prompt accuracy | Contact for pricing | Multiple LLMs |
| Prompt Engine | • Dual-engine setup • Overflow management • Chat engine | Developers managing complex prompt systems | Open-source, free | Multiple LLMs |

What Sets These Tools Apart?

Each tool brings something unique to the table, catering to different needs:

- Collaboration Features: Latitude is designed to bridge the gap between technical and non-technical team members, making it ideal for organizations that rely on teamwork across departments.

- Integration Options: LLMStudio plugs into existing backends through its Python SDK and REST API, while LangChain’s custom connectors offer flexibility across various LLM providers.

- Optimization Approaches: PromptPerfect focuses on breaking down tasks step-by-step for precise context optimization. On the other hand, Prompt Engine uses a dual-engine system to handle complex prompt workflows efficiently.

- Budget-Friendly Choices: Open-source tools like Latitude and Prompt Engine are great for teams looking for no-cost options, while LangChain’s tiered pricing provides scalability for different budgets.

When deciding, think about what matters most for your team - whether it’s collaboration, technical flexibility, budget, or compatibility with specific LLMs. Tools that combine ease of use with broad support for multiple LLMs are becoming increasingly popular.

Next Steps in Prompt Optimization

Key Tools Overview

Tools like LLMStudio, with its centralized interface, and techniques like Corpus-in-Context (CiC) prompting, which has shown a 21% improvement in retrieval accuracy, highlight the fast-paced progress in prompt design. Latitude connects technical implementation with domain-specific knowledge, making prompt engineering more accessible as organizations expand their use of LLMs. These tools and methods are paving the way for further improvements in prompt optimization.
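For readers unfamiliar with CiC, here is a rough sketch of the idea, assuming CiC refers to Corpus-in-Context prompting: the candidate documents are placed directly in the prompt, each with an ID, and the model is asked to return the IDs that answer the query. The `build_cic_prompt` function, the line format, and the sample corpus are hypothetical and not taken from any specific tool.

```python
def build_cic_prompt(corpus: dict[str, str], query: str) -> str:
    """Place every document in the prompt with an ID, then ask for matching IDs."""
    lines = [
        "You will be given a corpus of documents, each with an ID.",
        "Return the IDs of the documents that answer the query.",
        "",
        "Corpus:",
    ]
    for doc_id, text in corpus.items():
        lines.append(f"ID: {doc_id} | CONTENT: {text}")
    lines += ["", f"Query: {query}", "Relevant IDs:"]
    return "\n".join(lines)

# Made-up sample corpus for illustration only.
corpus = {
    "0": "LLMStudio provides a gateway for multiple LLM providers.",
    "1": "Prompt Engine manages prompt overflow in chat sessions.",
}
cic_prompt = build_cic_prompt(corpus, "Which tool handles prompt overflow?")
```

This approach depends on a context window large enough to hold the whole corpus, which is why it tends to come up alongside long-context models.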

Future of Prompt Engineering

As context windows grow and models become more capable, new approaches to prompt optimization are emerging. Open-source tools are already laying the foundation for automated and context-aware prompt engineering.

Some promising areas of development include:

- Streamlined Prompt Compression: Tools like LLMLingua are working on reducing token usage without compromising the quality of outputs.

- Smarter Context Handling: Context-aware methods are evolving, improving both the accuracy and efficiency of prompts.

- Automation in Optimization: Solutions like Vertex AI Prompt Optimizer are enabling automatic tuning, especially useful for setups involving multiple models.

These advancements suggest a future where prompt optimization becomes increasingly automated and efficient, blending technical innovation with human expertise. As tools and techniques continue to evolve, organizations will find it easier to integrate LLMs into their workflows effectively.