Ultimate Guide to Training Experts in Prompt Engineering

Explore the essentials of prompt engineering to enhance AI interactions, from crafting precise prompts to understanding limitations.

Prompt engineering is the skill of crafting clear and precise instructions for AI systems like large language models (LLMs). It’s growing rapidly as industries adopt AI tools to boost productivity. This guide simplifies everything you need to know, from creating effective prompts to understanding AI limitations.

Key Takeaways:

- What is Prompt Engineering? Writing inputs that guide AI to produce accurate, desired results.

- Why It Matters: Domain experts enhance AI outputs by applying industry-specific knowledge.

- Core Skills: Learn to write structured prompts, test them, and refine for better performance.

- AI Limitations: Understand challenges like hallucinations, outdated knowledge, and reasoning gaps.

Quick Tips for Better Prompts:

- Be Clear: Directly state the task, context, and constraints.

- Use Specific Terms: Include field-specific language for precise outputs.

- Test & Improve: Regularly test prompts and refine based on results.

- Track Metrics: Measure relevance, accuracy, and efficiency to improve outcomes.

Tools to Help:

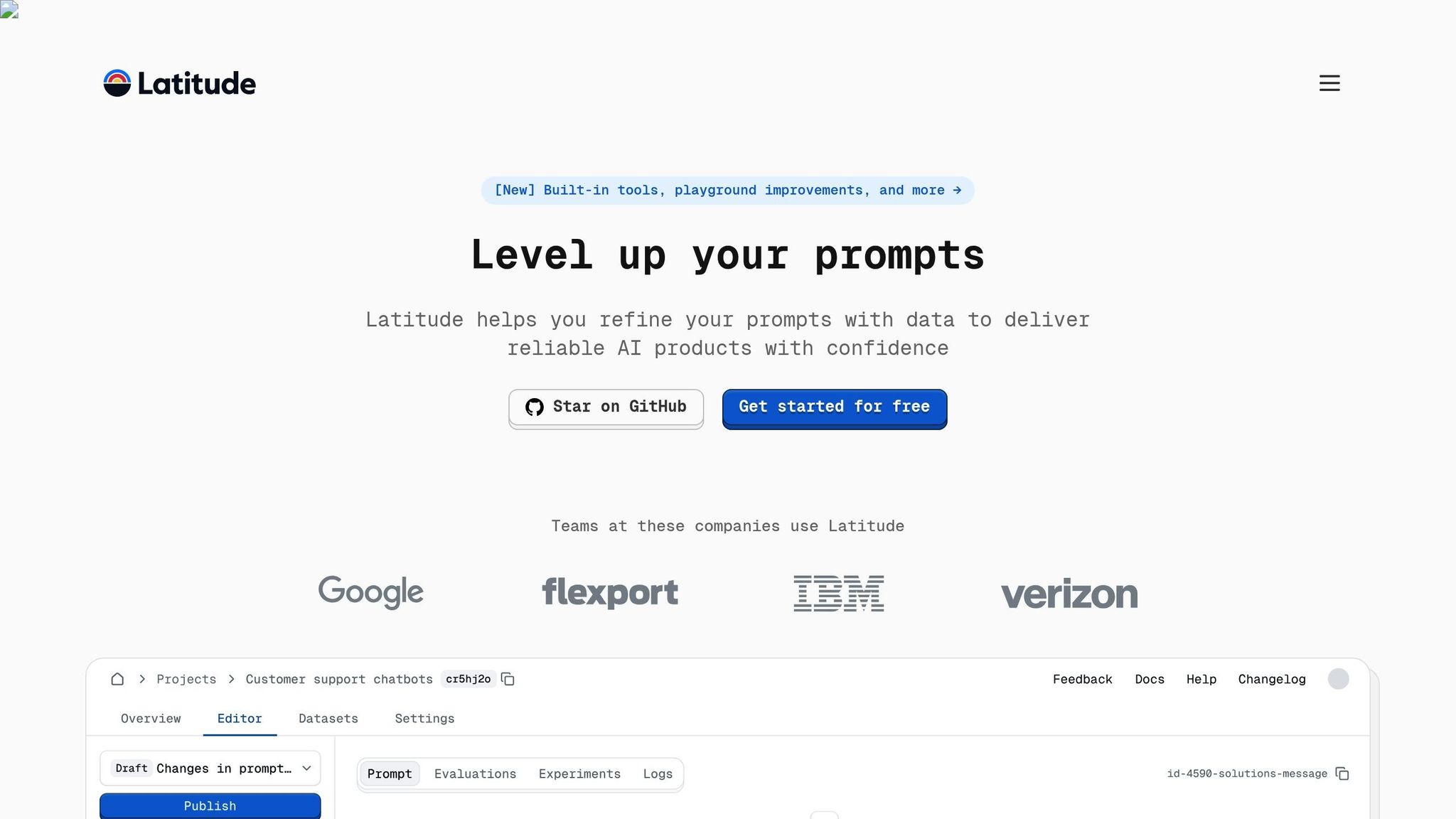

- Latitude: Great for team-based prompt engineering with features like version control and batch testing.

- Other Options: Tools like PromptLayer and LangChain offer additional functionalities for refining prompts.

Mastering prompt engineering is about combining technical skills with domain expertise to create effective, reliable AI interactions. Let’s dive deeper.

Prompt Engineering Basics

Learn the essentials of crafting prompts to make the most of large language models (LLMs).

Basic Concepts

Creating effective prompts is all about clarity and structure. A good prompt includes these components:

| Component | Purpose | Example |

|---|---|---|

| Introduction | Sets the context | "You are an expert financial analyst" |

| Context | Provides background info | "Analyze Q4 2024 earnings report" |

| Instructions | Explains the task | "Identify key growth metrics" |

| Constraints | Defines boundaries | "Focus only on revenue figures" |

Describe what you want from the model rather than what you don’t want; positive instructions tend to produce clearer and more accurate results. Understanding the limits of LLMs will also help you refine your prompts.
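The four components in the table above can be assembled programmatically. The following is a minimal sketch, not a prescribed format: the `PromptParts` class, the `build_prompt` function, and the "Context:", "Task:", and "Constraints:" labels are all illustrative assumptions.

```python
from dataclasses import dataclass


@dataclass
class PromptParts:
    """The four components from the table (field names are illustrative)."""
    introduction: str
    context: str
    instructions: str
    constraints: str


def build_prompt(parts: PromptParts) -> str:
    """Join the components in a fixed order, one per line."""
    return "\n".join([
        parts.introduction,
        f"Context: {parts.context}",
        f"Task: {parts.instructions}",
        f"Constraints: {parts.constraints}",
    ])


prompt = build_prompt(PromptParts(
    introduction="You are an expert financial analyst.",
    context="Analyze Q4 2024 earnings report.",
    instructions="Identify key growth metrics.",
    constraints="Focus only on revenue figures.",
))
print(prompt)
```

Keeping the components as separate fields makes it easier to change one of them (for example, the constraints) without touching the rest of the prompt.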

Understanding LLM Limits

LLMs are powerful, but they have their boundaries. Here are some important limitations to keep in mind:

1. Knowledge Cutoff

LLMs are trained on data available up to a certain point. For time-sensitive or recent information, you’ll need to verify accuracy manually.

2. Reasoning Boundaries

While LLMs can handle many tasks, they may struggle with advanced math or intricate logic. For such cases, rely on specialized tools.

3. Output Reliability

LLMs can sometimes produce errors or inconsistencies. Here’s a quick guide to common challenges and how to address them:

| Challenge | Solution | Implementation |

|---|---|---|

| Hallucinations | Double-check outputs | Use external verification tools |

| Inconsistent responses | Adjust temperature | Lower temperature for factual tasks |

| Complex queries | Break tasks into steps | Split into smaller, simpler tasks |

Understanding these limitations will make your interactions with LLMs more effective.
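The "break tasks into steps" row in the table above can be sketched as a small loop that feeds each answer into the next prompt. This is only an illustration under stated assumptions: `run_steps` is a hypothetical helper, and `fake_model` is a stand-in that echoes its input so the example runs without any real LLM.

```python
from typing import Callable, List


def run_steps(steps: List[str], call_model: Callable[[str], str]) -> List[str]:
    """Run each step prompt in order, passing the previous answer forward."""
    outputs: List[str] = []
    previous = ""
    for step in steps:
        # The first step has no earlier result to include.
        prompt = step if not previous else f"{step}\n\nPrevious result:\n{previous}"
        previous = call_model(prompt)
        outputs.append(previous)
    return outputs


def fake_model(prompt: str) -> str:
    """Hypothetical stand-in for a real LLM call; it echoes the first line."""
    return "answer to: " + prompt.splitlines()[0]


results = run_steps(
    [
        "List the revenue figures in the report.",
        "Compute year-over-year growth from those figures.",
        "Summarize the growth in two sentences.",
    ],
    fake_model,
)
```

In practice `call_model` would wrap whichever model client you use; keeping it as a parameter lets you test the step logic without network calls.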

Common AI Terms

Familiarizing yourself with these terms will improve your ability to design prompts and collaborate with AI:

- Natural Language Processing (NLP): The field of AI concerned with interpreting and processing human language.

- Few-Shot Learning: A technique where the model is given a few worked examples in the prompt to guide its responses. For example, when classifying customer feedback, you might show 3–5 examples of correctly labeled responses.

- Fine-tuning: The process of further training a pre-trained model on task-specific data so that it performs better on that task.

Start with straightforward prompts and gradually experiment with more complex ones as you gain confidence in the model's capabilities. Test and tweak your prompts based on the results to achieve the best outcomes.
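The customer-feedback case mentioned under Few-Shot Learning might look like the sketch below. The `few_shot_prompt` helper, the label set, and the example texts are assumptions made for illustration only.

```python
from typing import List, Tuple


def few_shot_prompt(examples: List[Tuple[str, str]], new_text: str) -> str:
    """Format labeled examples followed by the unlabeled item to classify."""
    lines = [
        "Classify each piece of customer feedback as positive, negative, or neutral.",
        "",
    ]
    for text, label in examples:
        lines.append(f"Feedback: {text}")
        lines.append(f"Label: {label}")
        lines.append("")
    # End with an open label so the model completes it.
    lines.append(f"Feedback: {new_text}")
    lines.append("Label:")
    return "\n".join(lines)


examples = [
    ("The checkout was fast and easy.", "positive"),
    ("My order arrived two weeks late.", "negative"),
    ("The package came on Tuesday.", "neutral"),
]
prompt = few_shot_prompt(examples, "Support never answered my email.")
print(prompt)
```

Using the same "Feedback:" and "Label:" layout for every example gives the model a consistent pattern to continue.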

Core Prompt Engineering Methods

Mastering prompt engineering involves a thoughtful process of creating, testing, and refining prompts. Below are some practical techniques that can help domain experts achieve better results.

Writing Clear, Detailed Prompts

Clear prompts are key to effective communication with AI. Break them into these components for better structure:

| Component | Best Practice | Example |

|---|---|---|

| Goal Definition | Clearly state the desired outcome | "Generate a technical analysis report for Q1 2025 sales data" |

| Context | Provide relevant background | "Using historical data from 2020–2024" |

| Format | Specify the output structure | "Present findings in bullet points with supporting data" |

| Constraints | Define boundaries | "Focus on top 3 performing product categories" |

Using Field-Specific Terms

Incorporate terminology specific to your field to guide the AI toward more accurate and relevant responses. For example:

- Healthcare prompts: Include diagnostic terms, standard protocols, or regulatory requirements.

- Finance prompts: Use standard metrics, key ratios, or compliance-related language.

"Avoid slang and metaphors; use precise technical terms." - Stephen J. Bigelow, Senior Technology Editor

This approach ensures clarity and aligns the AI's output with professional standards.

Testing and Improving Prompts

Testing and refining prompts is essential for achieving consistent, accurate results. Here's a step-by-step guide:

- Initial Testing: Evaluate the prompt for:

  - Accuracy of responses

  - Consistency in output

  - Processing speed

  - Cost-effectiveness

- Iterative Refinement: Document results and improve prompts using these stages:

| Stage | Action | Evaluation Method |

|---|---|---|

| Baseline | Record initial prompt performance | Measure accuracy and relevance |

| Variations | Experiment with different formats | Compare output quality |

| Validation | Use real-world datasets | Test practical applicability |

| Deployment | Implement the best version | Monitor performance over time |

- Continuous Improvement: Regular testing ensures that accuracy and efficiency are maintained as the model evolves.
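The baseline-versus-variations comparison described above can be sketched as a tiny evaluation harness. Everything here is hypothetical: the `accuracy` helper, the two prompt variants, the test cases, and `fake_model`, which is a rule-based stand-in so the example runs without a real LLM.

```python
from typing import Callable, Dict, List, Tuple


def accuracy(prompt_template: str,
             cases: List[Tuple[str, str]],
             call_model: Callable[[str], str]) -> float:
    """Fraction of cases where the model output matches the expected answer."""
    hits = 0
    for text, expected in cases:
        output = call_model(prompt_template.format(text=text))
        hits += output.strip().lower() == expected.lower()
    return hits / len(cases)


def fake_model(prompt: str) -> str:
    """Hypothetical stand-in for an LLM: answers only when given the label set."""
    if "positive or negative" in prompt:
        return "negative" if "late" in prompt else "positive"
    return "unsure"


cases = [("Great service", "positive"), ("Delivery was late", "negative")]
variants: Dict[str, str] = {
    "baseline": "Classify: {text}",
    "with_labels": "Classify as positive or negative: {text}",
}
# Score every variant on the same cases, then pick the best one.
scores = {name: accuracy(tpl, cases, fake_model) for name, tpl in variants.items()}
best = max(scores, key=scores.get)
print(scores, best)
```

Scoring every variant against the same fixed set of cases is what makes the comparison meaningful; a real evaluation set would be much larger than two examples.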

If you're using tools like Latitude (https://latitude.so) for team-based prompt engineering, leverage its features to collaborate effectively. Share successful prompts, track version history, document test outcomes, and gather feedback from your team.

Common Prompt Engineering Problems

Domain experts often face challenges in prompt engineering. Recognizing these issues and addressing them effectively can speed up learning and improve results.

Learning Technical Basics

For those without a background in AI or machine learning, understanding the technical side of prompt engineering can feel overwhelming. Breaking it down into manageable steps can make the process easier:

| Learning Area | Challenge | Solution |

|---|---|---|

| AI Capabilities | Knowing what LLMs can and cannot do | Start with simple tasks and gradually tackle more complex ones |

| Technical Terms | Understanding AI/ML jargon | Focus on key concepts first and build your vocabulary over time |

| Model Behavior | Predicting AI responses | Use systematic testing to observe and learn model behavior |

Start by building a strong foundation before diving into advanced techniques.

"Prompt engineering is like giving clear directions to a smart but sometimes confused friend. You're figuring out the best way to ask questions or give instructions so they understand exactly what you want and can give you the best answer or help possible. It's all about finding the right words to get the right results from an AI." - Tajinder Singh, Author

Once the basics are clear, the next step is understanding how to navigate the limitations of LLMs.

Working with LLM Limitations

After mastering foundational skills, it’s important to address the constraints of LLMs to refine your approach further.

- Context Management:

  - Break down complex queries into smaller, manageable parts.

  - Include only the most relevant details in each prompt.

  - Use Retrieval Augmented Generation (RAG) to access external data when necessary.

- Accuracy Enhancement: Applying advanced strategies can lead to tangible improvements. For example, Amazon Q's implementation resulted in:

  - 36% faster upgrade processes

  - 42% time savings per application

  - Four times faster modernization of legacy systems

  - 40% reduction in licensing costs
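To illustrate the context-management advice above (include only the most relevant details, and retrieve external data when needed), here is a deliberately simplified retrieval sketch. It ranks passages by word overlap with the question, which is an assumption chosen for brevity; production RAG systems typically use embedding-based search instead. The function names and sample passages are hypothetical.

```python
from typing import List, Set


def tokenize(text: str) -> Set[str]:
    """Lowercase and split on whitespace, stripping basic punctuation."""
    return {word.strip(".,?!:;").lower() for word in text.split()}


def top_passages(question: str, passages: List[str], k: int = 2) -> List[str]:
    """Rank passages by how many words they share with the question."""
    q_words = tokenize(question)
    ranked = sorted(passages, key=lambda p: len(q_words & tokenize(p)), reverse=True)
    return ranked[:k]


def build_rag_prompt(question: str, passages: List[str], k: int = 2) -> str:
    """Put only the k most relevant passages into the prompt."""
    context = "\n".join(f"- {p}" for p in top_passages(question, passages, k))
    return (
        "Answer using only the context below.\n\n"
        f"Context:\n{context}\n\nQuestion: {question}"
    )


docs = [
    "The refund policy allows returns within 30 days.",
    "Our office is closed on public holidays.",
    "Refund requests are processed within 5 business days.",
]
question = "How many business days does a refund take?"
prompt = build_rag_prompt(question, docs, k=1)
print(prompt)
```

Limiting the prompt to the top-ranked passages keeps irrelevant text out of the context, which is the point of the second bullet above.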

Combining Accuracy and Innovation

Once you've tackled the basics and addressed LLM constraints, the challenge becomes finding the right balance between precision and creativity.

| Aspect | Strategy | Impact |

|---|---|---|

| Specificity | Include detailed context and desired format | Produces consistent, targeted outputs |

| Flexibility | Allow room for creativity within limits | Encourages new ideas |

| Iteration | Test and refine prompts regularly | Improves quality and relevance over time |

For instance, when working with GitHub Copilot for Java 21, combining clear requirements with example-based prompts significantly improved accuracy.

The trick is to provide enough structure to guide the AI while leaving room for it to apply its strengths. Regular testing and adjustments ensure your prompts deliver results that are both accurate and relevant.

Prompt Engineering Tools

Selecting the right tools is crucial for training domain experts in prompt engineering. These tools simplify the process of creating, testing, and refining prompts, addressing challenges like accuracy and efficiency.

Latitude: Team Prompt Engineering

Latitude is a collaborative platform built for teams developing production-level LLM features. It bridges the gap between domain experts and engineers, offering tools that streamline the entire process. Some standout features include:

| Feature | How It Helps Domain Experts |

|---|---|

| Dynamic Templates | Manage complex scenarios with variable-based templates |

| Version Control | Keep track of changes and manage multiple prompt versions |

| Batch Testing | Test prompts on large datasets, whether real or synthetic |

| Reusable Snippets | Share context and guidelines across various prompts |

| Performance Analytics | Track response times, costs, and accuracy to improve results |

Latitude also offers tools to evaluate prompts for accuracy and identify hallucinations, while automatic logging simplifies debugging and fine-tuning.
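The idea behind variable-based templates can be shown with Python's standard `string.Template`. This is not Latitude's template syntax, which is not described here; the template text, variable names, and `render` helper are assumptions for illustration.

```python
from string import Template

# Hypothetical template; the $-placeholders are filled per request.
REPORT_TEMPLATE = Template(
    "You are an expert $role.\n"
    "Analyze the $period report for $company.\n"
    "Present findings as $format."
)


def render(template: Template, **variables: str) -> str:
    """Fill every placeholder; substitute() raises KeyError if one is missing."""
    return template.substitute(**variables)


prompt = render(
    REPORT_TEMPLATE,
    role="financial analyst",
    period="Q4 2024",
    company="Example Corp",
    format="bullet points",
)
print(prompt)
```

Failing loudly on a missing variable is a deliberate choice in this sketch: a prompt sent with an unfilled placeholder is harder to debug than an early error.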

Alternative LLM Tools

Other tools provide unique functionalities for prompt engineering. Here's a quick comparison:

| Tool | Rating | Strength | Limitation |

|---|---|---|---|

| PromptLayer | 4.6/5 | Advanced logging and team collaboration | Focused on text generation only |

| PromptPerfect | 4.3/5 | Auto-optimization for text and images | Lacks version history control |

| Helicone | 4.4/5 | Strong version control | Limited options for parameter adjustments |

| LangChain | 4.1/5 | Supports multi-step workflows | May create overly detailed prompts |

These tools complement Latitude by offering additional capabilities, giving teams more options based on their specific needs.

Using Tools Effectively

To get the most out of these platforms, focus on three key areas:

- Testing and Iteration: Use built-in evaluation and logging tools to refine prompts quickly and effectively.

- Collaboration Features: Platforms like Latitude enable smooth collaboration between domain experts and engineers, ensuring clear documentation and version control.

- Performance Monitoring: Regularly track performance metrics to maintain quality. Tools like Promptmetheus help monitor over 80 LLMs, making it easier to spot and fix issues.

Integrating these tools into your existing workflows is essential. Many platforms offer flexible deployment options, whether you prefer cloud-based solutions for quick implementation or self-hosted setups for greater control.

Tracking Prompt Results

Tracking how prompts perform allows experts to fine-tune and improve results. By keeping an eye on key metrics and refining methods, you can ensure better outcomes over time.

Success Metrics

To gauge how effective a prompt is, focus on these key metrics:

| Metric | Description | How to Measure |

|---|---|---|

| Relevance | How well the output matches the user's intent | Semantic similarity tools, manual review |

| Accuracy | How factually correct the responses are | Comparison with ground truth; BLEU/ROUGE overlap with reference answers |

| Consistency | How reproducible the responses are | Multiple runs, variance analysis |

| Efficiency | How well resources are used | Response time, CPU/GPU usage tracking |

| User Satisfaction | How happy users are with the results | Feedback forms, satisfaction surveys |

Research suggests that even small prompt tweaks can improve user satisfaction. These metrics guide the testing and refinement steps discussed earlier.
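The Consistency row above (multiple runs, variance analysis) can be approximated with a simple similarity score across repeated outputs. The sketch below uses mean pairwise Jaccard similarity over word sets; that choice of measure, the function names, and the sample outputs are assumptions, and other similarity measures would work equally well.

```python
from itertools import combinations
from typing import List


def jaccard(a: str, b: str) -> float:
    """Word-set overlap between two outputs (1.0 means identical word sets)."""
    set_a, set_b = set(a.lower().split()), set(b.lower().split())
    if not set_a and not set_b:
        return 1.0
    return len(set_a & set_b) / len(set_a | set_b)


def consistency(outputs: List[str]) -> float:
    """Mean pairwise Jaccard similarity across repeated runs of one prompt."""
    pairs = list(combinations(outputs, 2))
    if not pairs:
        return 1.0
    return sum(jaccard(a, b) for a, b in pairs) / len(pairs)


# Hypothetical outputs from running the same prompt three times.
runs = [
    "Revenue grew 12 percent",
    "Revenue grew 12 percent",
    "Revenue rose 12 percent",
]
score = consistency(runs)
print(round(score, 3))
```

A score near 1.0 means the runs largely agree; a falling score after a prompt change is a signal to inspect the outputs by hand.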

Output Review

When reviewing outputs, follow a structured approach:

- Build evaluation datasets with realistic examples.

- Use automated metrics like BLEU, ROUGE, or METEOR for initial checks.

- Combine automated scores with human judgment for deeper insights.

- Keep detailed records of findings and changes for future reference.
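The combination of automated scoring and human judgment listed above can be sketched as follows. The `unigram_f1` function is a simplified word-overlap score in the spirit of ROUGE-1, not the official ROUGE implementation, and the review threshold of 0.5 is an arbitrary assumption.

```python
from collections import Counter


def unigram_f1(candidate: str, reference: str) -> float:
    """F1 over overlapping words, counting repeats (a simplified ROUGE-1)."""
    cand = Counter(candidate.lower().split())
    ref = Counter(reference.lower().split())
    overlap = sum((cand & ref).values())
    if overlap == 0:
        return 0.0
    precision = overlap / sum(cand.values())
    recall = overlap / sum(ref.values())
    return 2 * precision * recall / (precision + recall)


def needs_human_review(score: float, threshold: float = 0.5) -> bool:
    """Flag low-scoring outputs for manual review (threshold is an assumption)."""
    return score < threshold


score = unigram_f1(
    "revenue grew in the fourth quarter",
    "revenue grew strongly in the fourth quarter",
)
print(round(score, 3), needs_human_review(score))
```

Overlap scores only measure shared wording, so a high score does not guarantee factual correctness; that is why the flagged and a sample of unflagged outputs should still be read by a person.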

"The evaluation of prompts helps make sure that your AI applications consistently produce high-quality, relevant outputs for the selected model." - Antonio Rodriguez, Sr. Generative AI Specialist Solutions Architect at Amazon Web Services

Skill Development

Improving your skills in prompt engineering is just as important as evaluating outputs. Experts should focus on these areas:

- Performance Monitoring: Use tools like PromptLayer or integrated platforms to track changes. Document successes and failures to build a knowledge base.

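- Testing and Iteration: Run A/B tests with enough users to detect the difference you care about. A reasonable starting point is at least 1,000 users per variant over a week, though the sample size actually needed depends on the size of the effect.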

- Collaborative Learning: Work closely with engineers and subject matter experts. Share insights using detailed documentation and version control systems to encourage ongoing progress.
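To check whether an A/B test like the one described above shows a statistically meaningful difference, one common option is a two-proportion z-test. The sketch below uses only the standard library; the counts are hypothetical, and the 0.05 significance level is a conventional assumption rather than a requirement.

```python
import math
from typing import Tuple


def two_proportion_z_test(success_a: int, n_a: int,
                          success_b: int, n_b: int) -> Tuple[float, float]:
    """Return (z, two-sided p-value) for the difference between two rates."""
    p_a, p_b = success_a / n_a, success_b / n_b
    pooled = (success_a + success_b) / (n_a + n_b)
    std_err = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (p_b - p_a) / std_err
    # Two-sided p-value under the standard normal, via the error function.
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value


# Hypothetical counts: users who rated the answer helpful, out of 1,000 each.
z, p_value = two_proportion_z_test(success_a=620, n_a=1000,
                                   success_b=670, n_b=1000)
significant = p_value < 0.05
print(round(z, 2), round(p_value, 4), significant)
```

This sketch does not guard against degenerate inputs (for example, zero successes in both variants), and it assumes samples large enough for the normal approximation to hold.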

Conclusion

Main Points

Prompt engineering blends technical skills with specialized knowledge. Studies reveal that experts in specific fields create more effective prompts compared to generalists, as they leverage their deep understanding of domain-specific details. Success hinges on grasping both the strengths and limitations of large language models, while being mindful of challenges like hallucinations.

The process of effective prompt engineering relies on several key elements:

| Component | Key Consideration | Impact |

|---|---|---|

| Technical Foundation | Understanding LLM parameters | Ensures consistent and high-quality outputs |

| Domain Knowledge | Using field-specific terminology | Boosts accuracy by 37% |

| Testing Protocol | Applying structured testing | Helps minimize errors |

| Collaboration Tools | Encouraging team-based approaches | Improves teamwork and results |

These elements serve as the building blocks for applying prompt engineering in real-world scenarios.

Getting Started

To put these concepts into practice, consider starting with a simple project. Tools like Latitude (https://latitude.so) provide both cloud-based and self-hosted options to help you get started.

Here’s how to take your first steps:

- Set Up Your Environment: Use version control systems and collaborative platforms to create a dedicated workspace.

- Start Small: Develop straightforward prompts tailored to your area of expertise.

- Implement Testing: Conduct batch evaluations to test how prompts perform across different situations.